AnxietyRanger

What makes you think that the fact that the rest of the industry is doing something differently (and has been for longer) serves as evidence that the rest of the industry is somehow doing things in a better way than Tesla?

Mine was a response to this:

Also worth remembering that the AP2 solution wasn't designed one afternoon by Musk on the back of a Starbucks napkin, but is the work of many skilled engineers, who would have been able to verify common scenarios like how the system would handle your Volvo blindspot example.

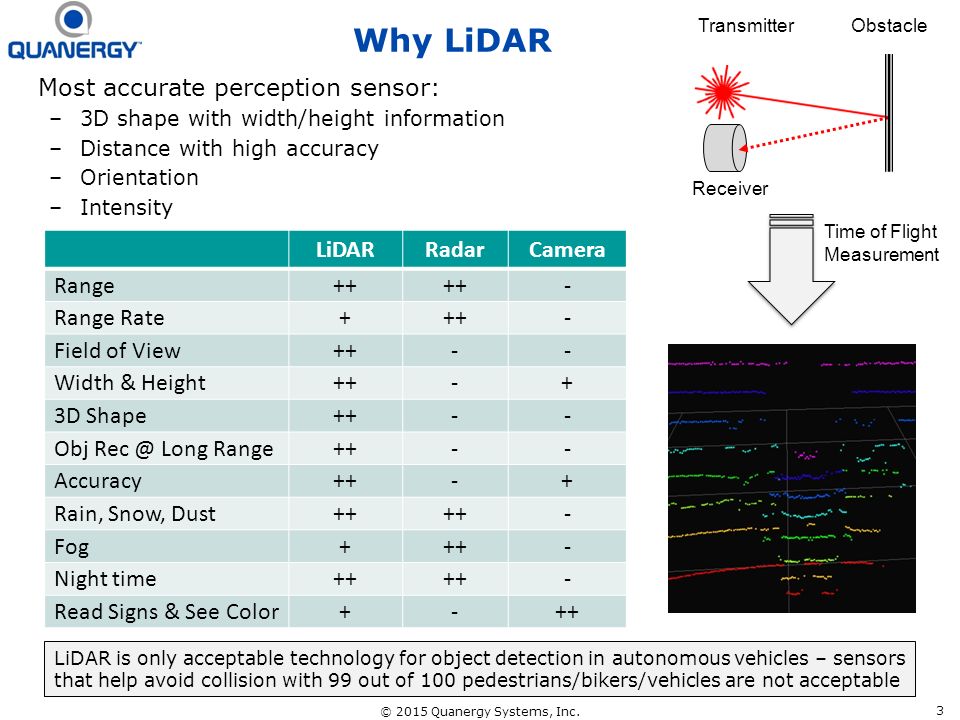

The point was - tons of other skilled engineers have been coming to a different conclusion about self-driving suites, including tons of engineers within both Silicon Valley (e.g. Google) and the traditional automotive industry (e.g. Audi/VW and Volvo). I was just pointing out that these systems too are the work of many skilled engineers, who have thought about and been able to verify (likely to a far larger extent, given the longer time they have been working on this) various common scenarios.

Look. None of us knows what the optimal FSD sensor suite will be. Neither of us - you or me - is an expert in this area, not even a pundit; we're just guys paying some attention and having some interest in it. It will be up to the future to inform us.

The second point I'm making is this: An "AP3" sensor suite may be based on considerations quite different from AP2, which means it may include for example more sensors.

I think several factors contributed to Tesla's selection of the AP2 suite, including the need to port legacy features from AP1 (hence the radar, the ultrasonics and the attempted MobilEye integration in AP2 - the board even has room for it). The rest of the suite seems to include the bare minimum of what is required for vision-based FSD, with very little redundancy and with visual blind spots, especially around the lower nose. The number of cameras was thus probably dictated in part by cost and by expected computing performance/capability.

With AP1 he built the most functional semiautonomous system on the road using the least number of sensors. That alone should show us "it's the software, stupid" - or at least "let's withhold judgment."

He - well, Tesla - did. However, that was standing on the shoulders of a giant in its own right, the MobilEye chip. Since then we have learned a thing or two about Tesla's internal software prowess in the form of the adventure that is AP2. We have also learned just how much, and probably how dangerously, Tesla was pushing the AP1 envelope, seeing how they scaled the autonomy back through nags and disengagements. MobilEye had an opinion about that as well.

What I personally view AP2 as is the bare minimum of what Tesla needed to carry the AP1 work forward (the legacy part that still runs things like TACC, blind spot detection and auto-parking) and to release a bare-minimum visual platform on which they can start developing, deploying and researching full self-driving. This is where Tesla's aggressive "do more with less and deploy it before it is ready" strategy is showing. For this purpose they probably felt the need to put a limit on how much redundancy etc. they would build into the hardware, considering its use would possibly be years away.

Here's the thing: I don't doubt the AP2 suite can do good-weather FSD, especially with an upgraded CPU/GPU. I have made that clear and, barring a company failure, I expect Tesla to deliver.

But regarding this thread, it is hard to see how the AP2 suite would somehow be so optimal that once these above-mentioned considerations for Tesla change and the work progresses on the software side, it wouldn't - possibly quickly - be followed by an upgraded sensor suite.

And it is hard to see that the AP2 sensor suite would somehow be superior to the far more robust suites others have been working on for much longer.

Is there some track record you can point to from 2008 'til now that shows the rest of the industry has been getting things right/better (with propulsion systems, sales channels, autopilot systems) with what they bring to market vs Tesla?

There are many things the rest of the industry does better than Tesla, but most importantly, Tesla's successes in some areas do not automatically translate into others. Of the ones you mentioned, I will obviously agree on the world-changing work on BEVs by Tesla. They changed the world. Also, AP1 was a formidable achievement, no doubt. You did not mention the Supercharger network, but I will also chalk that up to Tesla. As for the sales channel, well, there are so many issues with the Tesla model that some refuse to see that I would hardly call it automatically better. I've certainly had better service through the traditional model than through Tesla.

But again, the thing is: we are talking about the AP2 suite and what/when might be in AP3. Even as you list AP1 as a major achievement, you know it was eventually replaced by AP2. You also know that not all AP1 features made it in the end. You know the history of Tesla failing to meet Performance specs with P85D, P90DL Vx, which has eventually meant new products being introduced to finally meet said missed specs. There is plenty of history there as well.

So the idea that an AP3 sensor suite would add more sensors, e.g. more cameras for redundancy and/or blind spots and/or depth vision, or more radar coverage for blind-spot monitoring or cross-traffic monitoring... or even lidar if the rest of the industry made a better bet, seems quite plausible to me. And given Tesla's missed specs in the past, it is also possible that an AP3 suite might fix mistakes in AP2.

What Tesla shipped in AP2 is IMO a bare minimum and sort of a first effort. What they ship once costs come down, the software matures and they learn more from what they have been doing might be - and IMO likely will be - different.
