boonedocks

MS LR Blk/Blk 19”

She was a man.... as Seinfeld would say, "not that there's anything wrong with that."

Or, as we old-timers would say, “SNL: it was all Pat’s fault.”



The other video, which you haven't found but which is also linked in this thread, was taken by someone else on their phone while driving the same stretch of road at night. It shows that the released video is quite deceptive about how dark that area is. It's a typical urban road, lit up like a Christmas tree. Something is VERY wack with the gamma on the Uber video that the police released.

I don't see offhand where that is, but here's a still shot of the area.

Any details on headline - Arizona pedestrian is killed by Uber self-driving car

P.S. The driver isn't a "he".


...so quickly...

If only they had come forward when she was homeless.

Not so fast, apparently!

Family members (her mother, father, and son) are still coming forward:

More family members of woman killed in Uber self-driving car crash...


An interesting opinion piece criticizing Arizona's weaker autonomous vehicle rules.

It also cites a statistic of about 1 fatality per 100 million miles of human driving versus 1 per 10 million miles of autonomous vehicle driving.

That means that, per mile driven, human drivers are currently 10 times safer than autonomous vehicles, or equivalently, autonomous vehicles are 10 times deadlier than humans!
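The 10x comparison is a single division once both figures are put on the same scale. Here is a minimal sketch using only the two rates quoted in the opinion piece (not independently verified); the constant and function names are made up for illustration. Keep in mind the autonomous vehicle figure rests on far fewer total miles than the human figure, so the ratio is a rough point estimate.

```python
# Rates as quoted in the opinion piece (illustrative constants, not verified data).
HUMAN_MILES_PER_FATALITY = 100_000_000
AV_MILES_PER_FATALITY = 10_000_000


def rate_per_100m_miles(miles_per_fatality: int) -> float:
    """Convert 'one fatality every N miles' into fatalities per 100 million miles."""
    return 100_000_000 / miles_per_fatality


human_rate = rate_per_100m_miles(HUMAN_MILES_PER_FATALITY)  # 1.0
av_rate = rate_per_100m_miles(AV_MILES_PER_FATALITY)        # 10.0

# How many times more fatalities per mile the AVs had, on these numbers.
relative_risk = av_rate / human_rate                         # 10.0
print(relative_risk)
```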

Watch Video of Scottsdale Victims Minutes Before Fatal Plane Crash on Golf Course

It was an automated plane? (or is that the wrong link?)

"Those rides can be clumsy and filled with hard brakes as the car stops for everything that may be in its path."

Another interpretation with the same conclusion:

Uber’s self-driving software detected the pedestrian in the fatal Arizona crash but did not react in time

That means both the sensors and the software detected the pedestrian, but the settings chosen by the software programmers allowed the accident to happen.

If they hadn't configured their software that way:

It sounds like Uber has chosen smoothness and comfort over safety.

Maybe smoothness, but I'm still going with my theory: the leading and trailing wheel spokes (along with the bike's small tube cross-section) caused the rain/snow filter to activate, so the system 'saw' through the pedestrian to the road beyond.

That's a good explanation and theory.

However, according to the articles, there's nothing wrong with the sensors.

It's the immature Uber software that isn't advanced enough to classify a pedestrian with a bicycle as an important obstacle to avoid.

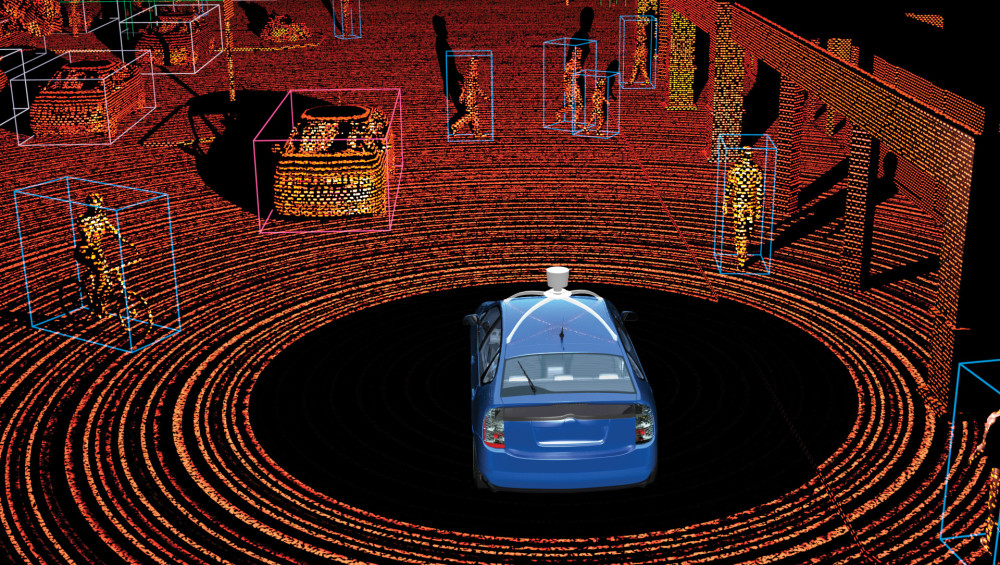

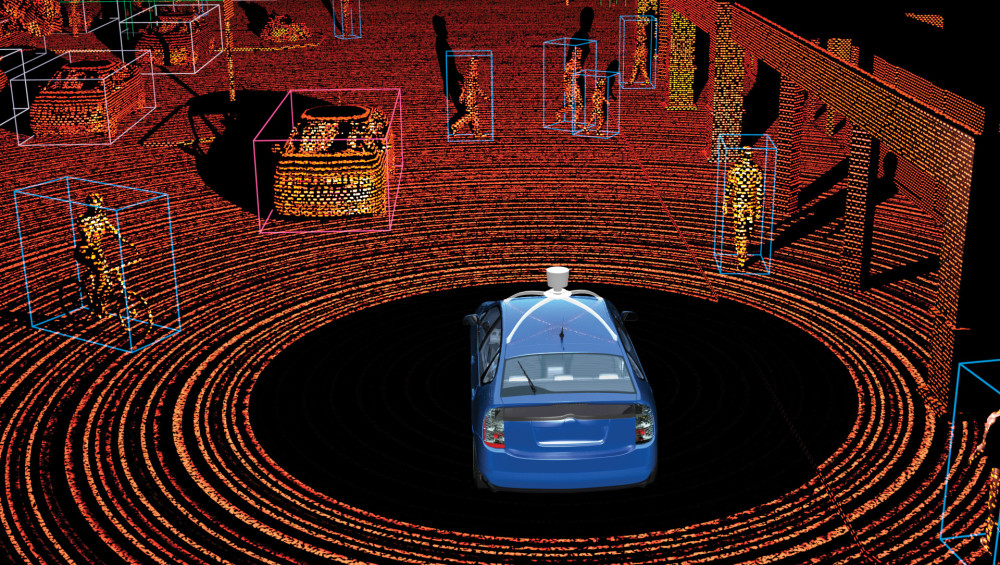

LIDAR sees in three dimensions. It can measure that a pedestrian with a bicycle has thickness, so software engineers can write code to avoid hitting it, rather than treating it as a paper-thin flat object that the car can simply run over.
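To illustrate the thickness idea, here is a minimal sketch that checks whether a cluster of LIDAR returns is thick in every direction or essentially flat. This is not Uber's or anyone else's actual code: the coordinate frame, the 5 cm threshold, the function names, and the sample points are all hypothetical, chosen only to show the principle.

```python
from typing import Sequence, Tuple

# (x, y, z) in metres. Hypothetical frame: x forward, y left, z up.
Point = Tuple[float, float, float]

# Hypothetical threshold for illustration only: a cluster whose thinnest
# side is under 5 cm is treated as a flat object (paper, plastic bag).
MIN_THICKNESS_M = 0.05


def bbox_extents(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Size of the axis-aligned bounding box around a cluster of LIDAR returns."""
    if not points:
        raise ValueError("need at least one point")
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def has_thickness(points: Sequence[Point], min_thickness: float = MIN_THICKNESS_M) -> bool:
    """True if the cluster's thinnest box side reaches the threshold (not a flat sheet)."""
    return min(bbox_extents(points)) >= min_thickness


# Made-up clusters for illustration.
pedestrian_with_bike = [(20.0, -1.0, 0.0), (20.4, 0.8, 1.7), (19.8, 0.2, 0.9)]
paper_on_road = [(15.0, -0.2, 0.0), (15.3, 0.1, 0.0), (15.1, 0.0, 0.0)]

print(has_thickness(pedestrian_with_bike))  # True
print(has_thickness(paper_on_road))         # False
```

An axis-aligned box is the simplest possible measure and would misjudge a flat object tilted relative to the axes; a real perception stack would use far more robust shape and classification methods.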

On the other hand, for years other companies such as Waymo have been able to recognize and classify many objects, including the pedestrians and bicyclists below:


Updated: link corrected

Ducey's Drive-By: How Arizona Governor Helped Cause Uber's Fatal Self-Driving Car Crash

“According to Uber, emergency braking maneuvers are not enabled while the vehicle is under computer control, to reduce the potential for erratic vehicle behavior. The vehicle operator is relied on to intervene and take action. The system is not designed to alert the operator.”

I think the term is negligent. Negligence: carelessness that causes harm to life. Those three facts together: wow, what a stupid way to test their system.