Um. So, here we are again, arguing about whether the NN stuff in a Tesla is trainable in any sense by the users.

Every half year or so, I raise this possibility, since the rough block diagram of a NN computer (and this is generalized, so it includes wetware as well as hardware) always shows feedback from the output of said NN back to the NN weights and all that.

This gets jumped on by Various Posters who state that they've seen tweets or talked with actual Tesla coders or whatever; most of the arguments along these lines rest on the stated fact that the load delivered to the car has a fixed checksum. Hence, if the load has a fixed checksum, that checksum covers the weights, and therefore the Weights Can't Change.

To which my comment, in amongst the shouting, has been, "So what? CS majors are inventive." One could imagine situations where the weights come at fixed values, but are then allowed to have offsets that get nudged up and down within some range over time.
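For the sake of argument, here's a rough sketch of what I mean. This is purely hypothetical and not anything I know Tesla to do: the class name `OffsetLayer`, the `max_offset` bound, and the `nudge` method are all made up for illustration. The point is only that the shipped weights can stay bit-for-bit identical (so the checksum still matches) while a separate, bounded set of offsets changes what the network effectively uses.

```python
import hashlib
import struct


def checksum(weights):
    """SHA-256 over the packed double values of the shipped weights."""
    packed = struct.pack(f"{len(weights)}d", *weights)
    return hashlib.sha256(packed).hexdigest()


class OffsetLayer:
    """Hypothetical layer: frozen base weights plus bounded local offsets."""

    def __init__(self, base_weights, max_offset):
        self.base = tuple(base_weights)            # shipped values, never modified
        self.base_checksum = checksum(self.base)   # what the delivered load would be checked against
        self.max_offset = max_offset               # assumed limit on local drift
        self.offsets = [0.0] * len(self.base)      # the only part that changes in the car

    def nudge(self, index, delta):
        """Move one offset by delta, clamped to [-max_offset, +max_offset]."""
        raw = self.offsets[index] + delta
        self.offsets[index] = max(-self.max_offset, min(self.max_offset, raw))

    def effective(self):
        """Weights actually used: base plus current offset."""
        return [b + o for b, o in zip(self.base, self.offsets)]

    def verify(self):
        """True as long as the shipped weights are untouched."""
        return checksum(self.base) == self.base_checksum


layer = OffsetLayer([0.5, -1.0, 2.0], max_offset=0.1)
layer.nudge(0, 0.05)   # small local adjustment
layer.nudge(1, -0.5)   # too big, gets clamped to -0.1
print(layer.effective())
print(layer.verify())
```

The checksum only ever sees `base`, so it passes no matter what the offsets do. Whether anything like this exists in the car is exactly the open question.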

And part of my commentary has been driven by observation. It sure has seemed that on one day or drive the car acts this way, then, on another day or drive, it acts that way. Now, that's explainable by minor differences in traffic, big complicated feedback systems with strange internal variables, or whatever... but niggling along was the idea that the overall system was doing some self-training.

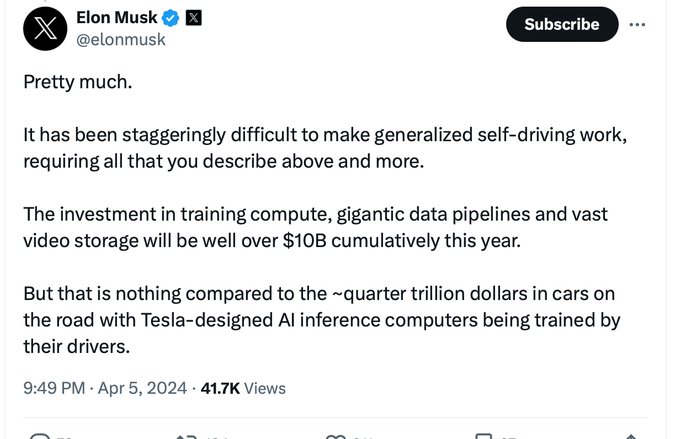

It may very well be that Elon's comment was all about the feedback going back to the Mothership and the weights being changed there. And, if that's the case, none of the above holds. But... there's some room in there for a bit of autonomous training of this and that, with various results going back to the Mothership to see how changing a particular weight, on an ensemble basis across many, many cars, affected how those cars drove.

Fun.