Do you believe Tesla's current FSD could handle the car in front braking at full ABS all the way to a stop, in every case, without hitting it, or is FSD "stupid"?

You're ignoring the possibility that the answer is "both".

A human takes, on average, 2.3 seconds to start braking. The Tesla should take, on average, maybe 100 milliseconds or so. So it should be possible for the Tesla to handle another car panic braking without too much trouble, whereas a human driver would probably cause a rear-end collision if they weren't leaving a proper following distance.
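A rough way to see why reaction time dominates: if both cars brake equally hard, the gap shrinks by exactly the distance the follower covers before its brakes engage. The sketch below uses the 2.3 s and 100 ms figures above, and assumes a 2-second gap as the "proper following distance" and identical deceleration for both cars; the function names are just illustrative.

```python
FT_PER_S_PER_MPH = 5280 / 3600  # 1 mph is about 1.467 ft/s


def distance_ft(speed_mph: float, seconds: float) -> float:
    """Distance covered at constant speed over the given time."""
    return speed_mph * FT_PER_S_PER_MPH * seconds


def gap_after_reaction_ft(speed_mph: float, gap_s: float, reaction_s: float) -> float:
    """Gap left once both cars stop, assuming both decelerate at the same rate.

    With equal deceleration, the gap shrinks by exactly the distance the
    follower travels during its reaction time. A negative result means
    the follower hit the car in front.
    """
    return distance_ft(speed_mph, gap_s) - distance_ft(speed_mph, reaction_s)


for label, reaction_s in [("human", 2.3), ("computer", 0.1)]:
    left = gap_after_reaction_ft(60, 2.0, reaction_s)
    print(f"{label}: {left:.1f} ft of gap left")
```

Under those assumptions, the 2.3 s driver comes up about 26 ft short, while a 0.1 s reaction still leaves roughly 167 ft. Real cars don't brake identically, so treat this as a back-of-the-envelope check.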

A following distance of 2 seconds in many areas of the USA will end up with multiple cars moving into that space. At 60 MPH, that's 176 feet. To say that it should always be safe to just jam on your brakes, and that anyone who hits you is "stupid", is not a very honest view of driving.

The law says that if you rear-end me, it is your fault, period, with no exceptions other than cases where there was inadequate time for you to slow down between when I got into the lane and when I slammed on the brakes. So from a liability perspective, I have no liability if I bury it in the brakes to avoid hitting someone in the road, whereas if I allow myself to hit someone in the road, I have 100% liability.

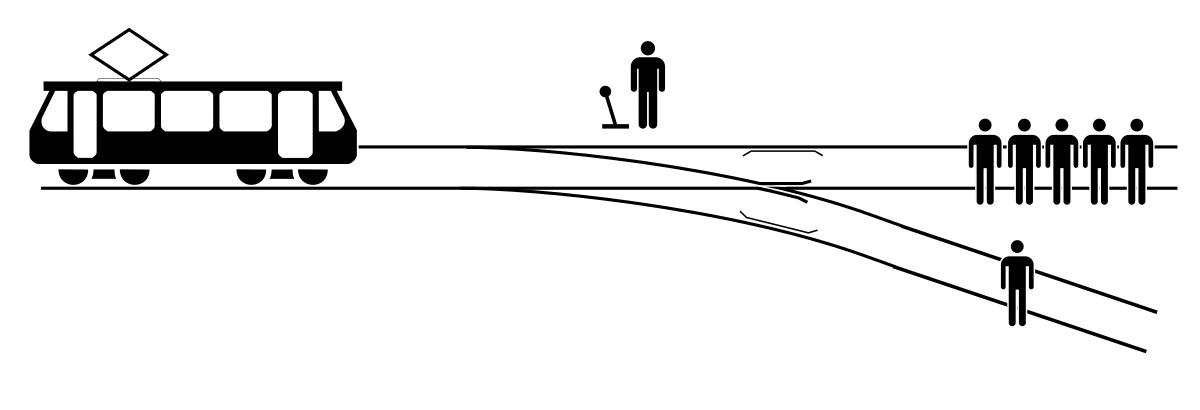

The correct choice is always "evade if safe; if not, slow down as much as humanly possible to minimize the damage and injuries". Period. That's what the law says. You can disagree with the law if you want to, but that's what the law says, and I happen to think that it's the correct call. Better to have a hundred vehicles piling up with a small speed differential between each pair of cars than to have a single collision with a 70 MPH speed differential into an immovable object, a person, or just about anything else that could go flying off and hit someone's windshield.
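To put rough numbers on that trade-off, here's an idealized sketch. It treats each collision as perfectly inelastic, assumes equal-mass cars, and picks a 5 mph differential per pair purely as an example; the 1800 kg mass is an assumption that cancels out of the ratios, and dissipated energy is only a crude stand-in for injury risk.

```python
MPH_TO_M_S = 0.44704  # exact: 1 mph = 0.44704 m/s
MASS_KG = 1800.0      # assumed mass of a typical car; cancels out of the ratios


def car_to_car_energy_j(delta_v_mph: float, mass_kg: float = MASS_KG) -> float:
    """Energy dissipated when two equal-mass cars collide and move together.

    In the centre-of-mass frame each car carries dv/2, so a perfectly
    inelastic collision dissipates m * dv^2 / 4.
    """
    dv = delta_v_mph * MPH_TO_M_S
    return mass_kg * dv ** 2 / 4


def car_to_wall_energy_j(speed_mph: float, mass_kg: float = MASS_KG) -> float:
    """Energy dissipated when a car stops against an immovable object: m * v^2 / 2."""
    v = speed_mph * MPH_TO_M_S
    return mass_kg * v ** 2 / 2


single = car_to_wall_energy_j(70)
one_link = car_to_car_energy_j(5)  # assumed 5 mph differential per pair
print(f"one 70 mph impact: {single / one_link:.0f}x a 5 mph car-to-car impact")
print(f"100 such impacts combined: {100 * one_link / single:.2f} of the single crash")
```

With these assumptions, the single 70 MPH impact dissipates about 392 times the energy of one 5 mph car-to-car bump, and even 100 such bumps add up to only about a quarter of it.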

People drive closer than this because they have done it for decades with no issues, because human drivers don't brake like this. This is why the automotive industry and NHTSA actually consider inadvertent brake application to be quite dangerous, rather than completely safe as you argue.

Inadvertent. That's the key word. That's not the same thing as using the brakes intentionally to avoid a legitimately unsafe situation. People hate Tesla's phantom braking because it is stupid.

If a car going 3 MPH slower than you intrudes into your lane, AP slows down as though a car going 3 MPH (rather than 3 MPH slower than you) had intruded into your lane. In those circumstances, it is never necessary to slow down below the other vehicle's speed, but AP frequently does.
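A minimal sketch of the "never slow below the other car" rule for a cut-in: target the lead vehicle's speed and brake just hard enough that the closing speed reaches zero before the gap does. It assumes the lead holds a constant speed and we decelerate at a constant rate; `cut_in_response`, the 50 ft gap, and the specific speeds are hypothetical.

```python
FT_PER_S_PER_MPH = 5280 / 3600
G_FT_PER_S2 = 32.174  # standard gravity in ft/s^2


def cut_in_response(ego_mph: float, lead_mph: float, gap_ft: float,
                    buffer_ft: float = 0.0) -> tuple[float, float]:
    """Return (target_speed_mph, required_decel_g) for a car cutting in ahead.

    Hypothetical helper. Assumes the lead holds a constant speed and we
    brake at a constant rate. The target is the lead's own speed: slowing
    below it is never needed to stop the gap from closing.
    """
    if lead_mph >= ego_mph:
        return ego_mph, 0.0  # not closing, so no braking needed
    usable_ft = gap_ft - buffer_ft
    if usable_ft <= 0:
        raise ValueError("gap is already inside the buffer")
    closing_ft_s = (ego_mph - lead_mph) * FT_PER_S_PER_MPH
    # Relative distance used up while closing speed falls to zero: dv^2 / (2a)
    decel_ft_s2 = closing_ft_s ** 2 / (2 * usable_ft)
    return lead_mph, decel_ft_s2 / G_FT_PER_S2


target, decel_g = cut_in_response(ego_mph=65, lead_mph=62, gap_ft=50)
print(f"target {target} mph, about {decel_g:.3f} g")
```

For a 3 MPH differential with 50 ft of room, the required braking comes out around 0.006 g, a tiny fraction of a hard stop, and the car never needs to go below 62 mph.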

It also confuses things outside the lane (e.g. guard rails) with cars, and suddenly decides that part of the car in front of you has stopped, even though that makes little sense (part of a car doesn't generally stop on its own).
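One way to catch the "part of the car suddenly stopped" case is a physical plausibility check on each tracked object's frame-to-frame speed change. This is a hypothetical sketch, not how Tesla's stack works: the 1.2 g ceiling is an assumed tuning value, and `is_plausible` is an illustrative name.

```python
G_FT_PER_S2 = 32.174
MAX_PLAUSIBLE_DECEL_G = 1.2  # assumed ceiling for a road car; a tuning choice, not a spec


def is_plausible(prev_speed_ft_s: float, new_speed_ft_s: float, dt_s: float) -> bool:
    """Reject a speed drop that would need more braking than a car can produce.

    Only checks deceleration; speed increases always pass this test.
    """
    decel_ft_s2 = (prev_speed_ft_s - new_speed_ft_s) / dt_s
    return decel_ft_s2 <= MAX_PLAUSIBLE_DECEL_G * G_FT_PER_S2


# A lead car at 88 ft/s (60 mph) that "stops" between two frames at 30 fps:
print(is_plausible(88.0, 0.0, 1 / 30))
# The same car losing 1 ft/s between frames (under 1 g of braking):
print(is_plausible(88.0, 87.0, 1 / 30))
```

The first reading implies roughly 82 g and gets flagged as a bad measurement or misassociated detection rather than triggering a panic stop; the second is ordinary braking and passes.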

Those problems are bugs that need to be fixed. But they are independent of whether the vehicle should stop for or avoid an actual object in the road, which it should do unless the time between detection and impact makes it impossible to do so safely.

The critical thing is to react quickly, reassess quickly, and then adjust quickly. If you think there's something wrong that requires braking, start braking a little bit, verify your data in the next frame, and either increase or decrease braking in a rapid feedback loop. Don't brake at 100% instantly, because spending 1/30th of a second verifying the data against another frame is almost never critical, but don't wait to start ramping up the brakes in case it's real. Then adjust quickly, and if you were wrong, get back up to speed quickly.
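That ramp, verify, and adjust loop can be sketched as a rate-limited brake command updated once per camera frame. The 30 fps rate comes from the 1/30th of a second figure; the initial brake level and ramp rates are assumptions, and `next_brake`/`simulate` are hypothetical names.

```python
FRAME_DT_S = 1 / 30    # one camera frame, per the 1/30 s figure in the post
INITIAL_BRAKE = 0.2    # assumed: gentle first response to an unverified detection
RAMP_UP_PER_S = 3.0    # assumed: about +0.1 brake per frame while the hazard persists
RAMP_DOWN_PER_S = 6.0  # assumed: release twice as fast to recover from false alarms


def next_brake(current: float, hazard_seen: bool, dt_s: float = FRAME_DT_S) -> float:
    """One step of a rate-limited brake command, 0.0 (off) to 1.0 (full)."""
    if hazard_seen:
        # Start braking immediately, then keep ramping while the data agrees.
        return min(1.0, max(INITIAL_BRAKE, current + RAMP_UP_PER_S * dt_s))
    # Detection not confirmed this frame: back off quickly.
    return max(0.0, current - RAMP_DOWN_PER_S * dt_s)


def simulate(detections: list[bool]) -> list[float]:
    """Run the loop over per-frame detections and record the brake command."""
    brake, history = 0.0, []
    for seen in detections:
        brake = next_brake(brake, seen)
        history.append(round(brake, 2))
    return history


print(simulate([True, False, False]))  # phantom: one frame of light braking
print(simulate([True] * 10))           # confirmed hazard: ramps up to full braking
```

A single-frame phantom detection produces one frame of light braking that is released on the next frame, while a hazard confirmed frame after frame reaches full braking in about 0.3 s. Releasing faster than applying is one way to get back up to speed quickly after a false alarm.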

All of this is the same thing that will happen with AP if it only crashes once every 50,000 miles. People will get complacent and pay less attention, and then it will steer into a barrier one day, and you'll call them "stupid." But it's not stupid, it's human nature, and good systems designed for humans take human nature into account. You're effectively saying that everyone who has ever been harmed by complacency was stupid. Yet if you search Google for "safety systems complacency", you find articles on how to battle this aspect of human nature, not just a bunch of safety experts calling users stupid.

Agreed. That's very much human nature. I wouldn't be surprised if AP occasionally acted like it was going to do something stupid (but then didn't) entirely to scare drivers into paying attention. Maybe that's the real story behind phantom braking.