...Look over the page and tell me where it says that EAP and FSD are beta features.

I do admit that it is difficult for an average consumer to realize that it's beta.

I knew Autopilot was beta when it was first announced in 2014 because people paid for it up front on the promise that it would be activated in subsequent software releases, not right away.

I then read the blog, which reinforced my suspicion: "Building on this hardware with future software releases, we will deliver a range of active safety features, using digital control of motors, brakes, and steering to avoid collisions from the front, sides, or from leaving the road."

Lots of people didn't read the word "future"! They bought something in 2014 that wouldn't work until the "future"! That's not final production quality at all!

I then read the owner's manual, and it clearly uses the word "beta"!

When AP2 was announced in 2016, it was the same thing. People bought it but couldn't use it. It was nowhere near final production quality at all!

The blog said: "Before activating the features enabled by the new hardware, we will further calibrate the system using millions of miles of real-world driving to ensure significant improvements to safety and convenience. While this is occurring, Teslas with new hardware will temporarily lack certain features currently available on Teslas with first-generation Autopilot hardware, including some standard safety features such as automatic emergency braking, collision warning, lane holding and active cruise control. As these features are robustly validated we will enable them over the air, together with a rapidly expanding set of entirely new features. As always, our over-the-air software updates will keep customers at the forefront of technology and continue to make every Tesla, including those equipped with first-generation Autopilot and earlier cars, more capable over time."

It said clearly that when you bought it, it wouldn't work. You had to have patience because it says "more capable over time". These are hints that it's not final production quality at all!

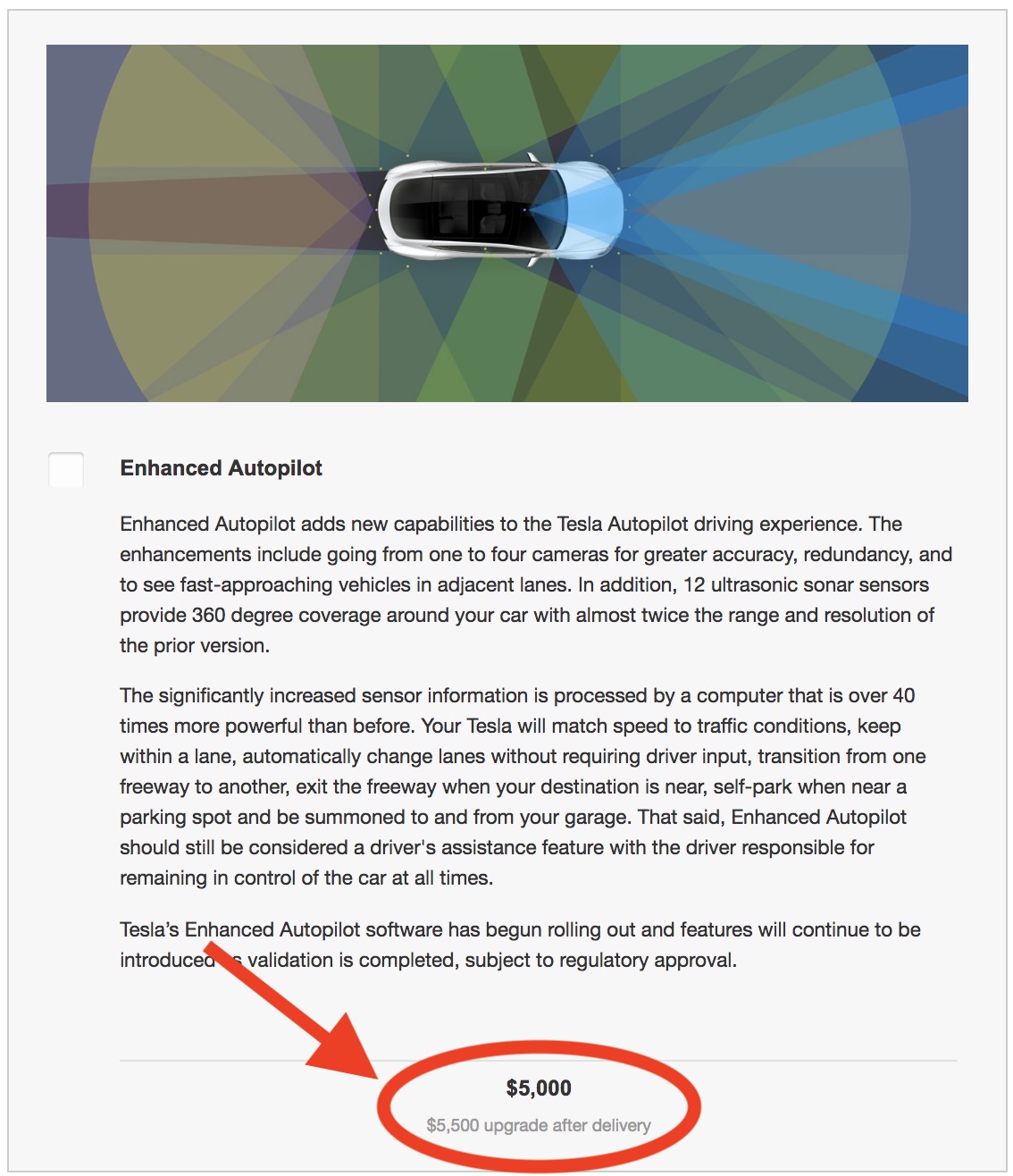

When you ordered Enhanced Autopilot, the order page said it "has begun rolling out". "Begun" is not the same as finished. If it's not finished, it's nowhere near final production quality at all!

Photo credit: electrek.co

In summary, people may not know that they just bought a beta feature, but the clues are there. The legal document is there!