> Waymo has all those LIDAR hanging off it, screaming that this is an unusual car and should be treated as such. It would be nice if Teslas came with something that other drivers intuitively understood to mean that the car is using automation software. I know that some people put bumper stickers on their Teslas, but stickers are only visible at short distances, from behind, and what they say isn't true when the driver is actively controlling the car.
>
> I can imagine a NHTSA mandate that cars running automation software must clearly indicate it to other drivers. How about a taxi light on top that can light up with L1 to L5? Or some roof rack-mounted light bar with 5 bands that light up depending on the level of driving automation?
>
> I just think that being able to communicate to other drivers that "It's not me, it's my car" is something that should happen.

Why is such a mandate necessary? We don't even require cars with teen or learner drivers to post a special sign, and I imagine the probability of an accident with them is drastically higher than for your average driver. Nor has there ever been a suggestion of external status lights for other automation features (like CC, ACC, lane keeping, etc.). It really should be up to the owner whether they want to advertise the capabilities of their vehicle with a sign.

Autonomous Car Progress

- Thread starter stopcrazypp

- Tags

- Autonomous Vehicles

> Why is such a mandate necessary?

Why would I not want people to know that I'm using beta test software in public? Or that I'm an inexperienced driver? Or that I'm not a local and don't know the roads? Any considerate person would want other considerate people to know that - which is the reason we don't have such things.

> Why would I not want people to know that I'm using beta test software in public? Or that I'm an inexperienced driver? Or that I'm not a local and don't know the roads? Any considerate person would want other considerate people to know that - which is the reason we don't have such things.

All of that is up to the individual driver, but you are instead talking about a policy mandated by law. Unless there is a more compelling reason to push such a law, the privacy of the owner would trump any curiosity of other drivers.

powertoold

Active Member

Even Dan doesn't understand what design intent means as it relates to the SAE definitions, speaks volumes about how useless the definitions are:

> 1. Do you think Huawei will launch City driving in US by years end and how will it perform in showdowns vs Tesla FSDb everywhere?

No, it won't, because Huawei is banned in the US and parts of the EU.

> 2. If Tesla outperforms Huawei will you finally admit that Tesla FSD is ahead and that you were mistaken?

If Huawei were allowed in the US and Tesla FSD outperformed it then yeah I will declare that Tesla is ahead of Huawei.

The same way I declare that Waymo is ahead of Cruise due to functionality and performance. And that both of them are ahead of Mobileye.

I'm not a fanatic of a particular company. I'm an AV/ADAS fan.

EVNow

Well-Known Member

> If Huawei were allowed in the US and Tesla FSD outperformed it then yeah I will declare that Tesla is ahead of Huawei.

What if

- Huawei is better than Tesla in China AND

- Tesla is better than Huawei in the US?

> Whether or not China gets FSD beta is irrelevant to me because I want to use it here. The same applies to Huawei being ahead of Tesla or not. I'd love to see actual lay-user videos of any fsd equivalent system though, and if they seem better than fsd beta, I'd say so.
>
> Contrary to what you say, I'm not on any team. I'm a Tesla fsd fanboy because there is a "bitter lesson" in NN training: you need large, diverse, and accurate dataset.
>
> I don't understand how anyone following AVs and NNs can look at Cruise, Mobileye, and Waymo and conclude that they're ahead. You need a large fleet: for unit-testing, for data, for shadow mode, for raw sensor data, for so many important aspects of AVs development. Not only to survive as a healthy business but also to feed NN predictions in all sorts of environments.
>
> Tesla is making all the right moves to capitalize on their large fleet, and it's showing in their progress.

But you made the claim that Tesla is ahead of everyone else and is the only general system?

Ah, the allure of buzzwords! They can certainly create a captivating narrative, but when it comes to truly understanding the intricacies of a subject, it's important to dig deeper into the details. It's like saying the Charlotte Bobcats have the best player in the NBA: to determine whether that is true, you have to analyze and catalog all the players on the Charlotte Bobcats roster, as well as those on every other team in the NBA. Only by comparing their individual statistics could you determine whether a Bobcats player indeed surpasses everyone else.

While it's easy to tout Tesla as the frontrunner based on their extensive fleet and data collection, a closer look at the specifics of their technology and that of their competitors reveals a more nuanced picture.

Neural Network Training

When it comes to neural network (NN) training, Waymo has been leveraging the power of Google TPU, including the TPU4, while Tesla is still working on setting up Dojo. Consequently, Waymo has been enjoying the benefits that Tesla fans attribute to Dojo from the very beginning.

Large Fleet / Data

Now, if we were to judge AV superiority based on fleet size and data, companies like Mobileye with their full supervision fleet of 100k+ cars, NIO with ~50-100k+ cars, Xpeng with ~100k cars, and Huawei with ~5k cars would all be considered frontrunners ahead of Waymo and Cruise. They boast impressive arrays of sensors, including 8mp cameras (as opposed to Tesla's 1.2mp), *surround radars, *lidars and more powerful compute capabilities, such as NIO's 1000+ TOPS.

When it comes to neural networks, data acquired using radars or lidars typically yields superior results compared to camera data alone. Moreover, one cannot simply add radar or lidar data to pre-existing camera data at a later stage. Consequently, the data collected by other companies is far more valuable than Tesla's, as they can ground it more accurately. Tesla, on the other hand, relied on an outdated ACC radar for ground truth, which was limited to forward tracking of moving objects (not static ones) and had a narrow field of view.

In contrast, other companies train their vision neural networks with rich camera data, seamlessly fused with high-quality HD radar information (and in some cases, even ultra-imaging radar data), as well as high-resolution lidar data. This comprehensive approach to data collection and fusion ultimately leads to more robust and reliable neural network models.

ML / Neural Network Architecture

It's crucial to remember that the cutting-edge ML and NN architectures of today didn't materialize out of thin air. Waymo, for example, had been using transformers long before Tesla adopted them. Similarly, other AV companies have been employing multi-modal prediction networks, while Tesla initially relied on their C++ driving policy before eventually making the switch.

Tesla was running an instance of their C++ driving policy (planner) as a prediction of what others would do. They then ditched that and moved to actual prediction networks that others had been using for years. Then they finally caught up and moved to a multi-modal prediction network, which others had also been using. Unlike Tesla fans, Tesla tells you exactly what they are doing and not doing in their tech talks, AI conferences and software updates. It's the fans who invent mythical fables and attach them to Tesla.

Heck just days ago Elon admitted their pedestrian prediction is rudimentary.

(Compare that with Waymo, which has been doing this for a long time. Paper: [2112.12141] Multi-modal 3D Human Pose Estimation with 2D Weak Supervision in Autonomous Driving. Blog: Waypoint - The official Waymo blog: Utilizing key point and pose estimation for the task of autonomous driving)

When it comes to driving policy, many companies have been incorporating ML into their stacks long before Tesla. In fact, at AI Day 2, Tesla presented a network strikingly similar to Cruise's existing approach. Meanwhile, Waymo had already deployed a next-gen ML planner into their driverless fleet.

Of course, while Tesla was still toying with introducing ML into their planning system, Waymo was already using an ML planner and even releasing a next-gen version for their driverless fleet.

Simulation

As for simulation, Elon Musk initially dismissed its value. Back in 2019, he called it "grading your own homework", basically saying it was useless. This was when Waymo, Cruise and others were knee-deep in simulation and using it for basically every part of the stack.

In one of Andrej's tech talks, he even said that they weren't worried about simulation and were focused on their bread and butter (the real world).

In late 2018, The Information released a report saying that Tesla was in the infancy of simulation.

Fast forward to AI Day 2021, and he was singing a different tune, declaring that "all of this would be impossible without simulation." Instead of building their own simulation tech, Tesla opted to modify and use Epic Games' procedural generation system for UE5.

Conclusion

So, if we were to compare Waymo and Tesla in detail, it's clear that Tesla lags behind in several key areas: ML networks, compute power (Waymo's TPU4 vs. Tesla's GPU-based training), sensors (Waymo's higher quality cameras and sensor coverage), simulation tech, driving policy, and support for all dynamic driving tasks. However, this doesn't necessarily mean that Tesla is trailing overall; one could argue that they're still 10 years ahead.

But the fact of the matter is, these facts still exist and remain valid.

It's certainly tempting to lean into the data advantage argument, but I'd rather we examine the details and ask logical questions than rely on buzzwords and vague claims. We should consider how data truly affects the perception, prediction, and planning stacks of AV architectures and critically assess the extent to which data augmentation and simulation can compensate for any shortcomings.

- If billions of miles of real-world data are indeed essential, then why aren't the millions of tourists who visit San Francisco / Phoenix annually from all over the world endangered by Waymo vehicles? They are, after all, not part of the perception dataset.

- What about the countless tourists who drive into San Francisco / Phoenix and are not rear-ended by Waymo? Their presence, too, is absent from the perception dataset.

- Why doesn't Waymo mispredict pedestrians' actions and collide with them, or mispredict other vehicles' movements and sideswipe or crash head-on with them? Clearly, these tourist behaviors are not in the prediction dataset either.

These concerns pertain to the perception and prediction stack, but let's also examine the planning and driving policy stack. Within the roughly 200,000 miles of divided highways in the United States, how many miles are truly unique? How many miles are not well represented elsewhere?

From my perspective, a mere 1% of these highways are genuinely distinctive—for example, on-ramp interchanges, cloverleafs, short on/off ramps, close on/off ramps, on/off ramps requiring double lane merges, etc. However, what do you believe? Are 90%, 75%, 50%, or 25% of these highways unique?

This will help us figure out how much data is needed and how much can be covered through data augmentation & simulation at scale.

> Something Fun for interested

This is the Avatr 11 made by Changan, CATL and Huawei. Here's a blurb I found on its autonomy hardware in an article from May 2022:

"As for autonomous driving, the Avatr 11’s autonomous driving system comprises 3 LIDAR sensors, 6 millimeter-wave radars, 12 ultrasonic radars, and 13 cameras. The “brain” of this system is Huawei’s computing platform, capable of 400 TOPS."

I assume "TOPS" is "TFLOPS".

> This is the Avatr 11 made by Changan, CATL and Huawei. Here's a blurb I found on its autonomy hardware in an article from May 2022:
>
> "As for autonomous driving, the Avatr 11’s autonomous driving system comprises 3 LIDAR sensors, 6 millimeter-wave radars, 12 ultrasonic radars, and 13 cameras. The “brain” of this system is Huawei’s computing platform, capable of 400 TOPS."
>
> I assume "TOPS" is "TFLOPS".

TOPS usually refers to INT8 (integer and fixed-point operations), while TFLOPS refers to floating-point operations (typically FP32 or FP16).
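To make the TOPS vs TFLOPS distinction concrete, here is a rough sketch of the same dot product done once in floating point and once with INT8-quantized values. The weights, activations and scales are made-up illustration values, and `quantize_int8` / `int8_dot` are hypothetical helper names; this is not how any particular accelerator or Huawei's platform implements it.

```python
def quantize_int8(values, scale):
    """Map floats to integers in the int8 range [-128, 127], so value ~= q * scale."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q, a_scale, b_scale):
    """Dot product using integer multiply-accumulate, rescaled to a float at the end."""
    acc = sum(x * y for x, y in zip(a_q, b_q))  # integer ops: what "TOPS" counts
    return acc * a_scale * b_scale


# Hypothetical weights and activations, chosen only for illustration.
weights = [0.3, -0.25, 0.75]
activations = [1.0, 2.0, -1.0]
w_scale = 1.0 / 128  # assumed quantization step for the weights
a_scale = 1.0 / 32   # assumed quantization step for the activations

# Floating-point version: these multiply-adds are what "TFLOPS" counts.
float_result = sum(w * a for w, a in zip(weights, activations))

int8_result = int8_dot(
    quantize_int8(weights, w_scale),
    quantize_int8(activations, a_scale),
    w_scale,
    a_scale,
)

print(float_result, int8_result)
```

The two results differ slightly because 0.3 is not exactly representable at the chosen step size, which is the usual precision trade-off of integer inference.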

> Something Fun for interested

I should point out that this is ADS 1.0. It suffers from the issue I pointed out last year, which is getting stuck behind parked cars.

ADS 2.0 which is currently available on the AITO M5 high-end version solves that problem. It will be coming as an OTA to AVATR 11 this year.

EVNow

Well-Known Member

> This is the Avatr 11 made by Changan, CATL and Huawei. Here's a blurb I found on its autonomy hardware in an article from May 2022:
>
> "As for autonomous driving, the Avatr 11’s autonomous driving system comprises 3 LIDAR sensors, 6 millimeter-wave radars, 12 ultrasonic radars, and 13 cameras. The “brain” of this system is Huawei’s computing platform, capable of 400 TOPS."
>
> I assume "TOPS" is "TFLOPS".

What's the ODD, though?

BTW, how do these companies with LIDAR use it ... do they need "HD Maps" ?

Doggydogworld

Active Member

> Large Fleet / Data
>
> ....When it comes to neural networks, data acquired using radars or lidars typically yields superior results compared to camera data alone.

Tesla uses Lidar-equipped cars from time to time to generate training data with correct ground truth measurements. Needless to say, they don't have "billions of miles" of this data. Yet somehow they still find a use for it....

> Tesla uses Lidar-equipped cars from time to time to generate training data with correct ground truth measurements. Needless to say, they don't have "billions of miles" of this data. Yet somehow they still find a use for it....

I suspect that Tesla uses lidar data as ground truth for testing things like vision-only range estimation algorithms, not for NN training. NN training, AFAIK, needs to be done with the same sensor inputs that the target vehicles have.

powertoold

Active Member

Mobileye is currently where Tesla was 5 years ago, even worse because they don't have their full stack cars in the USA.

> Mobileye is currently where Tesla was 5 years ago, even worse because they don't have their full stack cars in the USA.

Could you elaborate a bit? What does having their full stack cars in the USA afford them?

Doggydogworld

Active Member

> I suspect that Tesla uses lidar data as ground truth for testing things like vision-only range estimation algorithms, not for NN training. NN training, AFAIK, needs to be done with the same sensor inputs that the target vehicles have.

Training uses inputs, which wouldn't include lidar, and the "correct answer". The training process involves repeatedly tweaking the weighting coefficients until the NN output best matches the correct answers. Correct answers for image recognition NNs typically come from humans. When Captcha asks you to click on all the pictures with buses, or the squares that include traffic lights, you are providing "correct answers" for Waymo to train against. Captcha does not ask you "how far away is the red car in the picture" or "how fast is it moving" because you'd get it wrong. Lidar gets it right, so you can use lidar data to train your distance and velocity estimation NNs. You can also use high-res radar data, or ideally both together.
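For anyone who wants to see the idea in miniature, here is a toy sketch of "camera-only input, lidar as the correct answer". It assumes a made-up pinhole-style relation between a car's apparent pixel height and its distance, uses synthetic data, and fits a single linear unit by gradient descent rather than a real NN. The constants and function names are all hypothetical and not anything Tesla or Waymo has published.

```python
import random

FOCAL_CONSTANT = 1200.0   # assumed geometry: pixel_height = FOCAL_CONSTANT / distance_m
MIN_PIXEL_HEIGHT = 15.0   # assumed apparent height at the farthest range (80 m)


def camera_feature(pixel_height):
    """Camera-only input: inverse apparent height, scaled to roughly [0, 1]."""
    return MIN_PIXEL_HEIGHT / pixel_height


def make_samples(n=200, seed=0):
    """Synthetic pairs of (camera feature, lidar-measured distance in metres)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        true_distance = rng.uniform(5.0, 80.0)
        pixel_height = FOCAL_CONSTANT / true_distance
        lidar_distance = true_distance + rng.gauss(0.0, 0.1)  # small lidar noise
        samples.append((camera_feature(pixel_height), lidar_distance))
    return samples


def train(samples, lr=0.5, epochs=1000):
    """Repeatedly tweak w and b so the output best matches the lidar answers (MSE)."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, target in samples:
            err = (w * x + b) - target
            grad_w += 2.0 * err * x / n
            grad_b += 2.0 * err / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict_distance(w, b, pixel_height):
    """Inference uses the camera feature only; no lidar is needed on the car."""
    return w * camera_feature(pixel_height) + b


w, b = train(make_samples())
# Under the assumed geometry, a 60 px tall car is 20 m away.
print(predict_distance(w, b, pixel_height=60.0))
```

The point of the sketch is only that lidar appears in the labels during training and nowhere in the inputs, so the trained model runs on a camera-only car.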

powertoold

Active Member

Could you elaborate a bit, what does having their full stack cars in the USA afford them?

Affords them the ability to collect data and develop their stack to drive in the USA.

I find it hard to believe that anyone who's worked in software and product development can look at Mobileye and not see the big disadvantages and, frankly, all the BS in their approach.

1) They don't collect raw images or video from their current fleet. If you think so, find the evidence from Mobileye. Also, do some deep thinking about it: do the Mobileye-equipped cars connect to WiFi and upload petabytes worth of data? If not, are they uploading petabytes using cellular? Who's paying for the cellular data? Even if the Mobileye-equipped cars DO upload raw images and video, it's not their full stack, so it's essentially worthless (they'd only get forward-facing images / video).

2) True Redundancy makes no sense

3) Their full stack car fleet is small and only based in China so far

4) We have no idea how limited their data collection is, because we don't know the exact details of their agreements with Chinese car mfgs. Did they work intimately with the car mfgs to develop, calibrate, and select all the sensors in the car, including the steering motors, accelerometers, camera sensor types / resolutions, camera heating elements, etc. etc.?

5) Do they have large training clusters? Can they even invest in one with only 1.3b in the bank?

> Affords them the ability to collect data and developing their stack to drive in the USA.

> I find it hard to believe that anyone who's worked in software and product development can look at Mobileye and not see the big disadvantages and, frankly, all the BS in their approach.

Maybe it's because I have not worked in software and product development, but there is nothing they are doing that is hard to believe. What's BS about what they are doing? They have been one of the largest suppliers of ADAS going back years; even AP1 used Mobileye tech.

> 1) They don't collect raw images or video from their current fleet. If you think so, find the evidence from Mobileye. Also, do some deep thinking about it: do the Mobileye-equipped cars connect to WiFi and upload petabytes worth of data? Even if the Mobileye-equipped cars DO upload raw images and video, it's not their full stack, so it's essentially worthless (they'd only get forward facing images / video).

Is that a strawman you are creating? I asked a simple question for you to elaborate what you meant.

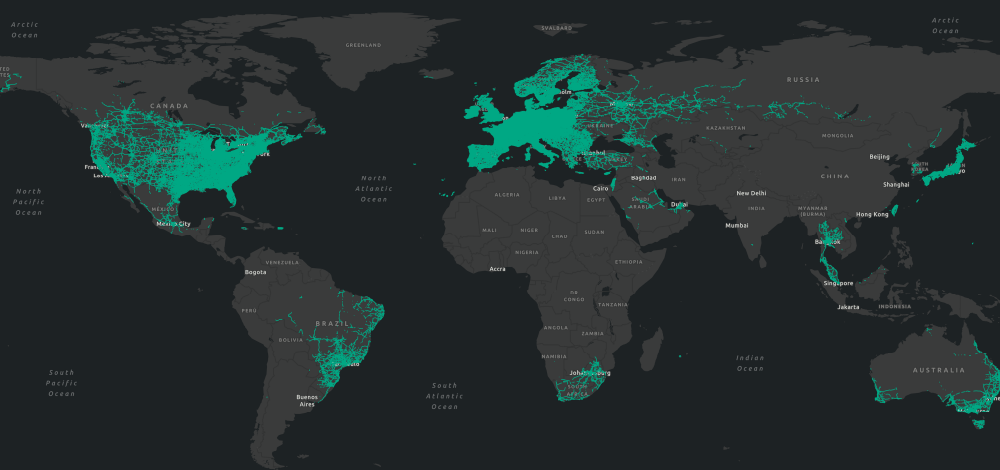

Mobileye’s Self-Driving Secret? 200PB of Data | Mobileye Blog

Powerful computer vision tech and natural language models turn industry’s leading dataset into AV training gold mine.

Mobileye’s database – believed to be the world’s largest automotive dataset – comprises more than 200 petabytes of driving footage, equivalent to 16 million 1-minute driving clips from 25 years of real-world driving. Those 200 petabytes are stored between Amazon Web Services (AWS) and on-premise systems. The sheer size of Mobileye’s dataset makes the company one of AWS’s largest customers by volume stored globally

> 2) True Redundancy makes no sense

Makes sense. They have 2 redundant systems that can operate independently of each other, which provides a greater MTBF because the two systems fail differently. What does not make sense about it?
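As a back-of-the-envelope illustration of that MTBF argument, the sketch below multiplies two per-hour failure rates under the assumption that the two subsystems fail independently. The 1-in-10,000-hours figure is a placeholder picked for the example, not a number from this thread, and the function names are hypothetical.

```python
def combined_failure_rate(rate_a, rate_b):
    """Per-hour probability that both subsystems fail in the same hour,
    assuming their failures are statistically independent."""
    return rate_a * rate_b


def mtbf_hours(failure_rate_per_hour):
    """Mean time between failures for a constant per-hour failure rate."""
    return 1.0 / failure_rate_per_hour


# Placeholder rates: each subsystem alone fails about once per 10,000 hours.
camera_rate = 1e-4
radar_lidar_rate = 1e-4

both = combined_failure_rate(camera_rate, radar_lidar_rate)
print(mtbf_hours(camera_rate), mtbf_hours(both))
```

The gain depends entirely on the independence assumption: if both subsystems tend to fail in the same situations, the combined rate moves back toward the single-system rate.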

> 3) Their full stack car fleet is small and only based in China so far

They collect data from just about every manufacturer that uses their tech. That is how they are able to create a map of entire countries. Their technology does not rely on collecting images from fleets.

> 4) We have no idea how limited their data collection is, because we don't know the exact details of their agreements with Chinese car mfgs

They don't collect images from fleets. Their marketing pitch is that they only need to collect kilobytes of data per mile or so.

> 5) Do they have large training clusters? Can they even invest in one with only 1.3b in the bank?

Something like this?

The compute engine relies on 500,000 peak CPU cores at the AWS cloud to crunch 50 million datasets monthly – the equivalent to 100 petabytes being processed every month related to 500,000 hours of driving.
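To put the quoted figures on a common scale, here is a quick arithmetic sketch using only the numbers from the two Mobileye quotes above (200 PB over 16 million 1-minute clips, and 100 PB per month for 500,000 hours of driving). It assumes decimal units (1 PB = 10^15 bytes), and the helper name `bytes_per_unit` is made up for the example.

```python
PB = 10**15  # decimal petabyte, an assumption about the units in the quotes
GB = 10**9


def bytes_per_unit(total_bytes, units):
    """Average bytes per unit (clip or hour), using exact integer division."""
    return total_bytes // units


# Figures taken from the quoted blog text.
per_clip = bytes_per_unit(200 * PB, 16_000_000)        # per 1-minute clip
per_hour_processed = bytes_per_unit(100 * PB, 500_000)  # per hour of driving

print(per_clip / GB, "GB per 1-minute clip")
print(per_hour_processed / GB, "GB per processed driving hour")
```

The two quotes imply different per-minute volumes, so they are probably counting different things (stored footage versus data processed per month); the sketch only restates the published figures and does not resolve that.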

You imagine Mobileye, an Intel company, one of the world's largest suppliers of processors for enterprise and data centers, does not have a training cluster?

EVNow

Well-Known Member

This is a shill video .... oh wait, PR videos are so much more trustworthy and independent than "shill" videos.
