Dojo will bring down the cost for Tesla, but it will not do anything that writing a big check to AWS would not do. It is Karpathy, his team, the data, and HW3 that will bring the improvements. Right now the bottleneck is validation of HW3-specific software. Once that is out, we should see a big improvement in performance. How big is just speculation at this point.

Dojo

- Thread starter Lasairfion

GrimRe

Member

It’s not just cost, dude. AWS currently use NVIDIA GPU acceleration for their deep learning instances. Whilst this is at an enormous scale, there is a fundamental use-case difference: NVIDIA have many different types of workload to think about. This means a chip design that doesn’t suit Tesla specifically. You can hear Andrej talk about this in his PyTorch talk, where he discusses the GPU and Level 1/2 cache not being suited to video frames.

So even if Tesla cut AWS a $500m cheque, it wouldn’t help them achieve FSD any faster. The idea is similar to the "sharpen the saw" philosophy: give Tesla a year to cut down a tree and they will spend 90% of it sharpening the saw.

GrimRe said:

So even if Tesla cut AWS a $500m cheque, it wouldn’t help them achieve FSD any faster.

Tesla has various needs: inference, training, etc. All of these can be run on GPUs. Some tasks will require a lot of memory, like the PyTorch example in the video. These can still be done on GPUs with less memory, but less efficiently, and thus at a higher cost.

Giving AWS a $500M check would decrease the time to achieve FSD, because it would allow developers to compile more frequently. Elon will do it better and get long-term benefits, but the check would still help.

GrimRe

Member

Respectfully, I disagree. Of course Tesla will use cloud providers like AWS for the vast majority of their IT workloads. However, Dojo is different.

Let’s assume the design goal of Dojo is roughly the same as that of the inference-optimised FSD chips: a 10x performance increase at a 40% price reduction.

Now let’s say you have a training job on AWS that takes 100 hrs to complete and costs $100,000. If you want AWS to perform at the same level as Dojo, the cost to train that model in 10 hrs would be $1m, versus $60k on Dojo. For the same $1m you could run almost 17 parallel Dojo training runs. At the scale Tesla is running, $500m would be burned very quickly on AWS with not much to show for it, whereas $500m worth of Dojo chips would result in a multi-year improvement in FSD readiness.
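The arithmetic in the post above can be checked with a short Python sketch. Every input is the post's own hypothetical figure (100 h, $100,000, a 10x speedup, a 40% price cut), and the function name `dojo_vs_aws` is made up for illustration. The key modeling assumption, also taken from the post rather than from any published pricing, is that making AWS finish 10x faster costs 10x as much.

```python
# Back-of-the-envelope Dojo vs. AWS comparison from the post above.
# All inputs are hypothetical figures from the post, not real pricing.


def dojo_vs_aws(aws_hours, aws_cost, speedup, price_reduction):
    """Return (dojo_hours, aws_cost_at_dojo_speed, dojo_cost, parallel_runs).

    Assumes, as the post does, that getting AWS to finish `speedup` times
    faster costs `speedup` times as much.
    """
    dojo_hours = aws_hours / speedup
    aws_cost_at_dojo_speed = aws_cost * speedup
    dojo_cost = aws_cost * (1 - price_reduction)
    # How many Dojo runs fit in the budget of one accelerated AWS run.
    parallel_runs = aws_cost_at_dojo_speed / dojo_cost
    return dojo_hours, aws_cost_at_dojo_speed, dojo_cost, parallel_runs


if __name__ == "__main__":
    hours, aws_fast, dojo, runs = dojo_vs_aws(
        aws_hours=100, aws_cost=100_000, speedup=10, price_reduction=0.40
    )
    print(f"Dojo runtime: {hours:.0f} h")
    print(f"AWS cost at Dojo speed: ${aws_fast:,.0f}")
    print(f"Dojo cost: ${dojo:,.0f}")
    print(f"Dojo runs for the same budget: {runs:.1f}")
```

With these inputs it reproduces the post's 10 h, $1m, $60k and "almost 17" (about 16.7) runs. The cost-scales-with-speedup assumption is the input to vary if you want to test the argument: with ideal linear scaling (10x the instances for a tenth of the time), the accelerated AWS run would cost roughly the original $100,000.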

Pilot7478

Member

I have just rewatched the Autonomy Day video and the PyTorch talk. It still completely blows me away what is ostensibly going on behind closed doors at Tesla with respect to FSD.

To me, the biggest irreconcilable point is the difference between what is being promised as "feature complete" FSD and the state of AutoPilot with FSD right now. I recently received the latest software update, and while it's fantastic, it's absolutely light years behind what I would consider Level 5. There is absolutely no city-level perception, and even when used as intended on highways it seems to have strange regressions every now and again. Features are slowly being added, like traffic cone recognition and, more recently, adjacent speed monitoring. If these "features" are being added piece by piece, it will be DECADES before we have feature-complete FSD. There will need to be an almost unbelievable step change in AutoPilot capability in the coming months for me to have any hope of the timelines being true.

Could Dojo be the missing ingredient here? Are Tesla banking on the fact that they will all of a sudden be able to fully realise the hidden learnings within their fleet's video? Is this what takes the current NN model from Level 2 to Level 4/5 within a matter of months?

I think that once HW3 became available to Tesla developers, they started using it exclusively for all new development.

Once all this HW3-only work is released, we should see a massive improvement in Autopilot.

What we see now (these few new features) is just a small part of what can be EASILY deployed in HW2+.

It just does not make sense for them to continue extensive development for HW2+.

And most of the new work just cannot be released to HW2+.

However, HW2+ still needs to be supported for existing functionality (freeway driving), but I guess that work is almost complete and will just be fine-tuned in future releases (this is what we see in 40.2.1: improvements to lane changes and NoA).

strangecosmos2

Koopa Troopa

I think that once HW3 became available to Tesla developers, they started using it exclusively for all new development.

I think some version of this must be true. Going back to October 2018, Karpathy was saying that his team had developed and trained new neural nets designed specifically for HW3 (the FSD Computer) that are too compute-hungry to run on HW2. He said: “we are currently at a place where we trained large neural networks that work very well, but we are not able to deploy them to the fleet due to computational constraints.” (full quote)

I don't think anyone has found definitive proof either that these new networks have been deployed to HW3 cars or that they haven't. Elon's tweets would suggest not yet. So my hunch is that the “feature complete” FSD update will include the new nets. (Or, if there are multiple updates adding different city driving features over time, one of those updates will.)

P.S. My account and/or TMC itself appears to have been hacked/compromised. Please be aware there may be a security vulnerability in the site and that messages from this account are not guaranteed to be from me.

GrimRe

Member

I agree that Tesla are purposely holding back AutoPilot features because of HW2 hardware limitations. They aren’t ready to upgrade everyone who bought the FSD option to HW3, so they have to keep the feature set roughly equal on HW2 and HW3 for now.

I assume the rollout may look similar to that of Smart Summon:

Q4 2019

- Release candidate of City Navigate on AutoPilot, tuned for California, to a handful of Early Access Program members

Q1 2020

- Wider release of City Navigate on AutoPilot (CNoAP) to Early Access Program members, geo-fenced to certain road systems in North America (much like regular NoAP)

Q4 2020

- Initial release of CNoAP for all FSD owners in jurisdictions where approved and on road systems whitelisted by Tesla.

Q4 2022

- First Tesla RoboTaxi service launched in a partnered municipality in the U.S., limited to cars using the HW4 onboard computer due to its improved safety.

Q2 2024

- CNoAP now available in most markets on most road systems. RoboTaxi operating in most parts of North America.

DanCar

Active Member

Stanford University says that the amount of compute used for AI training, the kind of work Dojo does, has been doubling every 3.4 months:

https://hai.stanford.edu/sites/g/files/sbiybj10986/f/ai_index_2019_report.pdf

Stanford A.I. said:

- Prior to 2012 - AI results closely tracked Moore’s Law, with compute doubling every two years (Figure 3.14a).

- Post-2012 - compute has been doubling every 3.4 months (Figure 3.14b). Since 2012, this compute metric has grown by more than 300,000x (a 2-year doubling period would yield only a 7x increase).
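The two doubling rates quoted above can be compared with a small Python sketch. `growth_factor` and `months_to_grow` are hypothetical helper names; the only inputs taken from the report are the 3.4-month and two-year doubling periods and the 300,000x figure.

```python
import math


def growth_factor(months, doubling_months):
    """Total growth after `months` if compute doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


def months_to_grow(factor, doubling_months):
    """Months needed to grow by `factor` at a fixed doubling period."""
    return math.log2(factor) * doubling_months


if __name__ == "__main__":
    # How long a 300,000x increase takes at a 3.4-month doubling period.
    span = months_to_grow(300_000, 3.4)
    print(f"300,000x at 3.4-month doubling: {span:.0f} months")
    # Growth over the same span if compute only doubled every two years.
    print(f"2-year doubling over that span: {growth_factor(span, 24):.1f}x")
```

This gives about 62 months and roughly 6x. A 7x increase at a two-year doubling corresponds to about 67 months, so the quoted figures agree as rough numbers ("more than 300,000x") rather than exactly.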

ratsbew

Active Member

I'm hopeful that Tesla is taking all of the HW2.5 computers from cars that are being upgraded to HW3.0 and using them as "free" computing. Basically, take all of the HW2.5 boards and build a supercomputer from them.

DanCar

Active Member

@jimmy_d gives us an overview of Dojo, dated Nov 10, 2020. Check out the table of contents in the overview.

TIMESTAMPS:

0:00 - Intro

0:45 - Who is James Douma

1:30 - What is Dojo?

4:05 - How Tesla makes the system better

4:30 - Labeling data

5:15 - How to leverage labeling

6:00 - What is 3D structure through motion

8:55 - Dojo bringing down computational costs

10:20 - GPUs for Neural Nets

11:50 - Custom silicon for neural nets

12:20 - Dojo’s current progress

13:50 - What is labeling

18:55 - Training neural nets

21:24 - How neural nets work with human labelers

23:30 - Supervised training

24:00 - Misperception of what Tesla is doing

25:20 - What data the fleet sends to Tesla

29:50 - Embedding

34:00 - Compounding improvement to system

35:20 - Detecting edge cases

36:25 - Driver interventions

41:35 - What can be sent to cars without a big firmware update?

44:05 - Perception engine for FSD vs planning system

DanCar

Active Member

10K Tesla-designed computer chips ordered for Dojo:

money.udn.com

That's the same number as reported for Nvidia's neural net accelerators, which are commonly referred to as GPUs, though that's a misnomer.

www.techradar.com

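Tesla Pushes Ahead with "Dojo" Supercomputer Plans, Will Expand Orders with TSMC | Industry Hot Topics | Industry | Economic Daily News

Tesla is pushing ahead with its "Dojo" supercomputer plans and is reported to be expanding its cooperation with TSMC. Its supercomputer chip "D1" uses TSMC's 7nm-family process combined with advanced packaging...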

Nvidia is powering a mega Tesla supercomputer powered by 10,000 H100 GPUs

Elon Musk hopes to crack fully autonomous vehicles with an incredibly powerful supercomputer

DanCar

Active Member

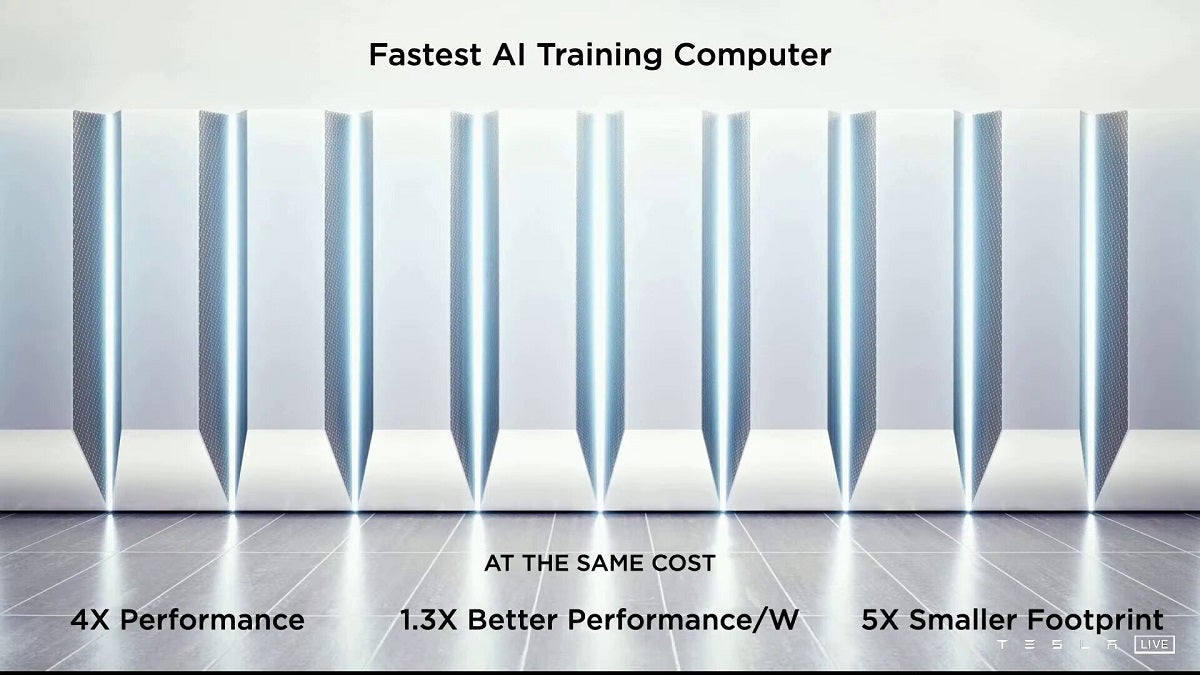

Tesla to Invest $1B in Dojo Supercomputer; 100 Exaflops by October ‘24

To achieve level 5 autonomy, Tesla needs sufficient computing power. The company is investing $1 billion in the Dojo supercomputer, which will begin production soon. The supercomputer will provide fast training of neural networks, which means faster achievement of full driving autonomy.

$1B / 10K = $100K for each Tesla Dojo chip.

If the $1B is for both the Nvidia and Tesla Dojo chips, then it is $1B / 20K = $50K for each chip and its supporting hardware.

According to the article below, an H100 costs $30K or more:

Google's new A3 GPU supercomputer with Nvidia H100 GPUs will be generally available next month | TechCrunch

Despite their $30,000+ price, Nvidia's H100 GPUs are a hot commodity -- to the point where they are typically back-ordered. Earlier this year, Google
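The per-chip division in the post can be written out as below. The $1B budget, the 10K-chip order size and the roughly $30K H100 price are the figures quoted in this thread; treating the whole budget as chips plus supporting hardware is the post's simplifying assumption, and `budget_per_chip` is a hypothetical helper name. The last line is only an illustration of how much of each slot's budget would remain beyond an H100's price.

```python
def budget_per_chip(budget, chip_count):
    """Budget available per chip, including its share of supporting hardware."""
    return budget / chip_count


if __name__ == "__main__":
    BUDGET = 1_000_000_000  # $1B Dojo investment (from the quoted article)
    H100_PRICE = 30_000     # approximate H100 price (from the quoted article)

    # Scenario 1: the whole $1B covers 10K Tesla-designed Dojo chips.
    dojo_only = budget_per_chip(BUDGET, 10_000)
    # Scenario 2: the $1B covers 10K Dojo chips plus 10K Nvidia H100s.
    combined = budget_per_chip(BUDGET, 20_000)

    print(f"Dojo chips only: ${dojo_only:,.0f} per chip")
    print(f"Dojo + H100: ${combined:,.0f} per chip")
    # Illustrative remainder per slot once a ~$30K H100 is paid for.
    print(f"Left over per H100 slot: ${combined - H100_PRICE:,.0f}")
```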

DanCar

Active Member

The latest version of Dojo is in the works:

electrek.co

Tesla's next-gen Dojo AI training tile is in production

Tesla’s next-gen Dojo AI training tile is in production, according to supplier Taiwan Semiconductor Manufacturing Company Limited (TSMC). Tesla has...
