Is there any information about this anywhere? I started wondering when I had to charge to 100% a dozen times or so on road trips and noticed that the first 10-20% of the battery drops much faster than the Wh/mi measurement suggests, then levels off. This significantly affects the initial estimated range: an estimate that I'll reach the next Supercharger with 25% changes to 6% in the span of about 15 minutes. I brought it up with my SC, whose diagnostics indicated my battery is no different from most. Anyone else have a similar experience?

How is the dashboard battery % calculated?

- Thread starter BuildingCap

The trip planner in the car assumes energy consumption based on a hard number and of course terrain. It does not remember and account for weather or your driving style or head wind or anything that can affect energy consumption. When you start a trip the prediction in the trip graph is based on whatever Tesla has programmed in as 'normal' energy consumption. In many cases that's too optimistic. When I'm on road trips, I almost always use more energy than the trip graph predicts.

Once you are driving, the car measures the actual energy consumption and compares it to what it predicted and you see the lines in the graph drift apart. Since the car measures energy taken out of the battery, everything is automatically included. Headwind, speed, temperature. Anything that affects range is automatically considered. After 10 miles or so, you get a good estimate what your real energy consumption will be.

Why does Tesla not consider your driving style and use that as a prediction? There are many reasons, but consistency is probably the most important one. Since the car cannot see road conditions, wind or other things that are ahead of you, it makes little sense to use for example the last 100 miles as a reference. It would not make the prediction more accurate. But since the car compares actual and predicted consumption, you basically have both options available.

This doesn't mean there is no room for improvement. Some online trip planners get traffic info, get weather data from the route and allow you to enter your desired speed. This leads to much more accurate predictions. For example I used EVTripPlanner.com and compared it to my actual drive. It was accurate almost to the minute! It would also be nice if the trip planner would give you two calculations: one based on the standard numbers and one based on the last trip/leg you drove. Elon tweeted a vastly better navigation system is coming. There is hope.

I too wondered what the percentage indicator actually means. I have a 2017 75S and have only had this winter to compare the Wh/mile figure against the % used. I find my % indicator depletes quicker than my consumption shows, regardless of SoC. It's off by about 5 to 10%.

This means after doing 64 miles at 280 Wh/mile I should have used 17.9 kWh, however in that time I've lost 28% of charge. At this rate it would mean I have a battery of only 64 kWh. I know, or at least I hope, that the usable battery on this model is about 72.5 kWh, which would mean that the Wh/mile measure is lying to me. The car is almost new with just over 2k miles on it, and charging tells me I can indeed add almost 73 kWh of energy into it, so no battery degradation. After learning that, I'm now adding 10% to my usage to know actual consumption during driving.
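For anyone who wants to redo this arithmetic with their own numbers, here is a minimal sketch in Python. The inputs are the figures quoted above; the variable names are made up for illustration, and the 72.5 kWh usable capacity is the poster's own assumption, not a confirmed spec.

```python
# Figures quoted in the post above.
miles = 64
wh_per_mile = 280
soc_drop = 0.28             # 28% of charge used on the dash
assumed_usable_kwh = 72.5   # poster's assumed usable capacity

# Energy the trip meter says was used.
energy_used_kwh = miles * wh_per_mile / 1000

# Capacity implied if that energy really equals 28% of the pack.
implied_capacity_kwh = energy_used_kwh / soc_drop

# SoC drop you would expect if the pack really held 72.5 kWh usable.
expected_drop = energy_used_kwh / assumed_usable_kwh

print(f"energy used:       {energy_used_kwh:.1f} kWh")
print(f"implied capacity:  {implied_capacity_kwh:.0f} kWh")
print(f"expected SoC drop: {expected_drop:.1%}")
```

The gap between the expected drop (a bit under 25%) and the observed 28% is the discrepancy being discussed in this thread.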

dgatwood

Active Member

It's not just you. I've concluded that some of the estimates must be extremely crude. On my roughly three-week-old Model X (of which I've been home only a week and a half), the battery estimate on the dashboard drops by about six miles within the first half mile of driving. Obviously, it doesn't continue to fall at that rate, or I'd barely be able to make it to work and back on a full charge.

And the charging time estimates (at least at my house) aren't much better. Yesterday, I had to get something out of my car while it was charging, and I watched on the dashboard as the charge time estimate crept up from 22 hours to 23, then 24 just because of the power drain from having the door open with the overhead lights on. The car had been charging for about 15 minutes at the time, and my mental math said it should have taken about five hours to charge, which it did. I have no idea where it got a number in the twenty-odd-hour range. And sometimes when it says that it is charging at 12 amps, it says it is adding 2 miles per hour, and sometimes it says it is adding 3 miles per hour. I'm certainly not an expert in modern battery chemistry, but how can the number of miles you get from a fixed charge current possibly vary by even low double-digit percent, much less 50%?

Basically, my crude in-my-head guesses, even as a brand-new, first-time EV owner, seem to be better than the car's estimates every time. I'm not sure what the heck kind of new-age math the car is doing, but I'm starting to wonder if it involves machine learning and a number line....

But in all seriousness, I think that when you check the voltage of a battery right after charging, it lies to you a bit, because the charge hasn't fully distributed through the entire cell/pack, so you get an artificially high value upon initial measurement that quickly dissipates as soon as you put the pack under actual load. This also means that when you tell your car to stop charging at 265 miles, you're probably stopping several miles short of that, and the shortfall likely depends on charge speed, so superchargers would give you the most loss right off the bat. Or at least that's my best guess about what's happening here.

The funny thing is that in my previous EV, an Ioniq Electric, the range and charge estimates were an exact science. No guesses, and you could actually rely on the readings to the last mile. Tesla really has not figured out how to do these well yet, and the GOM (guess-o-meter) is as bad as in the first-gen Leaf.

That said, when you start driving, the car consumes a lot initially due to various reasons. This is probably what you see in the miles that drop fast. Kind of normal thing, but somehow the Ioniq managed to make even these cold start readings work.

aesculus

Still Trying to Figure This All Out

The OP asked about the display of the battery on the dash, but many of the responses talked about the navigation range. As I understand it, the dash battery solely displays the state of the battery (voltage?), either as a percent or, if you have selected a range display, as the estimated range using the static EPA efficiency ratio against the SoC %. The navigation display of range is based on recent history (last 30 miles?), so it's very dynamic. And as was pointed out, it is really a poor predictor of expected range unless the rest of the trip (speed, temperature, wind, driving style, precipitation, road conditions) is going to be the same as your last 30 miles.
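A rough sketch of the difference between the two displays as described above, in Python. This is not Tesla's actual code: the function name, the 72.5 kWh usable capacity, and both Wh/mile figures are assumptions picked for illustration.

```python
def range_miles(soc, usable_kwh, wh_per_mile):
    """Range from state of charge (0..1), usable energy, and an efficiency figure."""
    return soc * usable_kwh * 1000 / wh_per_mile

USABLE_KWH = 72.5         # assumed usable capacity
RATED_WH_PER_MILE = 290   # assumed static "rated" efficiency
RECENT_WH_PER_MILE = 350  # assumed average over the recent miles driven

soc = 0.80

# Dash-style figure: static efficiency, so it only moves with SoC.
dash_range = range_miles(soc, USABLE_KWH, RATED_WH_PER_MILE)

# Projected figure: same SoC, but scaled by recent consumption.
projected_range = range_miles(soc, USABLE_KWH, RECENT_WH_PER_MILE)

print(f"dash range:      {dash_range:.0f} mi")
print(f"projected range: {projected_range:.0f} mi")
```

With the same SoC, the two numbers differ only because of the efficiency figure plugged in, which is why the projected one swings around with recent driving while the dash one does not.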

So what would cause the battery to display a number that does not match up to the figures calculated from the average or total energy consumption? Good question, and I have seen this before and been puzzled by it. You can do the math after a few trips and test out what it should display based on the starting SoC and the car's display of both total energy consumed and efficiency along with distance. Sometimes it does not add up. Note you have to guess what the usable battery energy is; you can't use the total because some of the energy is unavailable. For example, a 90 kWh battery may have less than 87 kWh of usable energy, and it could be worse if your battery has degraded.

In my experience it has been in situations where it's cold outside and/or I have started the trip with a cold-soaked battery. I am currently leaning toward the idea that the car's energy consumption displays do not include battery heating energy, but the battery display on the dash does because it's showing the state of the battery. The jury is still out on this one for me though. It's really hard to tell because there is so much going on wrt energy consumption and the documentation is sparse.

And the charging time estimates (at least at my house) aren't much better. Yesterday, I had to get something out of my car while it was charging, and I watched on the dashboard as the charge time estimate crept up from 22 hours to 23, then 24 just because of the power drain from having the door open with the overhead lights on. The car had been charging for about 15 minutes at the time, and my mental math said it should have taken about five hours to charge, which it did. I have no idea where it got a number in the twenty-odd-hour range.

When you get in the car, the cabin heater or AC turns on and uses power, which means there is less available to charge the battery. The numbers (volts/amps) do not change because they are measured on the incoming side. But a good part of that power goes to the HVAC, which slows down the charging process. It's not the headlights or interior lights. They use very little.

But in all seriousness, I think that when you check the voltage of a battery right after charging, it lies to you a bit, because the charge hasn't fully distributed through the entire cell/pack, so you get an artificially high value upon initial measurement that quickly dissipates as soon as you put the pack under actual load. This also means that when you tell your car to stop charging at 265 miles, you're probably stopping several miles short of that, and the shortfall likely depends on charge speed, so superchargers would give you the most loss right off the bat. Or at least that's my best guess about what's happening here.

Um that's not how electricity works. It doesn't need time to distribute through the pack

Again, the voltage you see when charging at home is from the grid going into the chargers. It is not the battery voltage. At a Supercharger, where it's direct current, the voltage is the same as the battery.

dgatwood

Active Member

Um that's not how electricity works. It doesn't need time to distribute through the pack

Batteries store energy chemically, and when charging or discharging, reaction byproducts build up near the ends of the battery. It takes time for the chemistry to level out within each cell.

Why do batteries seem to go dead and then come back to life if you let them rest?

As a result, batteries typically produce a higher voltage immediately after charging — an effect that typically dissipates after a short period of time. I'm certain of that.

I'm not 100% certain about whether there's any significant charge propagation between series-charged batteries within a pack after charging completes. I think I remember reading that at some point long ago, but I can't find any references to it, so I will concede that I might be remembering wrong.

Again, the voltage you see when charging at home is from the grid going into the chargers. It is not the battery voltage. At a Supercharger, where it's direct current, the voltage is the same as the battery.

I'm not quite sure what you're trying to say here, but if the charge voltage were the same as the battery's voltage, the battery would not charge (at all), because there would be no difference in potential, so current would not flow in either direction. Charge voltage must be higher than the pack voltage, by definition.

Yes, if the charger is considered as a voltage source (zero internal resistance), the voltage must be larger than the battery's open-circuit voltage in order to charge.

However, the battery pack has internal resistance, and in the case of Tesla, where they use 18650 cells, the internal resistance is relatively large.

Let's assume the pack open-circuit voltage is 350 V, the internal resistance is 0.1 Ohm, and the charger voltage is 360 V. Then according to Ohm's law, the charge current will be (360-350)/0.1 = 100 A.

When charging, if we ignore the wire/connector resistance, the voltage at the battery terminals is 360 V, the same as the charger voltage, because they are connected together. Therefore, @David99 was not wrong in this context.
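The example numbers above can be checked with a few lines of Python. This is just the simple voltage-source-plus-internal-resistance model from the post, with wire and connector resistance ignored; the function name is made up for illustration.

```python
def charge_current_a(charger_v, pack_open_v, internal_r_ohm):
    """Ohm's law: current driven by the voltage difference across the internal resistance."""
    return (charger_v - pack_open_v) / internal_r_ohm

CHARGER_V = 360.0      # charger output voltage from the example
PACK_OPEN_V = 350.0    # pack open-circuit voltage from the example
INTERNAL_R_OHM = 0.1   # pack internal resistance from the example

current_a = charge_current_a(CHARGER_V, PACK_OPEN_V, INTERNAL_R_OHM)

# Terminal voltage while charging = open-circuit voltage + drop across internal resistance.
terminal_v = PACK_OPEN_V + current_a * INTERNAL_R_OHM

print(f"charge current:   {current_a:.0f} A")
print(f"terminal voltage: {terminal_v:.0f} V")
```

In this model the terminal voltage comes out equal to the charger voltage, which is the point being made: the terminals sit at the charger voltage while the open-circuit voltage is lower.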

In practice when supercharging, the charging voltage is slightly higher than the pack voltage because of the resistance of wires and connectors. Also, the average cell voltage times the number of cells in series (96 in the case of the 85, 90, and 100 kWh packs) is less than the pack voltage because of internal wire resistance and contactors in the pack.

To answer the OP's question, there is a Tesla patent called 'Battery capacity estimating method and apparatus': US8004243B2

In it they calculate the SOC by a combination of current integration (coulomb counting) and voltage monitoring.

Ultimately, the SOC is determined by the open-circuit voltage of the battery after a long rest (plus some corrections for temperature effects, an error curve, etc.)

However, the SOC calculated by this approach is capacity based (SOC-Ah), not energy based (SOC-Wh). I also checked about a dozen other SOC calculation patents and they are all capacity based.

How on earth does Tesla derive SOC-Wh from SOC-Ah? I guess they have a conversion lookup table plus interpolation.
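To make that guess concrete, here is a minimal Python sketch of current integration followed by a table lookup with linear interpolation. It is purely illustrative and not taken from the patent or from Tesla: the function names, the 200 Ah capacity, and every value in the table are hypothetical, and the open-circuit-voltage correction after rest is left out.

```python
from bisect import bisect_right

def coulomb_count(soc_ah, current_a, dt_s, capacity_ah):
    """Update capacity-based SOC by integrating current (positive = discharge)."""
    return soc_ah - (current_a * dt_s / 3600.0) / capacity_ah

# Hypothetical conversion table: capacity-based SOC -> energy-based SOC.
# Mid-range energy values sit slightly below the capacity values because
# the amp-hours near the top of the pack are delivered at a higher voltage.
SOC_AH_POINTS = [0.0, 0.25, 0.50, 0.75, 1.0]
SOC_WH_POINTS = [0.0, 0.23, 0.48, 0.74, 1.0]

def soc_wh_from_soc_ah(soc_ah):
    """Linear interpolation in the (hypothetical) conversion table."""
    soc_ah = min(max(soc_ah, 0.0), 1.0)  # clamp to the table range
    i = min(bisect_right(SOC_AH_POINTS, soc_ah), len(SOC_AH_POINTS) - 1)
    x0, x1 = SOC_AH_POINTS[i - 1], SOC_AH_POINTS[i]
    y0, y1 = SOC_WH_POINTS[i - 1], SOC_WH_POINTS[i]
    return y0 + (y1 - y0) * (soc_ah - x0) / (x1 - x0)

# Example: start at 90% (Ah basis), draw 100 A for 6 minutes from a 200 Ah pack.
soc_ah = coulomb_count(0.90, current_a=100.0, dt_s=360.0, capacity_ah=200.0)
soc_wh = soc_wh_from_soc_ah(soc_ah)

print(f"SOC-Ah: {soc_ah:.3f}")
print(f"SOC-Wh: {soc_wh:.3f}")
```

A real system would also have to correct the integrated value for sensor drift, which is where the rested open-circuit voltage described above would come in.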

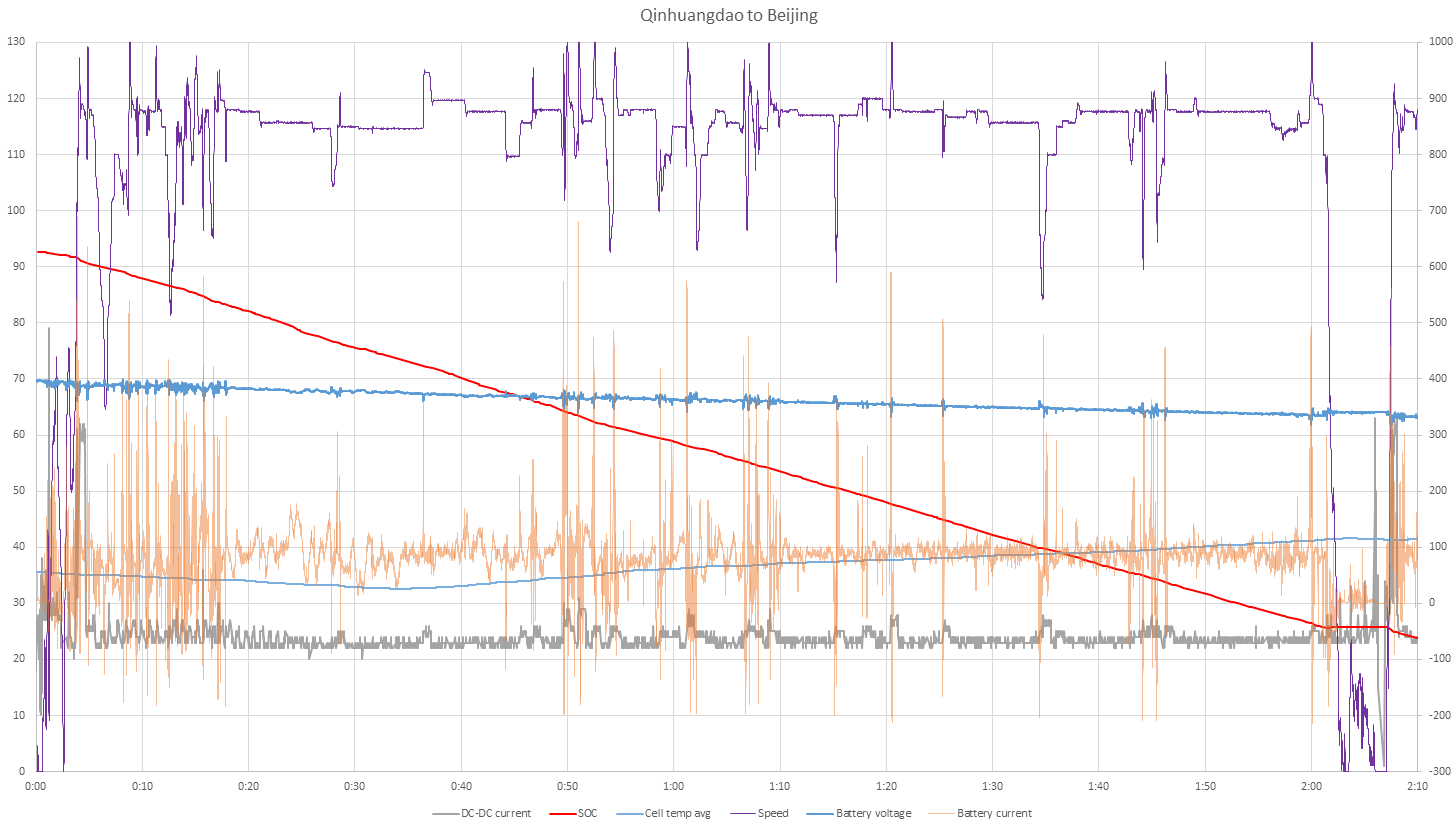

I record most of my long trips with Scan My Tesla, and I do not see the phenomenon the OP describes (a faster drop of SOC at the beginning). Here is a typical one, from a drive from Qinhuangdao to Beijing, where the SOC drops quite linearly.

(Battery voltage and battery current are on the right axis; the others are on the left.)
