Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.


Tesla is dumping Mobileye???

- Thread starter jkliu47


- Tags

- Autopilot mobileye Tesla Inc.

electracity

Active Member

I don't see why the car's vision system has to produce 3D information. The radar unit is extremely good at depth perception.

The problem with radar is probably resolution. Is that a short fat person about to step into the street, or a mailbox?

Tam

Well-Known Member

...But a $80,000 car...

Hopefully, Tesla will have more affordable cars, even cheaper than Model ≡ so that trading in for more whistles and bells won't be so bad on the family budget.

Tam

Well-Known Member

I don't see why the car's vision system has to produce 3D information. The radar unit is extremely good at depth perception.

I used to romanticize that a Radar would draw me a nice picture of a car just the same way as weather channel gives me a beautiful picture of radar based cloud/rain/snow image or nice icons of airplanes with their IDs at air traffic controller console...

That was until Elon tweeted:

"Radar tunes out what looks like an overhead road sign to avoid false braking events"

That's when I looked around and realized that it recognizes a car by a pre-fed car signature, a complicated up-and-down waveform graph:

Radar Signatures of a Passenger Car

Tam

Well-Known Member

To clarify, I thought Radar images should be like LIDAR before Elon's tweet. Since then, this is what I now understand:

LIDAR can map out a whole traffic block full of images and it is taught what those images mean such as the gesture of a bicyclist for a left turn signal below:

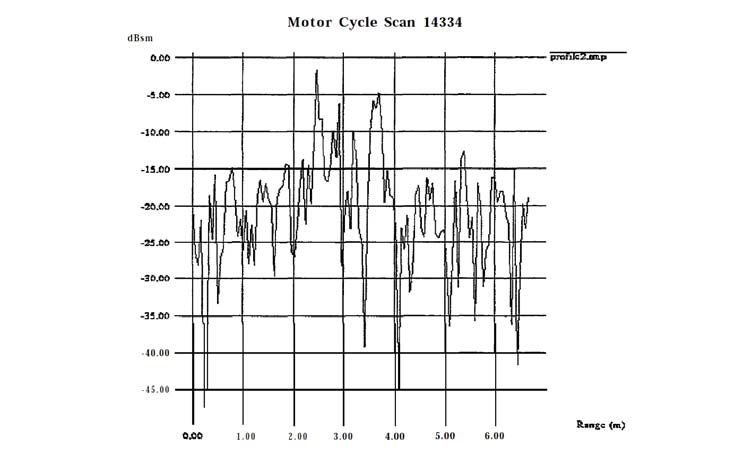

However, automotive radar does not seem to create an image or a shape. It can create a "signature", so it could learn that the signature below is a motorcycle, but it has no idea what a motorcycle looks like (its shape) or whether there's a hand signal or not:


As it happens I have only one functioning eye. Anybody thinking monocular vision can simulate multi sensor vision does not understand too well. I also possess an Airline Transport Pilot certificate. The examination to grant a SODA (statement of demonstrated ability) proved, to me anyway, that a large amount of ancillary information is required to compensate for the limitations implicit in monocular vision. I am confident that multiple sources will be cheaper and more reliable than will be monocular workarounds. Of course I am betting on my analogy...

Sorry to hear that. When growing up, one of my best friends had a benign tumour which had blinded him in one eye and also made him completely deaf on that side. He was also a keen sportsman, and one of the best cricketers I knew. When asked how he could tell where to hit a solid ball that was coming directly at him at very high speed, he put it down to "instinct" and "practice". I guess this is where the MobilEye IP comes into play, replacing "instinct" with algorithmic prediction, and using fleet learning for "practice".

I don't see why the car's vision system has to produce 3D information. The radar unit is extremely good at depth perception.

The radar used in Tesla cars has problems detecting stationary objects. It can easily detect objects that are moving relative to the background with good enough resolution, but not static objects.

The radar used in Tesla cars has problems detecting stationary objects. It can easily detect objects that are moving relative to the background with good enough resolution, but not static objects.

Does it have a problem detecting them or merely distinguishing cars stopped in your lane or that you will otherwise drive around, from cars stopped/parked on the side of the lane? See the thread where people complain that Tesla detects cars parked in front of a lane that merges and reduces power. Something is detecting those parked cars.

sillydriver

Member

I think the issue is that the whole world is sending back a mess of echoes from objects that the Doppler radar identifies as stationary, and the radar cannot reliably discriminate what these echoes are from. So the system pays attention to those few echoes that are not converging at the forward velocity of the car: those are the echoes of moving vehicles.
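To make that filtering idea concrete, here is a rough sketch in Python. It is not Tesla's or Mobileye's actual logic. The `RadarReturn` type, the `moving_targets` function, and the 1 m/s tolerance are all made up for illustration, and it assumes every reflector is straight ahead, so it ignores the angle of each echo.

```python
from dataclasses import dataclass


@dataclass
class RadarReturn:
    """One radar echo (hypothetical type, for illustration only)."""
    range_m: float         # distance to the reflector, in metres
    range_rate_mps: float  # Doppler range rate; negative means closing


def moving_targets(returns, ego_speed_mps, tolerance_mps=1.0):
    """Keep only echoes that are NOT closing at the car's own speed.

    A stationary object straight ahead closes at exactly the ego speed,
    so anything within the tolerance of that is treated as background.
    """
    moving = []
    for r in returns:
        closing_speed = -r.range_rate_mps
        if abs(closing_speed - ego_speed_mps) > tolerance_mps:
            moving.append(r)
    return moving


# Car travelling at 30 m/s. All numbers below are invented.
echoes = [
    RadarReturn(range_m=80.0, range_rate_mps=-30.0),   # overhead sign
    RadarReturn(range_m=40.0, range_rate_mps=-5.0),    # slower car ahead
    RadarReturn(range_m=120.0, range_rate_mps=-29.6),  # parked car
]
tracked = moving_targets(echoes, ego_speed_mps=30.0)
```

In this toy example only the slower car ahead survives the filter. The overhead sign and the parked car are both discarded, which is exactly the stationary-object problem being discussed: by Doppler alone they look the same.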

The camera is used, in some capacity, as a backup system for object identification to distinguish stationary cars from overhead road signs. However, the current-generation camera is monochrome (red channel only), so it only sees objects with enough contrast.

electracity

Active Member

The camera is used, in some capacity, as a backup system for object identification to distinguish stationary cars from overhead road signs. However, the current-generation camera is monochrome (red channel only), so it only sees objects with enough contrast.

Why would it be red channel? Wouldn't it just be unfiltered for color?

electracity

Active Member

I'll throw an idea into why Tesla is not partnering with mobileye: Tesla Bus will be an implementation of Google Car.

It seems impossible to me that Tesla could beta a limited urban bus by 2020. Occam's razor leads me to Google Car. Tesla Motors and Tesla Semi will continue down the current Tesla development path. Tesla Bus will implement Google Car.


Stoneymonster

Active Member

I'll throw an idea into why Tesla is not partnering with mobileye: Tesla Bus will be an implementation of Google Car.

It seems impossible to me that Tesla could beta a limited urban bus by 2020. Occam's razor leads me to Google Car. Tesla Motors and Tesla Semi will continue down the current Tesla development path. Tesla Bus will implement Google Car.

Four years is a long time in this business now. I disagree that it's impossible.

electracity

Active Member

Four years is a long time in this business now. I disagree that it's impossible.

Why announce a low speed minibus? Why not just a minibus? Tesla's focus is highway tech. If they can do low speed in a complicated environment, they can do high speed more easily.

A dual development path, each starting in a different place, is likely to produce the best outcome.

Musk simply could not announce a Google deal until AP 2.0 is out and the Model 3 is in production.

3Victoria

Active Member

@electracity this appears to be wild and unsubstantiated speculation on your part, i.e. FUD. Your other posts seem to be in the same vein.

Tam

Well-Known Member

I'll throw an idea into why Tesla is not partnering with mobileye: Tesla Bus will be an implementation of Google Car.

May I remind 2 big differences between Tesla and Google:

1) Tesla believes in incremental improvements even when it's not perfect which means additional deaths and crashes are expected during those incremental rollouts. It believes even during a period of imperfections, deaths and crashes are much more reduced although not eliminated.

Google believes that drivers are not to be trusted with an imperfect product so it purposely withholds partial implementations and will only introduce a complete product. It is willing to continue to expect a traditional staggering number of deaths and crashes because those are not currently caused by its products.

2) Tesla does not believe in an addition of LIDAR because it is not necessary. Google believes that you need all the best sensors that you can get, so LIDAR is a must.


electracity

Active Member

@electracity this appears to be wild and unsubstantiated speculation on your part, i.e. FUD. Your other posts seem to be in the same vein.

I'm not sure you understand the concept of FUD.

But I am sure you have no engineering or computer background.

3Victoria

Active Member

I'm not sure you understand the concept of FUD.

But I am sure you have no engineering or computer background.

Actually I have both.

Thank you.

Why would it be red channel? Wouldn't it just be unfiltered for color?

I've played briefly with image recognition algorithms (pre neural network), and the first thing you generally do is throw away the color information, compress the histogram, and generate contrast for edge detection. I have no idea if this is what Mobileye is doing, but by having a monochrome camera you reduce the color information to a single channel (essentially giving you a tinted grayscale image), which I think would give you a head start on that process. That's just a rough guess at what's happening.
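For anyone curious, that classic pre-neural-network pipeline can be sketched in plain Python roughly as follows. This is only an illustration and says nothing about what Mobileye really does. The function names are made up, a simple linear contrast stretch stands in for the histogram step, and a 3x3 Sobel filter is assumed for the edge detection.

```python
def to_gray(rgb_image):
    """Collapse each (r, g, b) pixel to one luminance value (Rec. 601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def stretch_contrast(gray):
    """Linearly rescale intensities so they span the full 0..255 range."""
    lo = min(min(row) for row in gray)
    hi = max(max(row) for row in gray)
    if hi == lo:  # flat image: nothing to stretch
        return [[0.0 for _ in row] for row in gray]
    scale = 255.0 / (hi - lo)
    return [[(v - lo) * scale for v in row] for row in gray]


def sobel_magnitude(gray):
    """Gradient magnitude from 3x3 Sobel kernels; border pixels stay 0."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = ((gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1])
                  - (gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]))
            gy = ((gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1])
                  - (gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]))
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


# Toy 4x6 image: dull dark region on the left, slightly brighter on the right.
dark, bright = (40, 40, 40), (90, 90, 90)
image = [[dark, dark, dark, bright, bright, bright] for _ in range(4)]

gray = to_gray(image)
stretched = stretch_contrast(gray)
edges = sobel_magnitude(stretched)
```

In the toy image the only nonzero edge responses are in the two interior columns that straddle the dark/bright boundary. A single-channel sensor would let you skip `to_gray` entirely, which is the head start mentioned above.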

electracity

Active Member

May I remind 2 big differences between Tesla and Google:

1) Tesla believes in incremental improvements even when it's not perfect which means additional deaths and crashes are expected during those incremental rollouts. It believes even during a period of imperfections, deaths and crashes are much more reduced although not eliminated.

Google believes that drivers are not to be trusted with an imperfect product so it purposely withholds partial implementations and will only introduce a complete product. It is willing to continue to expect a traditional staggering number of deaths and crashes because those are not currently caused by its products.

2) Tesla does not believe in an addition of LIDAR because it is not necessary. Google believes that you need all the best sensors that you can get, so LIDAR is a must.

"Tesla believes". Tesla had no choice. They started late, and leveraged Mobileye technology. It will not be Tesla's choice to determine how many people they get to kill testing autonomous driving. One reason to start on the slow speed end, like Google Car, is fewer customer decapitations.

It is not about LIDAR. It's a computer science problem.

Tesla might be doing the slow speed car without a major partner. But this situation would concern me. They have so much to do with the Model 3 and batteries.
