Hm... Sounds just like drug development. So this is standard practice all around?

Artificial Intelligence

- Thread starter Buckminster

Confession: I once saw a post that I was pretty sure was incorrect. I was too tired to answer myself, so I just asked ChatGPT to explain why it was incorrect. I pasted the response and got lots of upvotes because the answer was long, detailed, and well formatted. It did a better job of posting than I would have...

So anyway, saw this one:

RIP Internet, it was fun while it lasted and sorry for being part of the downfall...

Not quite. Drug development is based on university research, with the university licensing out a patent. But even from there, a tremendous amount of work is needed to push through clinical trials, and the company is a normal corporation from the beginning.

Elon doesn't know anything about AI himself, but his characterization of the sleaze that Altman pulled on OpenAI is correct. It was fraud. It was named OpenAI for a reason, but now is entirely closed. They have published nothing.

Somewhat ironically, it's the information hoarder Zuckerberg and Meta who have been the most authentically open about their ML developments and research. In significant part because of Yann LeCun, I believe.

For someone who doesn't know anything about AI, Elon has been very lucky with his decision making over the years: investing heavily in getting data, recruiting Karpathy, buying deepscale.ai, developing an alternative to GPUs as a backup in case they get too expensive, developing his own inference hardware, going heavily into robotics right before everyone else did, betting on vision, etc. It's almost as if he actually is pretty good at doing the physics-first analysis of AI. Too bad for Tesla that the CEOs of Toyota, VW, Ford, GM, BMW, etc. had much better foresight and could outclass Tesla in this domain.

And this is before quantum computers aid the hallucinations...

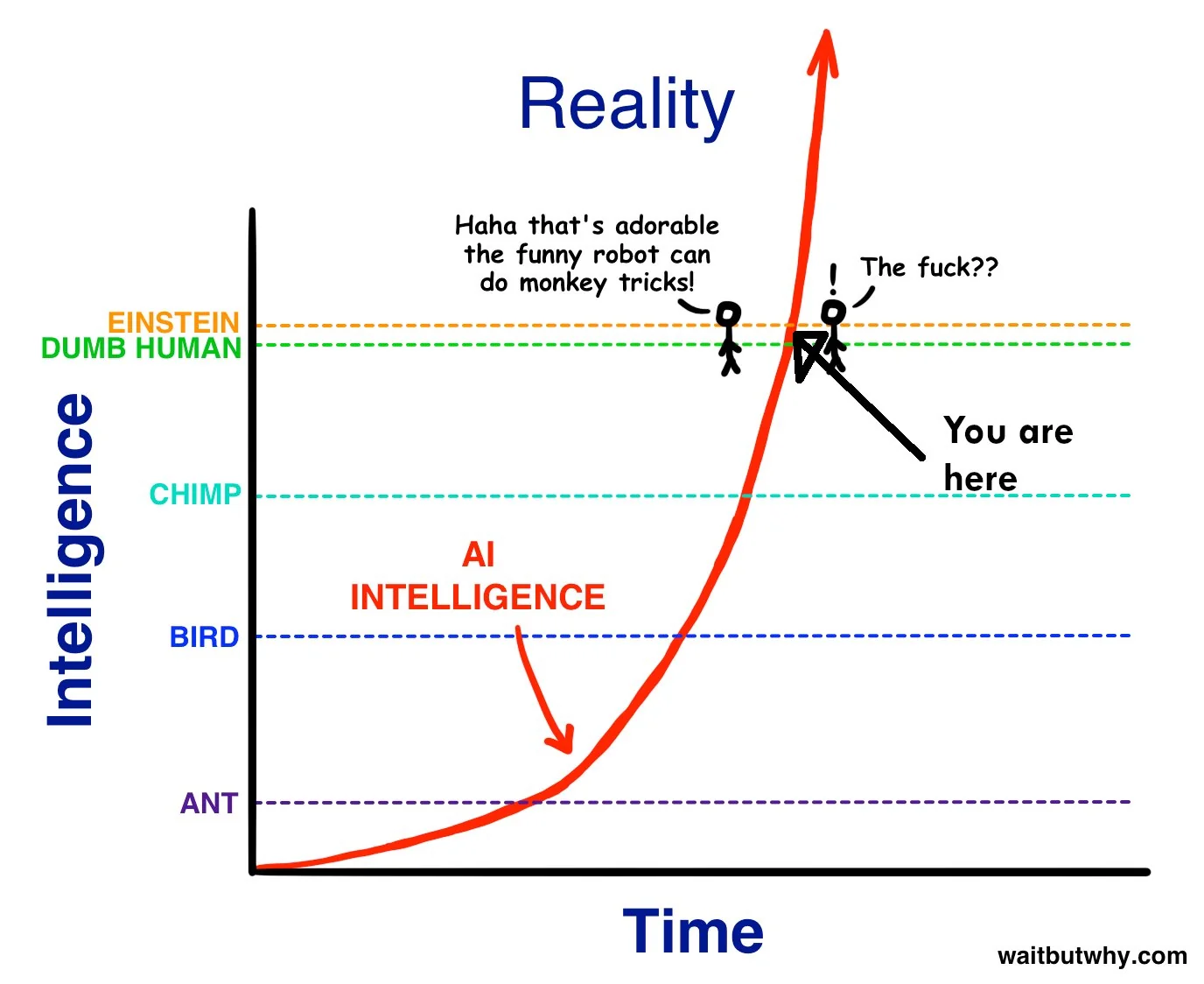

Imo AGI is quickly approaching Deep Blue levels at text-based questions. Sure, it might not be better than every human at everything today. But it has rapidly gotten better than 90% of humans at 90% of the tasks that we have tests for. And the limit is not getting to best-human level at everything; it will be more like Stockfish, which has an 800 Elo rating advantage over Magnus Carlsen, i.e. the difference between LLMs and Terence Tao/Ed Witten will be like the difference between Magnus Carlsen and me... In every task where a comparison is meaningful.
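For a rough sense of what an 800-point gap means, here is a minimal sketch assuming the standard logistic Elo model, where the expected score of the stronger side is 1 / (1 + 10^(-gap/400)). The 800 figure is the poster's; the function name `elo_expected_score` is just a label for this illustration.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) for the higher-rated
    side under the standard logistic Elo model."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# Print the expected score for a few illustrative rating gaps.
for gap in (0, 200, 400, 800):
    print(f"{gap:>4} Elo gap -> expected score {elo_expected_score(gap):.3f}")
```

Under that model an 800-point gap works out to an expected score of about 0.99 for the stronger side, so the weaker side would be expected to collect roughly one point per hundred games.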

As we get closer to building AI, it will make sense to start being less open. The Open in openAI means that everyone should benefit from the fruits of AI after its built, but it's totally OK to not share the science (even though sharing everything is definitely the right strategy in the short and possibly medium term for recruitment purposes).

OpenAI and Elon Musk

We are dedicated to the OpenAI mission and have pursued it every step of the way.

openai.com

A usual human working environment problem is something like "well what do you think we should work on next?"

Ed Witten and Terry Tao have useful and interesting new research ideas, short and long term. An AI that answers textbook-like questions has no idea about that so far. They might find assistants that can usefully scan and summarize the research literature helpful.

Claude has been gaining 20 IQ points with each version:

How fatalistic should we be on AI?

The godfather of artificial intelligence has issued a stark warning about the technology

www.ft.com

“If I were advising governments, I would say that there’s a 10 per cent chance these things will wipe out humanity in the next 20 years. I think that would be a reasonable number,” he says.

12 Questions for Sam Altman:

- Why did you argue that building AGI fast is safer because it will take off slowly since there's still not too much compute around (the overhang argument), but then ask for $7T for compute?

- Why didn't you tell Congress your worst fear?

- Why did you tell Musk that the leader of OpenAI probably shouldn't be on its board, yet you just had yourself re-appointed to it?

- Why did you also tell him that OpenAI employees would not be compensated in OpenAI equity, when in fact they are now given hundreds of thousands of dollars in it every year?

- Why were you fired from YCombinator?

- Why, when you were fired from YCombinator, did you publish a blog post on their website announcing yourself as chairman without any authorization or agreement to do so?

- Why are you, in the form of OpenAI, clearly breaking GDPR data protection laws, as the Italian regulator has determined, abusing user data and likely in breach of similar laws in other jurisdictions?

- How much of an increase in unemployment do you expect AI to cause?

- What is the future of meaning? How do you see people finding meaning in their lives in the future you intend to bring into being?

- Do you think you should have as much power over the future as you do?

- Will you support calls for an internationally upheld pause on the advancement of AI capabilities and a grand-scale focused effort on AI safety research, via an international treaty? And if not, why not?

- Where is Ilya?
