A good post, but this is only 25% of the whole deal.

They also have a fail-operational board that has 3x EyeQ5H and acts as a backup if the primary system fails. The fail-operational board also handles perception, mapping and planning independently. All together you get 9x EyeQ5; in the future it will be 3x EyeQ6.

medium.com

Think of it as two independent ways to detect pedestrians and vehicles.

The way I put it is that you have different sensor modalities (camera, lidar, radar) that have different pros and cons, and they fail for different reasons. So it's not like you have two camera systems with correlated failures. Radar can see through rain, snow, fog and dust; cameras can't. Lidar can see in direct sunlight and in low-light conditions, including pitch darkness; cameras struggle with both. I could keep going.

If you have two systems with uncorrelated failures, you get two benefits. First, the amount of data required to validate the perception system is massively lower. Second, if one of the independent systems fails, the vehicle can continue operating safely, in contrast to a low-level fused system that needs to cease driving immediately.
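The validation-data point can be made concrete with the standard zero-failure reliability demonstration bound: under a constant-failure-rate (exponential) model, showing an MTBF of M hours at 95% confidence with no observed failures takes about M × ln(20) ≈ 3M failure-free hours. The sketch below compares validating two independent subsystems to the 10,000-hour figure used in this post against validating one system directly to the 100-million-hour combined figure. The exponential model, the 95% confidence level and the function name are assumptions made for illustration, not anything Mobileye has published, and the saving only holds to the extent that the independence assumption itself holds.

```python
import math


def hours_to_demonstrate(mtbf_target_hours: float, confidence: float = 0.95) -> float:
    """Failure-free test hours needed to claim MTBF >= mtbf_target_hours.

    Assumes a constant failure rate (exponential model) and that zero
    failures are observed during the whole test.
    """
    # P(zero failures in T hours) = exp(-T / MTBF). Requiring this to be at
    # most (1 - confidence) gives T = MTBF * ln(1 / (1 - confidence)).
    return mtbf_target_hours * math.log(1.0 / (1.0 - confidence))


p_fail_per_hour = 1 / 10_000  # per-subsystem figure used in the post
# Fully independent subsystems: joint per-hour failure probability is p * p.
combined_mtbf = 1 / (p_fail_per_hour * p_fail_per_hour)  # ~100 million hours

# Option A: validate each independent subsystem on its own MTBF,
# then rely on independence for the combined figure.
separate = 2 * hours_to_demonstrate(1 / p_fail_per_hour)
# Option B: validate a single (e.g. low-level fused) system directly.
monolithic = hours_to_demonstrate(combined_mtbf)

print(f"validate two independent subsystems: {separate:,.0f} h")
print(f"validate one system to the combined MTBF: {monolithic:,.0f} h")
```

Under these assumptions the first option needs roughly 60,000 hours and the second roughly 300 million hours, which is the sense in which independent channels make validation "massively" cheaper.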

In essence, if you have two completely independent ways to sense a person, you assume they won't fail in a correlated way. If either sensor system sees a person, you act as if a person is there. And because of independence, you assume that both sensor systems missing a real person is so improbable that it will essentially never happen. Sure, it's not 100% independent, but there are hundreds or thousands of failure modes that are independent. Complete independence would let you multiply the two failure probabilities: if each system fails on average once every 10,000 hours (a per-hour failure probability of 1/10,000), the chance that both fail in the same hour is 1/10,000 × 1/10,000 = 1/100,000,000, i.e. one joint failure every 100 million hours. Since the systems are not completely independent, the real figure is lower. But if you end up somewhere around 10 million hours, that's a lot better than 10,000 hours. Even 1 million is a lot better than 10,000.
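To put rough numbers on "not 100% independent", one common reliability-engineering approach (swapped in here for illustration, not something from the post or from Mobileye) is the beta-factor common-cause model: a fraction beta of each channel's failures is assumed to take out both channels at once, and the rest are independent. The sketch uses the post's 1-in-10,000-hours figure; the function names and the beta values are arbitrary, hypothetical choices.

```python
def joint_failure_prob(p: float, beta: float = 0.0) -> float:
    """Approximate per-hour probability that BOTH perception channels miss.

    p: per-hour miss probability of each channel (assumed equal).
    beta: fraction of each channel's failures that are common-cause,
          i.e. knock out both channels at once (beta-factor model).
    """
    common_cause = beta * p                # one event takes out both channels
    independent = ((1.0 - beta) * p) ** 2  # both fail on their own in the same hour
    # Rare-event approximation: the two contributions are simply added.
    return common_cause + independent


def hours_between_joint_failures(p: float, beta: float = 0.0) -> float:
    return 1.0 / joint_failure_prob(p, beta)


p = 1 / 10_000  # one miss per 10,000 hours per channel, as in the post
for beta in (0.0, 0.001, 0.01):
    hours = hours_between_joint_failures(p, beta)
    print(f"beta={beta}: ~{hours:,.0f} hours between joint misses")
```

With beta = 0, 0.001 and 0.01 this gives roughly 100 million, 9 million and 1 million hours between joint misses, in line with the range described above. Note how quickly the common-cause term dominates: even 1% shared failures costs about two orders of magnitude.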

Waymo already uses 4D imaging radars in its 5th-gen cars, and Mobileye is planning to as well.

Mobileye's radar, for example, produces over 500k points per second, while Tesla's radar produces 900 points per second. That is a huge difference, and it matters for sensor fusion.

Take this video, for example. It only takes seconds to go from good weather to zero-visibility fog, and you can't stop in the middle of the road because you become a target to be hit. A vision-only system would be f***ed.

www.youtube.com/watch?v=uu-OLV3x0E0

I should note that Amnon has always made clear in his presentations that they are talking about perception independence, not driving-policy independence, and that the driving policy uses two independent ways of sensing the environment.

Independence:

If you read the fine print, they're independent perceptually, but clearly not fully independent as successful and trustable stand-alone AV operators in normal operation. Right after claiming they are, Mobileye clarifies that the Vision side is the "backbone", consistent with my argument that if one side can operate alone, that can only be the Vision side.

Yes, that kind of redundancy is hardware and system redundancy, which is different from what true redundancy is about. Here is what Mobileye is doing for system redundancy, if you are wondering. Obviously the vehicle they are using, similar to Waymo's, has steering, brake and power redundancy (hardware redundancy).

Common-cause failure:

Seems very general; any vehicle or system can suffer this. True redundancy against this would require dual or secondary everything: battery module, sensors, electronics, wiring, electromechanical control. As @Daniel in SD said, this isn't that kind of redundancy. Thinking about it, I'd say it's a complementary architecture to draw increased confidence from each sensor set's strengths, per below:

They also have a fail-operational board that has 3x EyeQ5H and acts as a backup if the primary system fails. The fail-operational board also handles perception, mapping and planning independently. All together you get 9x EyeQ5; in the future it will be 3x EyeQ6.

This is referred to as late fusionTheir real point of differentiation, according to the flow diagram and per the summary by @diplomat33, is that the fusion of the two sides comes after the Perception modules (though there is fusion of Radar+Lidar in the Perception module of that side). So not Sensor Fusion overall

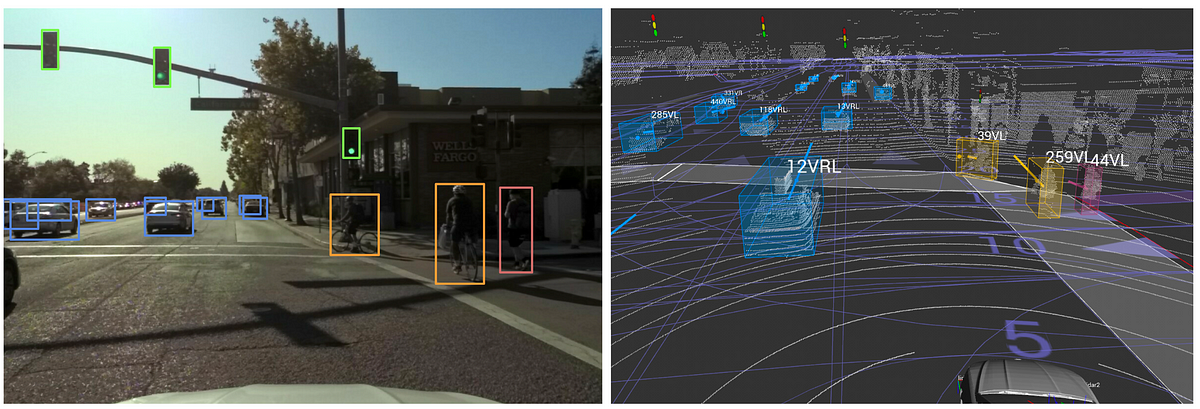

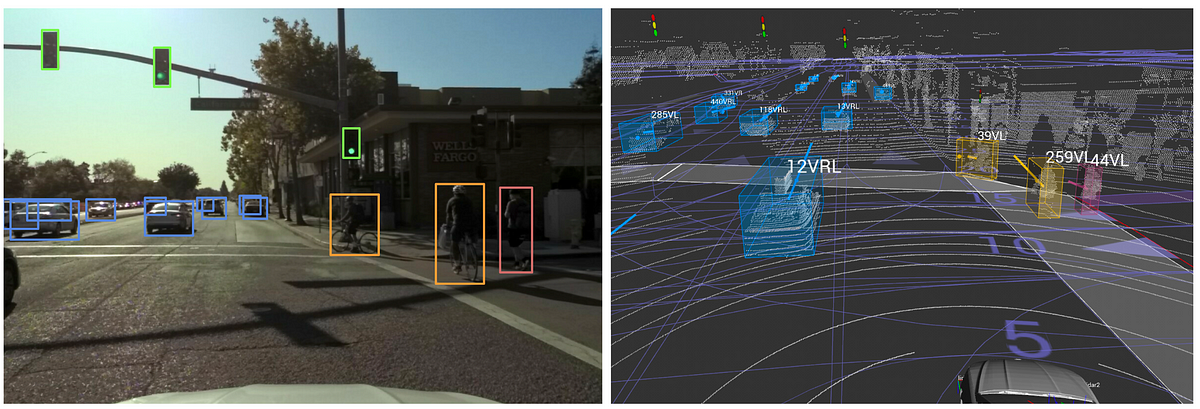

Leveraging Early Sensor Fusion for Safer Autonomous Vehicles

By: Interns Eric Vincent¹ and Kanaad Parvate², with support from Andrew Zhong, Lars Schnyder, Kiril Vidimce, Lei Zhang, and Ashesh Jain…

medium.com

medium.com

The radar/Lidar with a front camera can absolutely be used to drive the car in routes that involve a traffic light control. It’s crucial to actually have a system that can drive independently (in most cases). Because you can use it to bring the car to a safe parking spot if a failure were to happen. Especially if you have passengers, you don’t want to just stop in the middle of the highway, you want to lane change to the shoulder and then park. If there is no shoulder then take the next exit and parking in a public parking lot., but Policy and Planning fusion of the two World Views. Although as I keep saying, the Radar+Lidar cannot actually be used to safely pilot the AV in the real world, nonetheless the system architecture asks for its World View as if it could, and this is perhaps a good way to solve well-known problems of normal Sensor Fusion.
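The fallback behavior described above (pull onto the shoulder, or take the next exit if there is no shoulder, rather than stopping in a live lane) can be expressed as a small decision function. This is a hypothetical sketch: the `Maneuver` names, the boolean health flags, and the choice to stop in lane when both channels are lost are assumptions for illustration, not Mobileye's actual minimal-risk logic.

```python
from enum import Enum, auto


class Maneuver(Enum):
    """Hypothetical high-level actions for a degraded-mode planner."""
    CONTINUE = auto()
    PULL_ONTO_SHOULDER = auto()
    EXIT_AND_PARK = auto()
    STOP_IN_LANE = auto()


def fallback_maneuver(primary_ok: bool, backup_ok: bool,
                      shoulder_available: bool) -> Maneuver:
    """Pick a maneuver from the health of two independent driving channels."""
    if primary_ok and backup_ok:
        return Maneuver.CONTINUE
    if primary_ok or backup_ok:
        # One channel can still drive, so use it to reach a safe spot
        # instead of stopping in a live lane.
        if shoulder_available:
            return Maneuver.PULL_ONTO_SHOULDER
        return Maneuver.EXIT_AND_PARK
    # Assumption: with both channels lost nothing can drive, so stop in lane.
    return Maneuver.STOP_IN_LANE


print(fallback_maneuver(primary_ok=False, backup_ok=True, shoulder_available=False))
```

The key design point is the middle branch: because a single surviving channel can still drive, a channel failure leads to a controlled maneuver rather than an immediate stop.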

It will do all of that and then more.

I'm guessing that what is really going on here is a dominantly Vision-driven car, not unlike the Tesla Vision FSD approach, but using the Radar+Lidar in a scout / backseat-driver role.

"I saw something that you missed or misjudged, better not go there."

Or, "you saw a confusing shadow or puddle across the road, but I can tell you it's clearly not a solid object or a ditch".

Or, (famous edge case) "I'm very confident that image you see of a (painted-on) clear underpass is really a solid wall!"

Remember, the problem Tesla is having is because they are using radar hardware from 2011 and 2014.

In other words, it goes a long way towards solving the minority set (but a critically important set) of cases where Vision is non-confident or falsely confident. And it does so without introducing the kind of difficult Sensor Fusion challenges that people commonly talk about, including the noisy pulsing radar-estimation examples given by Andrej Karpathy in his recent CVPR talk.

Good analogy, but this makes the radar/lidar system look weak.

RadarLidar: BlippyBlip, I don't know, kind of noisy data...

Mobileye will have an unedited video showcasing their radar/lidar-only system later in the year. Admittedly, that system is behind their vision-only perception system.

Hope you enjoyed that. I like the approach but see it not as real redundancy, more like recon for the squad. I don't think they should be describing it as a self-sufficient AV using Radar+Lidar; that's not real, but it informs the architectural flow diagram and is a way to resolve some known conflicts, as posed in Elon's tweet:

"When radar and vision disagree, which one do you believe? Vision has much more precision, so better to double down on vision than do sensor fusion."Mobileye is avoiding sensor fusion, avoiding Karpathy's radar-noise example, adding Lidar which trumps the precision argument, and letting the Planning module choose, in the moment, which divergent World View item to trust. How to choose? Each of the Perception output World View data sets have already assigned confidence values to their detected objects and drivable-space regions, so generally pick the more confident one - but quite importantly, also weight-adjusted for factors of downside risk. Most often they will agree.
