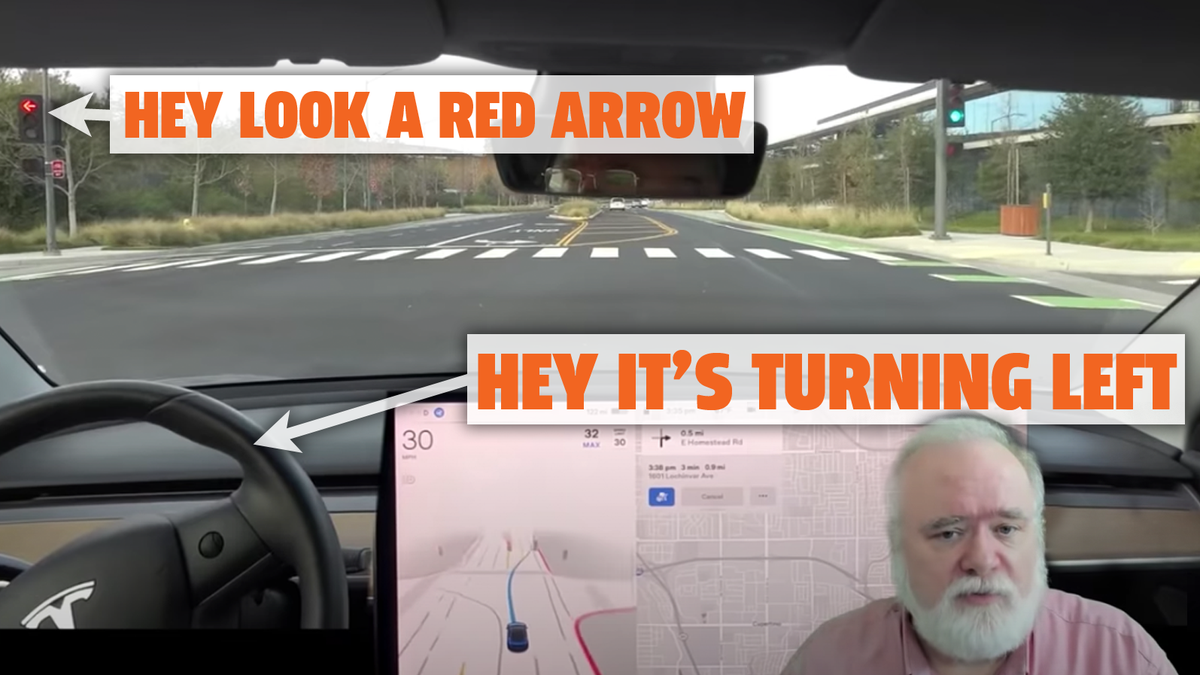

Would you consider Tesla using OpenStreetMap-type/quality data to be detailed? Here's OSM's understanding of the start of North Tantau Avenue, where you turned right from Stevens Creek and your video showed FSD Beta mistakenly getting into the left-turn lane when it should have kept right to go straight, and then running a red left-turn light:

Notably, that map data indicates that the oncoming (southbound) direction has `lanes:forward=3` and `turn:lanes:forward=left|left|through|right`. That seems inconsistent, because the turn value has 4 entries for 3 lanes. I'm guessing whoever entered it intended the "through" to be for the bike lane.
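To make the mismatch concrete, here is a minimal Python sketch of the kind of consistency check a map consumer could run. It assumes the usual OSM convention that a `turn:lanes:forward` value has one `|`-separated entry per forward lane (empty entries meaning "no marking"). The function names are hypothetical, and this is not how Tesla or any particular OSM validator actually does it.

```python
def turn_lane_entries(value: str) -> list[str]:
    """Split an OSM turn:lanes value into per-lane entries.

    Lanes are separated by '|'; an empty entry means no turn marking.
    Multiple markings within one lane (e.g. 'through;right') stay together.
    """
    return value.split("|")


def check_forward_lanes(tags: dict[str, str]) -> list[str]:
    """Return warnings when lanes:forward disagrees with turn:lanes:forward."""
    warnings = []
    declared = tags.get("lanes:forward")
    turn = tags.get("turn:lanes:forward")
    if declared is not None and turn is not None:
        entries = turn_lane_entries(turn)
        if not declared.isdigit():
            warnings.append(f"lanes:forward={declared!r} is not a plain integer")
        elif int(declared) != len(entries):
            warnings.append(
                f"lanes:forward={declared} but turn:lanes:forward "
                f"has {len(entries)} entries: {entries}"
            )
    return warnings


# Tags as quoted above for the southbound segment.
segment = {
    "lanes:forward": "3",
    "turn:lanes:forward": "left|left|through|right",
}
for warning in check_forward_lanes(segment):
    print(warning)
```

A check like this can only flag the disagreement; it can't tell whether `lanes:forward` or the turn tag is the wrong one (for example, whether a bike lane slipped into the turn tag).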

The immediately following segment, way 417037131 (North Tantau Avenue), has `lanes=4` without `lanes:forward` or `lanes:backward`, but one could potentially infer the lane counts from `turn:lanes:forward=left||`, which indicates 3 lanes going forward (north for this segment). Is this just poor FSD Beta logic, getting confused by the missing directional lane counts when it could have inferred them from the turn tag… except that the previous segment (incorrectly?) included the bike lane in that tag?
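A rough sketch of that inference, again in Python with hypothetical helper names. It assumes the OSM convention that `lanes` counts both directions plus any shared `lanes:both_ways` centre lane; it prefers explicit `lanes:forward`/`lanes:backward`, falls back to counting turn-tag entries, and derives a missing side by subtraction. Whether FSD Beta does anything like this is unknown.

```python
from typing import Optional


def _count(value: Optional[str]) -> Optional[int]:
    """Number of '|'-separated entries in a turn:lanes value, or None if absent."""
    return None if value is None else len(value.split("|"))


def _as_int(value: Optional[str]) -> Optional[int]:
    """Parse a plain integer tag value; None if missing or not an integer."""
    return int(value) if value is not None and value.isdigit() else None


def infer_directional_lanes(tags: dict[str, str]) -> tuple[Optional[int], Optional[int]]:
    """Guess (forward, backward) lane counts for an OSM way.

    Explicit lanes:forward / lanes:backward win; otherwise fall back to the
    number of entries in turn:lanes:forward / turn:lanes:backward; finally,
    derive a missing side as lanes minus lanes:both_ways minus the known side.
    """
    total = _as_int(tags.get("lanes"))
    shared = _as_int(tags.get("lanes:both_ways")) or 0

    forward = _as_int(tags.get("lanes:forward"))
    if forward is None:
        forward = _count(tags.get("turn:lanes:forward"))
    backward = _as_int(tags.get("lanes:backward"))
    if backward is None:
        backward = _count(tags.get("turn:lanes:backward"))

    if total is not None:
        remaining = total - shared  # lanes left for the two directions
        if forward is not None and backward is None and remaining >= forward:
            backward = remaining - forward
        elif backward is not None and forward is None and remaining >= backward:
            forward = remaining - backward
    return forward, backward


# Way 417037131 as quoted above: lanes=4, no lanes:forward/backward.
way_417037131 = {"lanes": "4", "turn:lanes:forward": "left||"}
print(infer_directional_lanes(way_417037131))
```

Because explicit counts win, a turn tag that wrongly includes a bike lane only skews the count when the directional lane count is missing; it can still mislead which physical lane is the left-turn lane.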

Basically, some would say those extra tags go beyond plain road connectivity for navigation and count as detailed, even if they are potentially inaccurate.