Please see this

Thanks! I posted that video earlier, but somehow I missed the part about how they actually collected the images.


Please see this

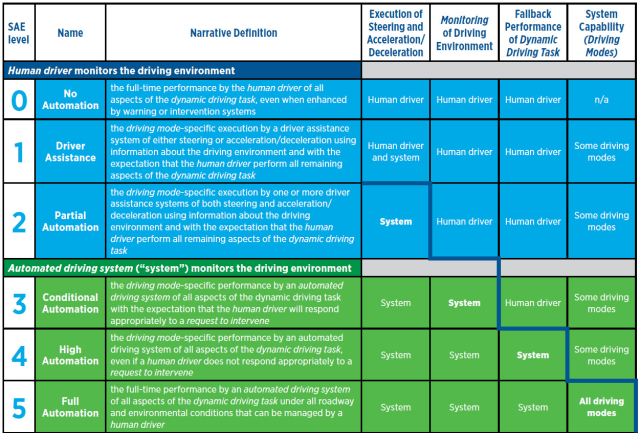

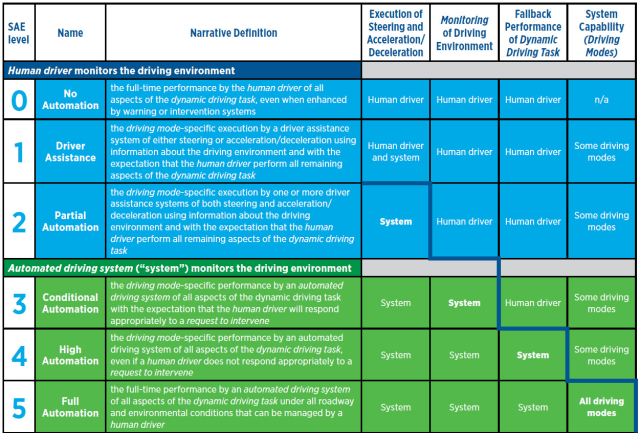

Don't you mean L3 on the highway with no driver input? Autopilot is already L2.

Have you read this? Karpathy argues that developing AI for games is much easier than developing AI for the real world.

AlphaGo, in context – Andrej Karpathy – Medium

Model 3s can autonomously drive into your garage. Technically, that makes them Level 4 in your driveway.

Images are uploaded to Tesla’s servers and then manually labelled by Tesla employees. Check out Andrej Karpathy’s talk.

The SAE levels of automation don’t capture a lot of important distinctions. Model 3s can autonomously drive into your garage. Technically, that makes them Level 4 in your driveway. The definition of Level 4 doesn’t include any stipulations about which driving modes don’t have a human in the loop.

People with the FSD option will in fact get something that drives itself on local roads, stops at stop signs, etc. But they will have to supervise it the entire time, making it still an L2 system.

I would disagree that it would still be L2. L2 is defined as a system where both speed and steering are automated by the car, but the driver does everything else, including monitoring the environment. L3 is defined as a system where both speed and steering are automated AND the car also monitors the environment, but the driver may need to take over (hence still needs to supervise). So L3 can still require driver supervision. Just because the driver may need to supervise does not automatically bump it back down to L2. In your scenario, if the car is driving itself on local roads and is able to stop at stop signs, traffic lights, etc., then it is monitoring the environment and hence by definition it is L3, not L2.

Quite possibly true, however, is anyone seriously considering using their Tesla that way? I for one certainly would not, and doubt anyone who values their vehicle would either, even if ever made available, which I too doubt. [snip] Or, of course, the holy grail being to let the Tesla be an autonomous taxi in a ride sharing network (which implies you don't need a driver in the car at all), or summon itself across the country to come and get you.


As a Software Engineer working with ML systems, I can safely say this is false. The processes involved in producing production-ready ML code are radically different from traditional software engineering.

I'm a software engineer and the lead developer on a mobile app that deploys in 7 days, serves over 2 million individuals, and uses multiple neural net models to scan medications.

It is 100% jargon. Look up what jargon is. Ask any machine learning engineer or data scientist what "software 2.0" is and they will look at you funny. Now ask them about data gathering, data labeling, and building models, and you will have an hour-long conversation.

In fact, you will find no mention of "software 2.0" on Google until November 2017, when Andrej wrote his blog post.

That is the definition of jargon.

Data gathering and data labeling are machine learning 101. Software has been composed of just NN models for a long time. People who think it's something new and cutting-edge are simply misinformed.

The SAE levels of automation don’t capture a lot of important distinctions. Model 3s can autonomously drive into your garage. Technically, that makes them Level 4 in your driveway. The definition of Level 4 doesn’t include any stipulations about which driving modes don’t have a human in the loop.

Similarly, Audi’s planned Traffic Jam Pilot system is Level 3, even though it’s much less capable than Autopilot today.

Adaptive cruise control and lane keeping on the highway are sufficient to be classified as Level 2, but insufficient to drive on the highway without driver input. Driving on the highway, on-ramp to off-ramp, with no driver input requires a much more extensive set of features. However, both are still Level 2 systems.

I think Enhanced Autopilot will most likely remain Level 2.

But I think what we can all agree on is that the SAE level definitions are ambiguous, and we could argue all day about where any given real-world system should land, between L2 and L3 in particular.

I'm interested in whether you will ever be allowed to read a book while letting the Tesla handle the driving, or truly "smart summon" it out of your garage and then let it park itself unsupervised at your destination. Or, of course, the holy grail being to let the Tesla be an autonomous taxi in a ride sharing network (which implies you don't need a driver in the car at all), or summon itself across the country to come and get you.

True, but I'm stating my use case. Many features are released by many companies which have no use in the real world. See Microsoft: Clippy.

Red herring. Tesla promised it; they need to deliver regardless of what you think is useful or prudent.

Blader, you did not respond to the message you quoted. You responded about the term "Software 2.0". Why don't you respond about whether the processes are the same or different for machine learning vs. writing code? Obviously ML also involves some hand-written code, but it's a smaller chunk, and you can have many "releases" of an ML system without touching a single line of hand-written code, so the business and engineering processes involved are clearly different. Similarities, yes; overlap, yes; but there are also notable differences.
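The point about shipping new ML "releases" without touching hand-written code can be illustrated with a toy sketch. It assumes a tiny linear classifier: `predict` is the hand-written part and never changes, while each hypothetical release is just a different set of learned parameters loaded as data. The names `predict`, `RELEASE_1`, and `RELEASE_2` and the parameter values are made up for illustration; this is not how Tesla or any particular team actually packages models.

```python
import json


def predict(features: list[float], weights: list[float], bias: float) -> int:
    """Hand-written code: a fixed linear classifier that never changes between releases."""
    score = sum(f * w for f, w in zip(features, weights)) + bias
    return 1 if score > 0 else 0


# Two hypothetical model "releases". Only the learned parameters (data) differ;
# in practice these would come from training, not be typed in by hand.
RELEASE_1 = json.loads('{"weights": [0.5, -1.0], "bias": 0.0}')
RELEASE_2 = json.loads('{"weights": [0.5, -1.0], "bias": 1.0}')

sample = [1.0, 1.0]
print(predict(sample, RELEASE_1["weights"], RELEASE_1["bias"]))  # prints 0
print(predict(sample, RELEASE_2["weights"], RELEASE_2["bias"]))  # prints 1
```

The same input gets a different answer purely because the parameters changed, which is why versioning, testing, and rolling out an ML system revolves around data and trained weights as much as around source code.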

Personally, I think being able to read a book while the car cruises on the highway is definitely doable with AP2.

No road debris concerns?

I would think the front radar could detect road debris. And Tesla could probably design the camera vision to recognize road debris too although I am sure that would be a challenge.

I agree with you that the SAE levels don't capture a lot of specific distinctions, which is why different people have slightly different interpretations of what the levels actually mean in the real world. I would disagree with you that Summon is L4 in your driveway. With Summon, the car is not self-driving at all; you are directly moving the car by remote control using your phone. It's no different from driving a little toy car with a remote control. That's not L4.

I would also point out that the SAE levels don't say anything about how to monitor driver engagement. Just because a system tracks the driver's eyes to gauge engagement does not automatically make it L3. For example, I would argue that GM's Super Cruise, while hands-free, is still L2.


For reference again:

L3 is defined as a system where both speed and steering are automated AND the car also monitors the environment but the driver may need to take over (hence still needs to supervise). So L3 can still require driver supervision.

No, L3 does NOT require driver supervision. It requires the driver as a safety fallback after an appropriately timed request (up to 10 seconds, according to the industry). That's a big difference. Again, the 30-page document goes into detail about this.
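The L2 vs. L3 distinction argued over in this thread can be sketched as a small decision function. This is a minimal Python sketch of a simplified reading of the SAE levels: at L2 the driver monitors the environment, at L3 the system monitors it and the driver is only a fallback after a takeover request, and at L4 and above no human fallback is needed. The `SystemTraits` fields and the `sae_level` function are hypothetical names for illustration. This is not the SAE J3016 text, which also covers operational design domains and other details the sketch ignores. Note that how the driver's engagement is checked (eye tracking, hands on the wheel) is deliberately not an input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemTraits:
    """Hypothetical yes/no traits of a driving-automation feature."""
    controls_speed: bool            # longitudinal control (e.g. adaptive cruise)
    controls_steering: bool         # lateral control (e.g. lane keeping)
    monitors_environment: bool      # the system, not the human, watches the road
    needs_human_fallback: bool      # a human must take over when requested
    unlimited_domain: bool = False  # works everywhere, in all conditions


def sae_level(t: SystemTraits) -> int:
    """Map traits to a level under the simplified reading described above."""
    if not (t.controls_speed or t.controls_steering):
        return 0  # no sustained automation of speed or steering
    if not (t.controls_speed and t.controls_steering):
        return 1  # only one of speed or steering is automated
    if not t.monitors_environment:
        return 2  # driver supervises the whole time
    if t.needs_human_fallback:
        return 3  # driver is a fallback after a takeover request, not a supervisor
    return 5 if t.unlimited_domain else 4


# A feature that steers and controls speed but leaves monitoring to the driver.
print(sae_level(SystemTraits(True, True, False, True)))  # prints 2
# A feature that also monitors the road but may ask the driver to take over.
print(sae_level(SystemTraits(True, True, True, True)))   # prints 3
```

Under this sketch, a very capable feature still comes out as 2 if the driver has to monitor the road, which is the crux of the disagreement above; the real argument is over which traits a given system actually has.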