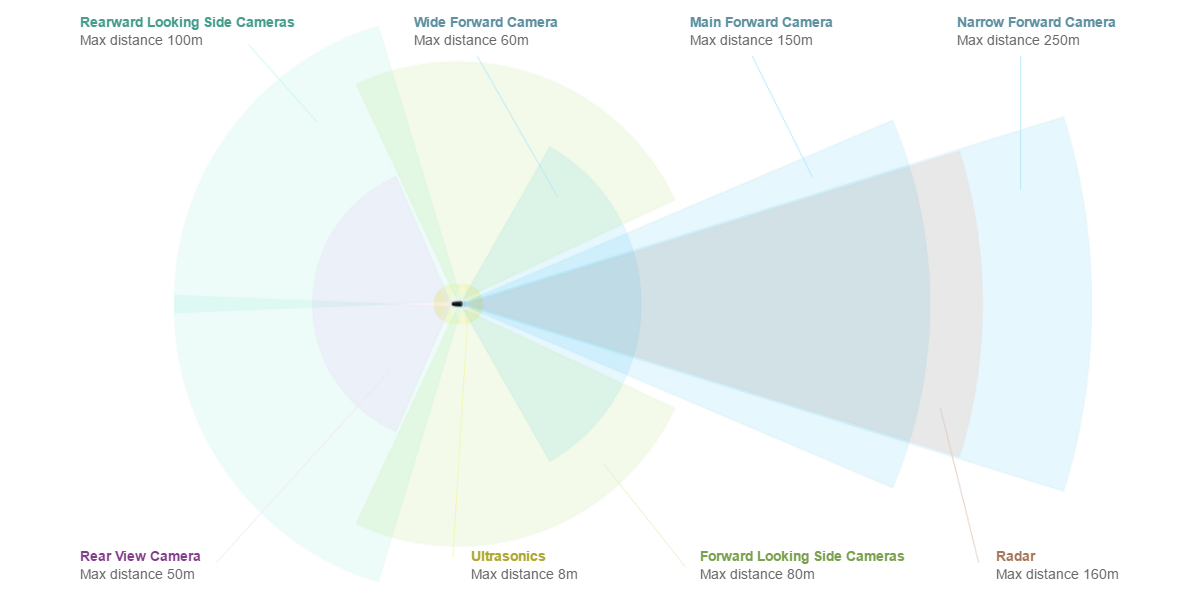

I wonder if the reason FSD can't currently handle roundabouts or T-junctions autonomously is that it doesn't have any sensors pointing in the right direction.

Imagine a T-junction: that's going to need sensors towards the nose of the car looking out almost at right angles to it (or, if the car is angled slightly to the left, even further back). Likewise, at a roundabout it'll need to be looking to the right.

In both cases, that means seeing not just a few feet from the car, but a long, long way out.