What about all the testing Tesla supposedly does before shipping every bit of code we were assured of by some people?

I, for one, would be glad to know that the system was validated in shadow mode before being deployed live.

HW2.5 capabilities

- Thread starter verygreen

- Tags

- Autonomous Vehicles

JeffK

Well-Known Member

What about all the testing Tesla supposedly does before shipping every bit of code we were assured of by some people?

I'm sure they do test it, as Elon has mentioned in the past... but as every software developer knows, the end users are the best beta testers. They can find problems you didn't even know existed, even when running only in shadow mode.

Other tech companies like facebook, etc do the same thing with certain features.

croman

Well-Known Member

I'm sure they do test it as Elon has mentioned in the past... but like every software developer knows, the end users are the best beta testers. They can find problems you didn't even know existed even when running only in shadow mode.

Other tech companies like facebook, etc do the same thing with certain features.

I don't drive Facebook. This is life and death. Tesla shouldn't ask us to pay $100k+ to treat us like guinea pigs with our life on the line. It's completely immoral and disgusting that they lack full and fair disclosure of such critical safety equipment. They did that garbage with me for my Q4 2016 AP2 purchase. I specifically wanted AEB and I was assured, in writing, that it would be there.

It makes total sense that Tesla has to validate their software with new hardware but 99.99% of Tesla HW2.5 owners would never know their car doesn't have the same features as AP2 cars, especially critical safety features like AEB.

So, Blader, you claimed LiDAR needed ZERO computation for object detection which, in the application of self-driving cars, is patently false.

Don't be naive, I have posted that same video and timestamp multiple times. (Heck, you probably took that from one of my previous posts... I'm watching you!)

But we are comparing camera with lidar here, so let's stay on topic people!

Lidar doesn't NEED to know whether the object in-front of it is a pedestrian, bicyclist or a construction cone because it knows its exact dimension and position in space.

That doesn't mean you don't want to perform classification on the detected objects (raw data).

The point of this 5 page discussion is that:

1) A picture is a collection of meaningless numbers which, without cutting-edge deep learning, is useless to a self-driving car or any vision system for that matter. It therefore requires object recognition and classification models.

2) A lidar returns the xyz of the world around it, so even without any classification model you can know that there is an object of precise dimensions in front of you.

3) A radar returns data points of the world around it (although with extremely low/garbage resolution), so even without any classification model you can know that there is an object of a certain size in front of you.

Secondly, a NN needs to recognize objects in a picture and any object it can't recognize, the car CANNOT see.

This isn't the case for lidar which returns 3d coordinates.

End result is, camera is not the best sensor, lidar is.

Having found a "BLOB of something" in positional space is utterly meaningless unless you know what it is. Thus, every BLOB must be classified. And, in fact, Google already told you that was the case. And even demonstrated it.

Now you're quickly changing the subject to claim that LiDAR provides more useful data than the other two methods. Well of course it does. It's an active system with higher resolution.

But it doesn't remove the requirement to classify all the objects it actively scans. As should be obvious to any self-proclaimed genius.

Thus, your claim of zero computational power for LiDAR object classification is 100% wrong. Chris Urmson already told you that Google classifies all objects, yet you repeatedly made the false claim anyway.

Why make phony claims if "you're never wrong"??

Lidar is not computer vision but a "high resolution directional radar". It does not recognize any objects or their movement; it only measures the length of a direct line through free (empty) space in one exact direction. It repeats this process of measuring the lengths of such straight lines coming 'out' of it.
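To make the "length of a straight line in one exact direction" idea concrete, here is a minimal sketch of how a single return could be turned into an xyz point. The function name, the angle conventions and the axis layout (x forward, y left, z up) are assumptions for illustration, not any particular sensor's format.

```python
import math


def beam_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one lidar return to Cartesian coordinates in the sensor frame.

    Assumed convention (hypothetical): azimuth is measured in the horizontal
    plane from straight ahead, elevation is measured up from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance projected onto the ground plane
    x = horizontal * math.cos(az)        # forward
    y = horizontal * math.sin(az)        # left
    z = range_m * math.sin(el)           # up
    return x, y, z


# One return: 10 m along a beam pointing straight ahead and level.
point = beam_to_xyz(10.0, 0.0, 0.0)
```

Repeating this for every beam in a sweep is what produces the point cloud discussed in this thread.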

Vision is what your eyes and brain do. They do not measure distances from your head but recognize color coming into your eyes from different directions.

If one does not have functioning computer vision, lidar comes in very handy because it enables a machine to 'know' in a simple and computationally cheap way if there is some free space in front of it or not. This suffices for relatively slow, safe automated movements. Companies using LIDAR tech will show some early progress, their cars will be able to slowly navigate their environments, but lidar faces hard problems in complex environments full of distinct objects, any of them having their own speed and trajectory. Lidar knows nothing about distinct objects; color (even just gray-scale) is needed to make an object model of the environment and recognize future dangers. Autonomous driving is all about this recognition of possible future dangers and adequate responses.

It boils down to the speed of FSD - lidar based FSD is possible up to some relatively slow speed. For higher speed FSD, real object recognition is mandatory. Ignoring color in this process (i.e. using lidar) makes the recognition much harder. It may happen that computer vision will not be successful for quite some time and the LIDAR based approach will appear a better way. In the long run the vision approach will win.

AnxietyRanger

Well-Known Member

It boils down to the speed of FSD - lidar based FSD is possible up to some relatively slow speed. For higher speed FSD, real object recognition is mandatory. Ignoring color in this process (i.e. using lidar) makes the recognition much harder. It may happen that computer vision will not be successful for quite some time and the LIDAR based approach will appear a better way. In the long run the vision approach will win.

Why would this be an either-or scenario, though?

All technologies provide different benefits. For example, radar can see through objects, and lidar can see in the dark and provide very reliable distance information. These are things where radar and lidar are superior to vision, no matter what.

It seems to me that this has become a bit of a Jobsian quest. Because Elon has been sour on Lidar for FSD, a lot of people are parroting this as an either-or scenario, when in reality it probably is not.

Are there current benefits for using radar and lidar that will eventually go away, including computational ones and vision being harder? Quite probably. That is probably why Tesla includes limited radar and ultrasonics on AP2 as well, because it makes early coding simpler.

But that doesn't mean these technologies do not have inherent benefits that help in the long run as well.

Neither Jobs nor Musk probably were/are quite as rigid in their own thinking at all, they were/are just masters of selling what they have available now.

lunitiks

Cool James & Black Teacher

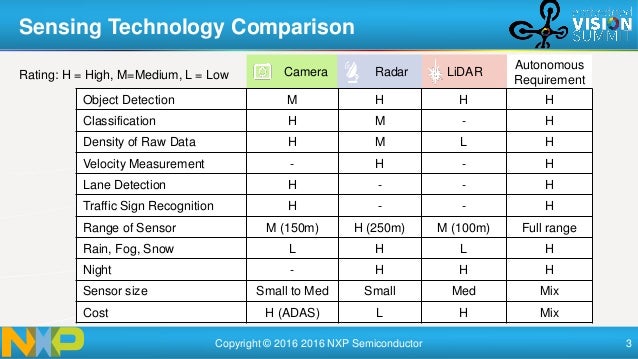

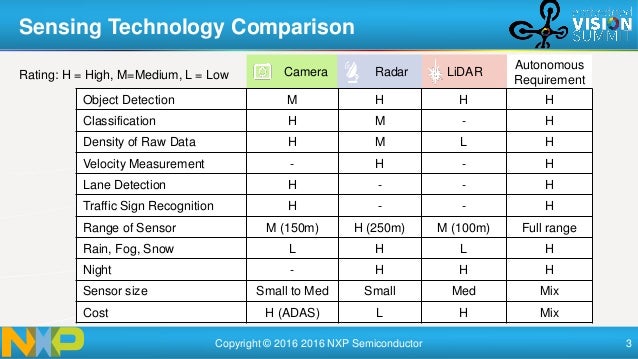

Well if that chart's true... GO RADAR!

Why would this be an either-or scenario, though?

All technologies provide different benefits, for example radar can see through objects and lidar can see in the dark and provide very reliable distance information, for example - these are things where radar and lidar are superior to vision, no matter what.

It seems to me that this has become a bit of a Jobsian quest. Because Elon has been sour on Lidar for FSD, a lot of people are parroting this as an either-or scenario, when in reality it probably is not.

Are there current benefits for using radar and lidar that will eventually go away, including computational ones and vision being harder? Quite probably. That is probably why Tesla includes limited radar and ultrasonics on AP2 as well, because it makes early coding simpler.

But that doesn't mean these technologies do not have inherent benefits that help in the long run as well.

Neither Jobs nor Musk probably were/are quite as rigid in their own thinking at all, they were/are just masters of selling what they have available now.

JeffK

Well-Known Member

I don't drive Facebook. This is life and death. Tesla shouldn't ask us to pay $100k+ to treat us like guinea pigs with our life on the line. It's completely immoral and disgusting that they lack full and fair disclosure of such critical safety equipment. They did that garbage with me for my Q4 2016 AP2 purchase. I specifically wanted AEB and I was assured, in writing, that it would be there.

It makes total sense that Tesla has to validate their software with new hardware but 99.99% of Tesla HW2.5 owners would never know their car doesn't have the same features as AP2 cars, especially critical safety features like AEB.

You're suggesting that 99.99% of Tesla owners with HW 2.5 cars don't read their email regarding the HW 2.5 cars.

From that message, it looked like they were disclosing it to people. I know in Q4 2016 it was made very clear on the AP2 phone call and the website that it wouldn't have certain safety features until a later date (they happened to be wrong about the exact date, but they still disclosed the lack of features).

Oct 2016 conference call:

the feature-set initially will be disabled say well at least for the first few months.

Tesla blog Oct 2016:

Before activating the features enabled by the new hardware, we will further calibrate the system using millions of miles of real-world driving to ensure significant improvements to safety and convenience. While this is occurring, Teslas with new hardware will temporarily lack certain features currently available on Teslas with first-generation Autopilot hardware, including some standard safety features such as automatic emergency braking, collision warning, lane holding and active cruise control. As these features are robustly validated we will enable them over the air, together with a rapidly expanding set of entirely new features. As always, our over-the-air software updates will keep customers at the forefront of technology and continue to make every Tesla, including those equipped with first-generation Autopilot and earlier cars, more capable over time.

They aren't treating people like guinea pigs because they are testing it in shadow mode. If they did the opposite and tested it live, then people would be like guinea pigs. If AEB triggered too many false positives it would endanger both the passengers and other drivers.
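As a rough illustration of what "testing in shadow mode" could look like, here is a sketch that compares what an inactive AEB routine would have done against what the driver actually did. Everything in it (the `ShadowEvent` record, its fields, the report keys) is hypothetical and is not Tesla's actual pipeline, which has not been described in this thread.

```python
from dataclasses import dataclass


@dataclass
class ShadowEvent:
    shadow_aeb_fired: bool    # what the disabled software would have done
    driver_braked_hard: bool  # what the human actually did


def shadow_report(events):
    """Summarize disagreements between the shadow decision and the driver."""
    fired = [e for e in events if e.shadow_aeb_fired]
    # Shadow AEB wanted to brake but the driver saw no need: candidate false positive.
    false_pos = sum(1 for e in fired if not e.driver_braked_hard)
    # Driver braked hard but shadow AEB stayed quiet: candidate miss.
    missed = sum(1 for e in events
                 if e.driver_braked_hard and not e.shadow_aeb_fired)
    return {
        "fired": len(fired),
        "possible_false_positives": false_pos,
        "possible_misses": missed,
    }


log = [
    ShadowEvent(True, True),
    ShadowEvent(True, False),
    ShadowEvent(False, True),
    ShadowEvent(False, False),
]
report = shadow_report(log)
```

The point of the sketch is only that nothing is actuated: the software's decisions are logged and scored, so a high false-positive count shows up in the data rather than on the road.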

Why would this be an either-or scenario, though?

All technologies provide different benefits, for example radar can see through objects and lidar can see in the dark and provide very reliable distance information, for example - these are things where radar and lidar are superior to vision, no matter what.

It seems to me that this has become a bit of a Jobsian quest. Because Elon has been sour on Lidar for FSD, a lot of people are parroting this as an either-or scenario, when in reality it probably is not.

Are there current benefits for using radar and lidar that will eventually go away, including computational ones and vision being harder? Quite probably. That is probably why Tesla includes limited radar and ultrasonics on AP2 as well, because it makes early coding simpler.

But that doesn't mean these technologies do not have inherent benefits that help in the long run as well.

Neither Jobs nor Musk probably were/are quite as rigid in their own thinking at all, they were/are just masters of selling what they have available now.

If Lidar was that important I'm sure Elon would've included it as part of the current hardware suite.

croman

Well-Known Member

You're suggesting that 99.99% of Tesla owners with HW 2.5 cars don't read their email regarding the HW 2.5 cars.

From that message, it looked like they were disclosing it to people. I know in Q4 2016 it was made very clear on the AP2 phone call and the website that it wouldn't have certain safety features until a later date (they happened to be wrong about the exact date, but they still disclosed the lack of features).

Oct 2016 conference call:

Tesla blog Oct 2016:

They aren't treating people like guinea pigs because they are testing it in shadow mode. If they did the opposite and tested it live, then people would be like guinea pigs. If AEB triggered too many false positives it would endanger both the passengers and other drivers.

If you've already bought the car, it's a bit late for full and fair disclosure. Nice try though. Tesla apologizing PhD?

Also, you acknowledge they got the date wrong. I knew cars in October didn't have AEB. I was told cars delivered in December 2016 would definitely have it and Tesla's website backed up those claims until it was sanitized. They were off by 6 months. It's shameful you've taken it upon yourself to continue Tesla's deceit.

Also, the website clearly said AEB would be present in December 2016 and was only changed in Feb 2017. I'm not an investor, nor is it legal to disclose limitations such as AEB not being present only to investors and not to actual consumers. Tesla failed and it is indicative of a poor ethical, moral, and business philosophy.

If Lidar was that important I'm sure Elon would've included it as part of the current hardware suite.

No, I don't think that's true. Elon has sold a vision; if he were to backtrack on that today, say "oops, we need lidar" and go ahead and put it in the M3, he'd lose all credibility.

Elon has staked his reputation and Tesla's on a vision/radar only approach, now we just need to wait a few years to see if he's right or not.

I think he is, I don't think lidar is required, but I think his timelines are waaaaaaaaaay off, but we already know that... "three months maybe, six months definitely..."

but we already know that... "three months maybe, six months definitely..."

And once FSD is delivered, we will have a clear baseline for Elon Time. That alone must be worth the extra wait!

So, Blader, you claimed LiDAR needed ZERO computation for object detection which, in the application of self-driving cars, is patently false.

Thus, your claim of zero computational power for LiDAR object classification is 100% wrong. Chris Urmson already told you that Google classifies all objects, yet you repeatedly made the false claim anyway.

No, I said lidar needed no computation for object detection. Object detection (also called recognition) and object classification are two vastly different things.

If you are going to come at me, at least come at me right!

Lidar needs 0 computation for any object detection task. zero, nada, none, zip.

Having found a "BLOB of something" in positional space is utterly meaningless unless you know what it is. Thus, every BLOB must be classified. And, in fact, Google already told you that was the case. And even demonstrated it.

Lidar doesn't provide a blob; it provides the precise and accurate 3D dimensions of an object.

This is lidar data without any classification.

This is lidar data with classification; it's simply putting 3D bounding boxes on the 3D point cloud and tagging them with different classes. With classification, you can do things like scene understanding and object prediction, and it also helps immensely with path planning. But without it, you can still detect objects in your path precisely and avoid them, because it's 3D data relative to your position.

This is lidar data with no classification (the dots) from a low-resolution lidar (a small number of lasers) projected onto an image. Notice you don't need to classify to know that there is an object of precise dimension, position and speed in front of your path or to the side of you, and you can actuate controls appropriately. This is the same thing adaptive cruise control has been doing for decades with an even lower resolution radar.
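The "something is in my path, whatever it is" check described here can be sketched as a simple filter over xyz points. This is an illustrative sketch only: the function name, the corridor width, the height band used to skip road returns, and the frame (x forward, y left, z up from the road surface, all in metres) are assumptions, and a real system would also have to handle noise, slopes and moving objects.

```python
def nearest_obstacle_ahead(points, half_width=1.0, min_height=0.3,
                           max_height=2.0, max_range=80.0):
    """Return the forward distance to the closest point inside the driving
    corridor, or None if the corridor is clear. No classification is used."""
    nearest = None
    for x, y, z in points:
        in_corridor = 0.0 < x <= max_range and abs(y) <= half_width
        # Skip road-surface returns and anything above the vehicle's height.
        above_ground = min_height <= z <= max_height
        if in_corridor and above_ground and (nearest is None or x < nearest):
            nearest = x
    return nearest


cloud = [
    (30.0, 0.2, 1.0),   # in the corridor, far away
    (12.0, 0.5, 0.8),   # in the corridor, closest
    (5.0, 3.0, 1.0),    # off to the side
    (8.0, 0.0, 0.05),   # road surface return
]
distance = nearest_obstacle_ahead(cloud)
```

The filter says nothing about what the object is or where it is heading, which is the part the classification side of this argument is about.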

Now you're quickly changing the subject to claim that LiDAR provides more useful data than the other two methods. Well of course it does. It's an active system with higher resolution.

But it doesn't remove the requirement to classify all the objects it actively scans. As should be obvious to any self-proclaimed genius.

Why make phony claims if "you're never wrong"??

You clearly have no clue what you are talking about. You are immensely out of your element.

I already posted a couple of videos which, if you had watched them, would have taught you a few things.

Elon has staked his reputation and Tesla's on a vision/radar only approach.

Because that's probably all you need. Humans only have two eyes and yet we can drive perfectly fine. The car has 8 eyes that can see at the same time plus radar plus sensors.

He would've added lidar in the very beginning if it was really a need.

Because that's probably all you need. Humans only have two eyes and yet we can drive perfectly fine. The car has 8 eyes that can see at the same time plus radar plus sensors.

He would've added lidar in the very beginning if it was really a need.

Yes, I said that if you kept reading

Blader, for you to claim that LiDAR requires no computation for object detection is so laughably naive and obtuse that you're obviously trolling.

No i said Lidar needed no computation for object detection. object detection(also called recognition) and object classification are vastly two different things....

What do you think all that processing power is doing in the trunk of every LiDAR test vehicle? There is an absolutely enormous amount of raw data being output by every horizontal scan every second and it must be assembled and processed. An object's "spot echoes" aren't free and without classification they are utterly meaningless. So, you're arguing that meaningless data requires no computation and you're not counting the enormous overhead of even gathering that data. If you hadn't taken your argument to such a clown-level I'd be mostly agreeing with your other points. LiDAR gives precise spatial positioning "by design" and has a number of other advantages.

Let's also understand that LiDAR still has a number of issues: There are latencies, which is why most LiDAR vehicles are limited to low speeds. There are enormous computational requirements, made worse by solid-state LiDAR which means multiple units and multiple data streams. And there are issues with harmful wavelengths, which is why the next generation of LiDAR will operate at a higher frequency.

Tesla's challenge, in a non-LiDAR setup, will be to understand the spatial positioning of all objects with just a front radar and surround cameras. I suspect this is mostly possible in the forward field-of-view, but enormously difficult (or impossible) to do with just single cameras in the other directions.

The problem with a single camera approach is predicting precise distances. I suspect Tesla will have trouble identifying distances to other perpendicular cars approaching a stop sign or realizing if someone is about to run a red light. Absent LiDAR, I think stereo cameras would have probably been a better approach and/or side radars.
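For reference, the reason stereo cameras help with distance is the standard pinhole stereo relation: depth equals focal length times baseline divided by disparity. The sketch below uses made-up numbers (a 1000-pixel focal length and a 0.3 m baseline), not the specifications of any Tesla camera.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Depth from a rectified stereo pair: Z = f * B / d.

    focal_px     focal length in pixels (assumed value in the example)
    baseline_m   distance between the two cameras in metres (assumed)
    disparity_px horizontal pixel shift of the same point between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# The same one-pixel matching error matters far more at long range.
near = stereo_depth_m(1000.0, 0.3, 30.0)       # about 10 m
near_off = stereo_depth_m(1000.0, 0.3, 29.0)   # slightly farther
far = stereo_depth_m(1000.0, 0.3, 3.0)         # about 100 m
far_off = stereo_depth_m(1000.0, 0.3, 2.0)     # about 150 m
```

Because disparity shrinks as distance grows, stereo range estimates degrade with distance, so a stereo pair would not by itself match the range precision claimed for lidar in this thread.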

I think it's "probably" possible to drive 2x better than a human with Tesla's setup, as Musk believes, but that's because humans are absolutely terrible drivers. And, in reality, no one would accept just a 50% accident reduction when the fault is placed on a machine and innocent people are being hurt. At-fault accidents need to be many times less than 2x. Thus, I strongly suspect Tesla will need to add additional hardware in the future, be it stereo cameras, side/rear radars, or LiDAR. In fact, the most robust setup is to contain all three: LiDAR, radar, and cameras, which I suspect all "future" self-driving vehicles will gain at some point.

Blader, for you to claim that LiDAR requires no computation for object detection is so laughably naive and obtuse that you're obviously trolling.

What do you think all that processing power is doing in the trunk of every LiDAR test vehicle? There is an absolutely enormous amount of raw data being output by every horizontal scan every second and it must be assembled and processed.

I'm gonna stop responding to you because it's clear that you have absolutely no clue what you're talking about nor what we are even discussing.

If you hadn't taken your argument to such a clown-level I'd be mostly agreeing with your other points. LiDAR gives precise spatial positioning "by design" and has a number of other advantages.

That's because I'm never wrong, because I do my diligent research.

AnxietyRanger

Well-Known Member

Because that's probably all you need. Humans only have two eyes and yet we can drive perfectly fine. The car has 8 eyes that can see at the same time plus radar plus sensors.

He would've added lidar in the very beginning if it was really a need.

Nobody knows what the real FSD need is yet, neither does Elon. He has made his opinion about lidar not needed for FSD clear, though. It is his stated view and Tesla's current strategy, sure. Tesla can not know if they can convince enough jurisdictions to accept vision only, for instance (which AP2 mostly is, the radar is very narrow front and ultrasonics are only useful for low-speed maneuvering basically).

For me the question at this stage is more about how much better FSD might be with 360 lidar and radar coverage compared to only vision. I can see it being very helpful on dark highways, for instance.

AnxietyRanger

Well-Known Member

What exactly can be done with lidar that cannot be done with two cameras?

Tesla does not have two cameras pointing everywhere, I'm not sure even the front vision is really very suited for stereo vision...

Anyway, I would imagine darkness is the big thing that lidar can handle and cameras can not to the same extent.
