paulp

Active Member

I meant to link this video by the same guys, sorry. But they are both closely related. Long and dry but informative.

Can you clarify: does he work for Tesla, or is he expressing his opinion on what others are doing?

No.

No, you can't clarify, or no, he doesn't work for Tesla?

Fair assessment, paulp. I don't usually agree with you, but here you've done your research and posted a well-balanced and well-thought-out opinion.

I researched it and no, he's not a Tesla employee, but he is a Tesla investor… so keep those messages positive.

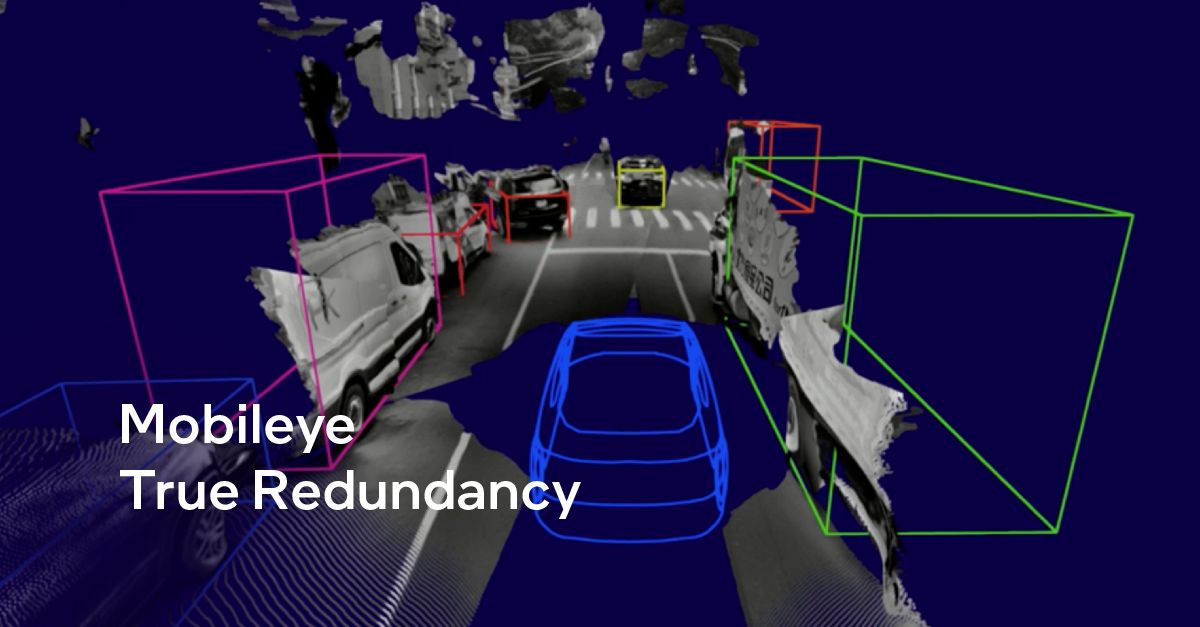

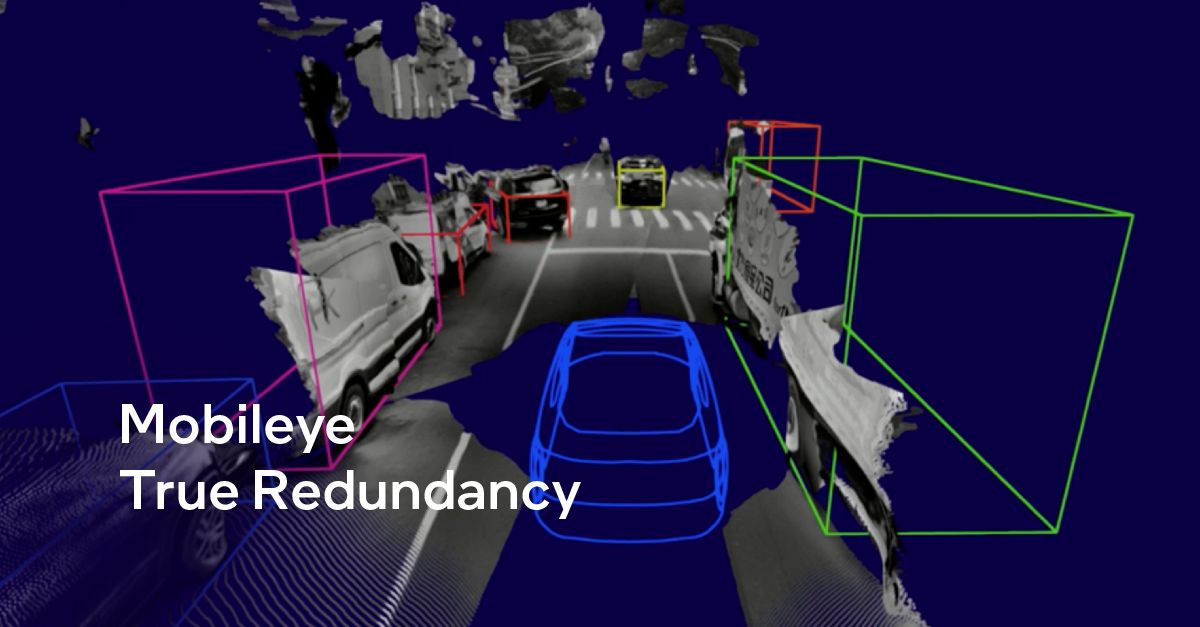

It's interesting that Mobileye, who created the first Tesla Autopilot (which was, and for many Tesla owners still is, very good) and are global leaders, also agree a vision-only system will work. They do, however, make it expressly clear that their system adds radar and/or lidar for redundancy. They don't have confidence in a system without redundancy. You can read more on their website.

Importantly, Mobileye are developing AV systems. They don't just have hypothetical chats.

> My take on this:
>
> If Tesla is perceiving depth from the size of the object (as opposed to binocular vision), then it can only make an assessment of its relative distance if it actually recognises the object and has a size comparison in its database. OK for cars, bikes, humans, traffic cones, bins, animals.
>
> But not OK for unexpected road hazards, e.g. a surfboard, a wardrobe, a pile of junk.
>
> Since the car is moving, Tesla Vision can perceive that it is closing on the object because its size would grow faster than the surroundings, but it would have no idea whatever how close it is, because it would not know the proper size of any object not in its database.
>
> That is where radar is indispensable.

That's absolutely not true. There is no database of objects to reference when the NN is making inferences. There are objects that are used in training, but this is purely for training. The resulting NN will have an intrinsic ability to predict distances no matter what the object is. Think about the vanishing line optical illusion; that's what it's replicating. Things like shadows, the horizon, etc. are all used internally by the NN to make the prediction.
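For anyone wanting to poke at the size-versus-distance argument, here is a minimal sketch of plain pinhole-camera geometry in Python. It is not a description of how Tesla's network works; the focal length, object heights and distances are made-up numbers, and both function names are hypothetical. It illustrates two things: with a known real-world size, distance follows directly from apparent size, and with an unknown size, the rate at which the object grows in the image still gives a time-to-contact, just not a distance.

```python
def distance_from_known_size(focal_px, real_height_m, image_height_px):
    """Pinhole model: image_height = focal * real_height / distance."""
    return focal_px * real_height_m / image_height_px


def time_to_contact(h_prev_px, h_curr_px, dt_s):
    """Time-to-contact from image growth alone (no real size needed).

    Uses tau = h / (dh/dt) with a simple finite difference.
    Returns infinity if the object is not growing in the image.
    """
    growth_px_per_s = (h_curr_px - h_prev_px) / dt_s
    if growth_px_per_s <= 0:
        return float("inf")
    return h_curr_px / growth_px_per_s


def apparent_height(focal_px, real_height_m, distance_m):
    """Forward pinhole projection, used here only to fake measurements."""
    return focal_px * real_height_m / distance_m


# Illustrative numbers only (assumptions, not measurements).
FOCAL_PX = 1000.0
DT_S = 0.1
Z_PREV_M, Z_CURR_M = 40.0, 38.0  # closing at 20 m/s

# Two objects of very different size at the same distances.
tau_small = time_to_contact(
    apparent_height(FOCAL_PX, 0.5, Z_PREV_M),
    apparent_height(FOCAL_PX, 0.5, Z_CURR_M),
    DT_S,
)
tau_large = time_to_contact(
    apparent_height(FOCAL_PX, 1.5, Z_PREV_M),
    apparent_height(FOCAL_PX, 1.5, Z_CURR_M),
    DT_S,
)

# With a known size, distance comes straight from apparent height.
z_known = distance_from_known_size(
    FOCAL_PX, 1.5, apparent_height(FOCAL_PX, 1.5, Z_CURR_M)
)

print(tau_small, tau_large, z_known)
```

Both objects give the same time-to-contact even though their sizes differ, because the real height cancels out of the ratio. If the object is stationary and the car knows its own speed, speed multiplied by time-to-contact gives a distance without any size lookup. Whether a trained network actually relies on this cue is a separate question that this sketch does not answer.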

> Computing power is not the issue. The cameras as they are currently released have no protection and no way of self-cleaning. They can be completely obscured, with the photons never reaching the receiver.
>
> One splash of mud, snow, bird droppings or a bug strike and the camera is offline. The cameras are not fit for purpose, if that purpose is a fully autonomous, unattended vehicle like a robotaxi.
>
> Assuming the robotaxi requires all 8 cameras to be working, there are 8 points of failure. If one camera becomes obscured, surely the vehicle would have to stop operating. It couldn't hand over to its passenger; it might not even have one. Or it might have a child, someone visually impaired, someone intoxicated, someone too old to keep their license. Would it stop in its current lane in the middle of the Harbour Bridge? Or keep driving with one eye closed (and now no radar) until it reached a street with parking? Would it be the owner's responsibility to find and rescue the vehicle and its passenger?

If one camera is obscured, it would safely pull over. The forward-facing cams are behind the wiper. The rider might be asked to clean the camera, and it will continue driving. Detecting obscured cameras and finding a spot to pull over is an amazingly simple problem compared with making 99.9999% of all autonomous km accident-free.
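The "8 points of failure" reasoning can be put into rough numbers. This is a minimal sketch, assuming each camera is obscured independently and with the same probability on a given trip; the 0.1% figure is purely illustrative, not a measured rate, and `p_any_failure` is a hypothetical helper.

```python
def p_any_failure(p_single, n_units):
    """Probability that at least one of n independent units fails."""
    return 1.0 - (1.0 - p_single) ** n_units


# Illustrative assumption: each camera has a 0.1% chance of being
# obscured on a given trip, independently of the others.
P_SINGLE = 0.001
N_CAMERAS = 8

p_trip_interrupted = p_any_failure(P_SINGLE, N_CAMERAS)
print(f"Chance at least one of {N_CAMERAS} cameras is obscured: "
      f"{p_trip_interrupted:.4%}")
```

For small probabilities the result is close to 8 times the single-camera figure, which is the point being made: requiring every camera to work multiplies the exposure. Real-world failures such as mud or snow are unlikely to be independent across cameras, so treat this as a back-of-envelope bound rather than a prediction.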

There is no way any government is going to approve autonomous vehicles on the basis that the occupant, who may be a 10-year-old child or a drunk, has to get out mid-journey and wash the cameras, especially if a competing brand can demonstrate triple redundancy that still works if any of the vision/radar/lidar fails, and when that redundancy doesn't come at a cost premium. Indeed, there is no autonomous, fast-moving, human-carrying device that I'm aware of without complete, full system redundancy. Even the humble lift car has two distinct methods to prevent freefall.

Also, which autonomous car carrying passengers and no driver has achieved 99.9999% accident-free around our streets? That's more an aspiration and a theory. It's not yet reality.

Why do you think the government would require 10-year-olds to have the ability to ride by themselves in a robotaxi before the robotaxi can be of significant societal value?

What happens if you stick your 10-year-old child in a taxi now and the driver has a heart attack? Should the government ban taxis?

Also, the triple redundancy of vision, lidar and radar isn't an overlap, as I've said. You can't get vision from lidar, but you can get lidar from vision. Even if your AV had all 3 sensors, if a camera fails you will need to clean that camera because it's the most important sensor. If that sensor being obscured isn't critical to driving, then having a vision-based failure mode is just as safe as supplementing with lidar/radar. So again, having lidar/radar doesn't fix anything and only makes it more complex and error-prone.

Cameras being obscured is a solvable problem via operational means. If a camera gets dirty, there are failure modes the system can fall back to, just like an elevator system. That's a strange argument to make, by the way: most people were incredibly negative and fearful towards automated elevators in the 1920s because they were misunderstood too. There was all kinds of FUD surrounding them.

Assuming 3 million HW3 cars on the road, Tesla can demonstrate whatever number the relevant authorities want. As I've said, this is about data, not your perception of safe/unsafe. Safety is an emergent property of the loss function. It would take Tesla a week/month/quarter to collect 1 million km of shadow-mode data in whatever city to validate performance.
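The numbers in this exchange can be sanity-checked with a little arithmetic. This is a rough sketch under stated assumptions: it reads "99.9999% accident-free km" as at most one accident per million km (my interpretation, not something the posts define), it uses the standard zero-failure bound (the "rule of three"), and the function names are hypothetical.

```python
import math


def km_per_car(target_km, fleet_size):
    """Distance each car must contribute to reach a fleet-wide total."""
    return target_km / fleet_size


def km_needed_for_zero_events(max_rate_per_km, confidence=0.95):
    """Accident-free km needed to support 'rate <= max_rate' at a confidence.

    With a true rate r per km, P(no events in n km) = (1 - r)^n.
    Solve (1 - r)^n <= 1 - confidence for n.
    """
    return math.log(1.0 - confidence) / math.log1p(-max_rate_per_km)


# Figures taken from the post: 3 million cars, 1 million km of data.
per_car = km_per_car(1_000_000, 3_000_000)

# Assumed reading of "99.9999%": at most 1 accident per 1,000,000 km.
needed = km_needed_for_zero_events(1e-6)

print(f"Each car contributes about {per_car:.2f} km")
print(f"Accident-free km needed at 95% confidence: {needed:,.0f}")
```

On these assumptions, 1 million km is a fraction of a kilometre per car, but about 3 million accident-free km would be needed to support the one-in-a-million claim at 95% confidence. Fleet size makes either figure quick to collect; what the sketch cannot settle is whether shadow-mode kilometres count as evidence in the way the calculation assumes.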

> Why do you think the government would require 10 year olds to have the ability to ride by themselves in a robo taxi before the robo taxi can be of significant societal value?

A 10-year-old is just an example of a passenger who couldn't be expected to clean the cameras. The car could just as easily be carrying no one at all, or a blind adult, etc.

> also the triple redundancy of vision, lidar, radar isn't an overlap as I've said.

Yes, it is an overlap.

Suggest you go and advise Professor Shashua.

Mobileye True Redundancy | Realistic Path for AVs at Scale

Mobileye's two separate self-driving systems, one based on cameras alone, and one based on radar-LiDAR alone, provide True Redundancy for safer, more scalable AV systems.

www.mobileye.com

In fact, this thread started in particular lamenting the loss of radar, which can bounce under the car in front and 'see' through fog.

Radar cruise control is mainstream and very reliable at avoiding the usual 'run up the back of someone' accident. Seems a shame to lose it.

> Who knows, maybe the robotaxi repeater modules will have a little wiper?

That's my point exactly.

> The Mobileye system requires HD maps in order to drive in lidar-only mode and will stop at every intersection if there are no other cars to give confirmation of traffic light control. Their approach is totally valid; it's just not as scalable. Mobileye readily admit driving with cameras only is not only possible but preferable. The lidar system is there to appease conservative OEM partners.

To be fair, you really don't have a clue how Intel Mobileye's new proprietary system works, as they simply don't publish their IP, and given their number of clients it seems they all think it's very scalable.

The lidar system's purpose is outlined on their website, and it seems Intel and the lidar partner have come up with a low-cost solution to it. They also make it clear that vision is the basis of the system.

> That's my point exactly.
>
> The website no longer says it, but it used to say that the current cars are capable of joining the "Tesla Network" or whatever their robotaxi service was called.
>
> I just don't think they ever will.
>
> Maybe the next gen cars will.

It's certainly possible that current-gen cars are stuck as an L2 system. However, the L2 capability would cover the entire driving task. I would be pretty happy with that outcome even if I can't send my car off to make me $20k per year as a robotaxi.

> I think the thing that will possibly kill it is not safety, but what happens when the car can't decide what to do in the many "edge cases". No problem when there's a "driver" but robotaxis? And again, no problem if there are one or two - but fleets of vehicles, stopping - safely - because they can't negotiate something?
>
> ShockOnT's correct - robotaxis aren't going to be on our roads on our current hardware and probably not in my lifetime.

Actually, this is not entirely true. A driver makes mistakes too. The worst accident Waymo have reported was when a safety driver ignored the fact that the car was slowing down for a green light and punched the accelerator. The car was actually slowing down because another vehicle was about to run a red light as cross traffic, and the autonomous system had correctly predicted this. In that case, it would have been better to simply not have a driver in the car at all.

> Short term pain for long term gain.
>
> A pseudo-lidar CNN will give AP a depth map that a radar unit could only dream of providing. It will also correlate pixels to depth (which it currently cannot do; there are separate radar responses and separate vision inputs).
>
> This means (as I've already explained in depth a few posts back) that the AP stack will be able to detect not only the car in front of the car but also a myriad of other partially occluded objects that may be stationary, have different radar reflectivity, etc.
>
> I find it so funny how people demand that AP improves constantly yet are not willing to accept the cost of this improvement.

Lidar is line-of-sight. It can't see through fog or bounce under the car in front to see an occluded second vehicle.
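For context on what "pseudo-lidar" usually means: a per-pixel depth map estimated from camera images is back-projected into 3D points, so that every pixel carries a depth. Here is a minimal sketch of that back-projection step in Python, assuming a simple pinhole camera. The intrinsics (`fx`, `fy`, `cx`, `cy`), the tiny depth map and the function name are illustrative assumptions, and the depth-estimating network itself is not shown. This is not Tesla's implementation.

```python
def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Back-project a depth map into camera-frame 3D points.

    depth_map: rows of depths in metres (None or <= 0 means no estimate).
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns a list of (x, y, z) tuples, one per valid pixel.
    """
    points = []
    for v, row in enumerate(depth_map):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # skip pixels with no usable depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny made-up depth map (metres); None marks a pixel with no estimate.
DEPTH = [
    [10.0, 10.0, None],
    [20.0, 20.0, 20.0],
]

cloud = depth_map_to_points(DEPTH, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
print(len(cloud), cloud[0])
```

Each output point comes from exactly one pixel, which is the pixel-to-depth correlation the quoted post refers to. Note that the geometry only covers surfaces the camera can actually see; it says nothing about objects fully hidden behind another vehicle, which is the limitation the reply raises.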

> Let's put it another way: if we ever see a fleet of robotaxis on AP3 I will buy you a coke.

A whole Coke? Are we talking a fancy glass bottle one? Such confidence.