YieldFarmer

Analytic.eth

> Just got offered this version on an MX, which as far as I know is not TeslaVision.

Yes, all cars are getting it now.

Assuming you're referring to this:

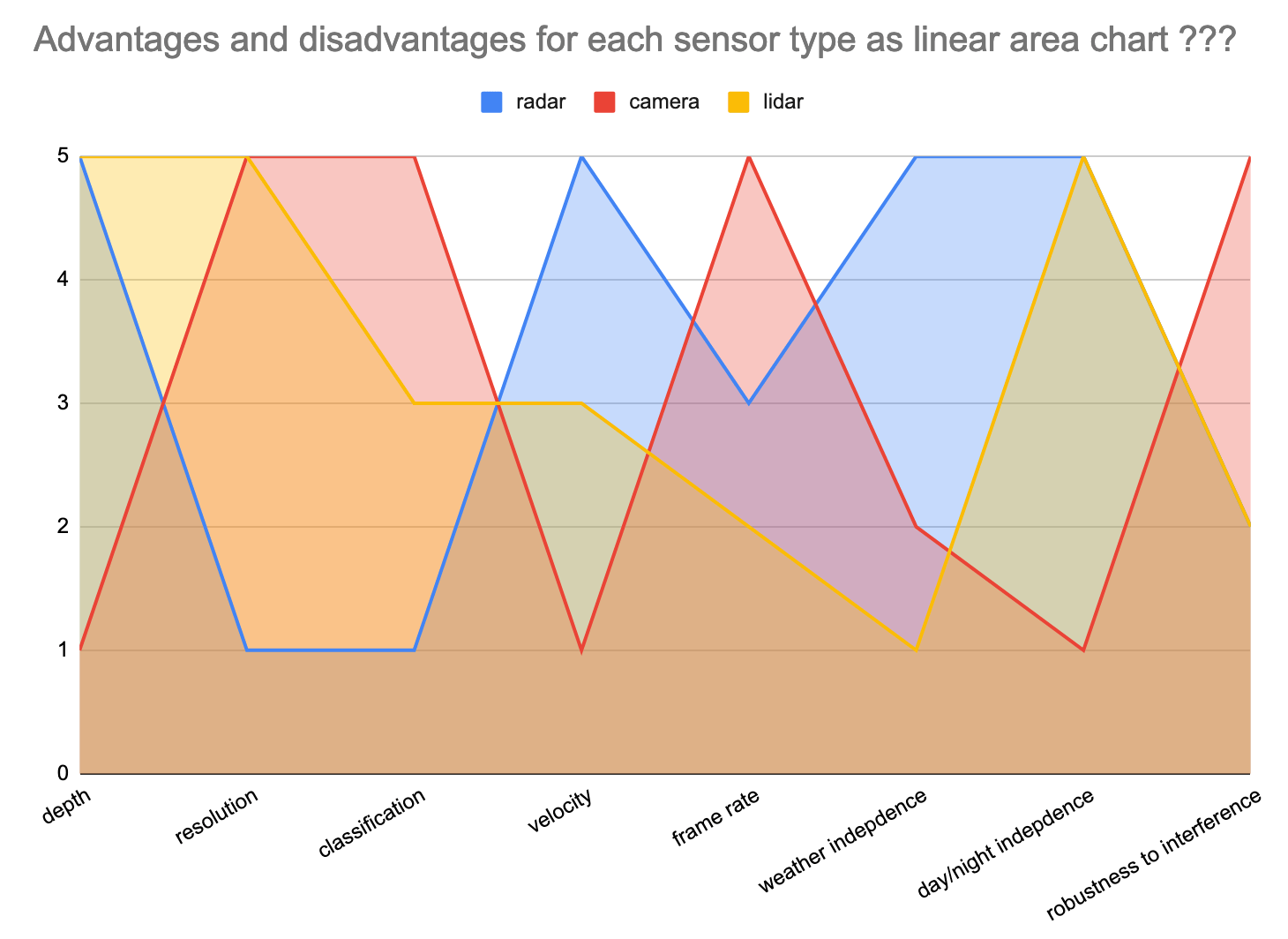

I put in the "???" to indicate that this is a poor way to show the data. To be explicit, the circular version of the visualization is also bad, and the ordering and "connecting" of the attributes doesn't make sense either. I hoped that would be more obvious in this linear version, because putting arbitrary values on the X axis that don't progress (the way 1, 2, 3 does) is bad chart design.

Except human cognition is not comparable to any current ML/AI techniques or technology. Not by any stretch of the imagination. The operation of the human brain is completely different from that of digital computers (and is still very poorly understood). The ability of one human brain to synthesize information far outclasses all the compute power on Earth today. It is not a logically supportable argument that, just because a human can do complex decision making based on visual input, a computer system definitely can as well. I mean, perhaps it can be used to drive a car at an acceptable level of safety, but so far there is absolutely no shred of evidence for that (and tons of reasons to be very skeptical).

I think that there isn’t an advantage to putting them in a chart like that, since it doesn’t really make sense to compare them.

Ultimately, we can achieve full self-driving with vision. That is a fact. People can drive without radar or lidar; we just need to figure out how to code that.

So the question is how lidar or radar can make the job of coding easier than using pure vision. Or, how lidar and radar can provide capability beyond what is optimally possible with cameras alone.

The problem with the chart is that it assumes all the advantages are fully exploitable. They aren’t, currently.

> Except human cognition is not comparable to any current ML/AI techniques or technology.

> I mean, perhaps it can be used to drive a car at an acceptable level of safety, but so far there is absolutely no shred of evidence for that (and tons of reasons to be very skeptical).

Well, two-camera vision can definitely be used, 100 percent. Whether humans can code for it is a different question. But it’s pretty obvious the “code” exists to make it possible.

> Sure, the "code" exists, but actually developing the "code" is no trivial matter. As discussed in this thread, we are very far from achieving human-like computer intelligence.

I never said otherwise. Straw men abound.

> Well, two-camera vision can definitely be used, 100 percent. Whether humans can code for it is a different question. But it’s pretty obvious the “code” exists to make it possible.

It does seem like loading self-driving code into a chimpanzee brain would be the way to go if we could do that. If you think about it, that would be far more likely to work than HW3, if it were technologically possible right now.

> Don't give Elon any ideas. He's already connecting monkey brains to computers with Neuralink.

Indeed he is.

> Except human cognition is not comparable to any current ML/AI techniques or technology. Not by any stretch of the imagination. The operation of the human brain is completely different from that of digital computers (and is still very poorly understood). The ability of one human brain to synthesize information far outclasses all the compute power on Earth today.

I used to think so, but now I'm not so sure.

Then, more recently, a computer (AlphaGo) consistently beat the world's best Go player, and along the way it even invented new moves that humans would never have thought of. It innovated as it learned to master this "silly human game".

Importantly, humans didn't teach this computer how to be a great Go player. It learned that largely on its own, from experience.

So, although it is hard to imagine at present, it seems reasonable to me that a computer like this could eventually learn to be the best player at the game "Driving Cars In Streets", given enough time to learn and no more sensors than humans have.

> I installed 2021.4.18.2 on my Model S a little while ago. Not sure what it all contains, but greentheonly indicated that the V3 autowipers from the .15.x releases are back in this build. Lines up with Elon's tweet about "one more production release this week".

Vision-only cars are on this build too, so you possibly have both radar (normal) and vision speed estimation (from .15.x).

> Camera-based Driver Monitoring System (DMS) is only active in radar cars for now, it seems.

So the "no_radar" is a feature. Probably will get charged for it.

> Some poor rain performance when the roads are wet / reflective here near the end:

Honestly, I’m starting to think speed is the biggest factor in it failing to keep Autopilot engaged. After I posted my Reddit thread, many people posted videos of it working in heavier rain than I had, but they weren’t going 70+ MPH like my run and that video.