CyberGus


This is dangerous, folks.

Still safer than owning a Chevy Volt.



How do you know this?

In fact this is what is already driving FSD 8.x beta, though I understand the camera integration is far from complete, including in V9, where the main change is removal of radar as a primary input (not a switch to BEV, which has already happened).


This would imply bird's eye view vision has not yet been fully deployed.

Second, Elon and others have mentioned that they are working on integrating more cameras into the BEV (implying that 8.x is already relying on a subset of the cameras for a BEV).

You are misinterpreting him:

Finally, Elon has tweeted that the main change in 9.x is the removal of the dependency on radar.

You get rickrolled if you follow through.

If I could do three laughing emojis, I would for this one. Classic.



But as I and others have noted, that basic BEV milestone was part of FSD from the start, and has always been present in the beta. The whole point of the last 18 months of work by the AI/NN team has been to change the NN to synthesize a top-down model from the combined camera views. And that's what it has done. You can see this in every single FSD video from the first public beta onwards. FSD would not have been possible without this.

Tesla is removing radar because of improvements in vision. Those improvements in vision are achieved through “significant architectural changes”.

Based on the things Elon and Andrej have said over the last two years, including things Elon has said recently, I speculate that one of the most significant changes from FSD beta v8.2 to v9.0 is the transition to an approach to vision that is fully top-down, bird’s eye view, natively 3D using a fully neural network approach to go from raw pixels from the eight cameras to the 3D, 360-degree bird’s eye view model of the world.

What exists in v8.2 seems to be some sort of hybrid or halfway point to the aspiration of natively 3D, fully NN-based computer vision that Elon and Andrej have described, such as in the clip in the OP of this thread.
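To make the "eight cameras into one 360-degree top-down model" idea a little more concrete, here is a minimal classical sketch. It is not Tesla's pipeline: it assumes each camera has already placed its detections on the ground plane in its own local frame, then rotates and translates them into a shared vehicle frame and marks cells of a single top-down occupancy grid. The camera names, mounting poses, grid size, and function names are all hypothetical. The fully NN-based approach described above would learn this mapping end to end rather than hand-code it.

```python
import math

# Hypothetical camera mounting poses in the vehicle frame: (x, y, yaw in radians).
# x points forward, y points left; the numbers are illustrative only.
CAMERA_POSES = {
    "front": (2.0, 0.0, 0.0),
    "left": (1.0, 0.9, math.pi / 2),
    "rear": (-1.0, 0.0, math.pi),
}


def camera_to_vehicle(point, pose):
    """Rotate and translate a ground-plane point from a camera's frame into the vehicle frame."""
    px, py = point
    cx, cy, yaw = pose
    vx = cx + px * math.cos(yaw) - py * math.sin(yaw)
    vy = cy + px * math.sin(yaw) + py * math.cos(yaw)
    return vx, vy


def fuse_to_bev(detections, cell_size=1.0, half_extent=20.0):
    """Mark occupied cells in a square top-down grid centred on the vehicle.

    detections maps a camera name to a list of (forward, left) ground-plane
    points expressed in that camera's own frame.
    """
    n = int(2 * half_extent / cell_size)
    grid = [[0] * n for _ in range(n)]
    for cam, points in detections.items():
        for point in points:
            vx, vy = camera_to_vehicle(point, CAMERA_POSES[cam])
            row = int((vx + half_extent) // cell_size)  # forward axis
            col = int((vy + half_extent) // cell_size)  # lateral axis
            if 0 <= row < n and 0 <= col < n:  # drop points outside the grid
                grid[row][col] = 1
    return grid
```

For example, `fuse_to_bev({"front": [(10.0, 0.0)], "rear": [(5.0, 0.0)]})` marks one cell 12 m ahead of the vehicle origin and one 6 m behind it in the same grid, which is the whole point of a shared top-down frame.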

the term "3D" here refers to the temporal aspect (x+y+time)

I don't know why you think 9.0 is some huge radical rethink of the basic FSD stack

You might think of it that way; others don't in this specific context. The specific references used in much of the FSD discussion (including the video you reference) use 3D to mean a BEV view that includes a velocity (i.e. time) component.

No. It’s the x, y, and z axes: width, height, and depth. When you add the dimension of time, it’s 4D.

So what? I pointed out that there is terminology confusion, but that's irrelevant. As I've already noted, whatever it's called, this basic technology is already present in the 8.x stack, as you can see if you examine the various beta videos. Aside from Elon's usual hype words ("significant", "fundamental"), the actual functional change in 9.x is not to add BEV, but to enhance it and remove any reliance on radar. If you are expecting 9.x to be a fundamental change, as you claimed in the OP, then you are likely to be disappointed. Sure, it will be better (I hope), but hyping it as a "showstopper" is dubious at best. Remember all those huge excited threads before the holiday update? People were claiming it would be V11 and would have vast new features, etc., etc., etc. What did we get? A few tweaks and a new game or two.

Detailed explanation of 2D vs. 3D vs. 4D:

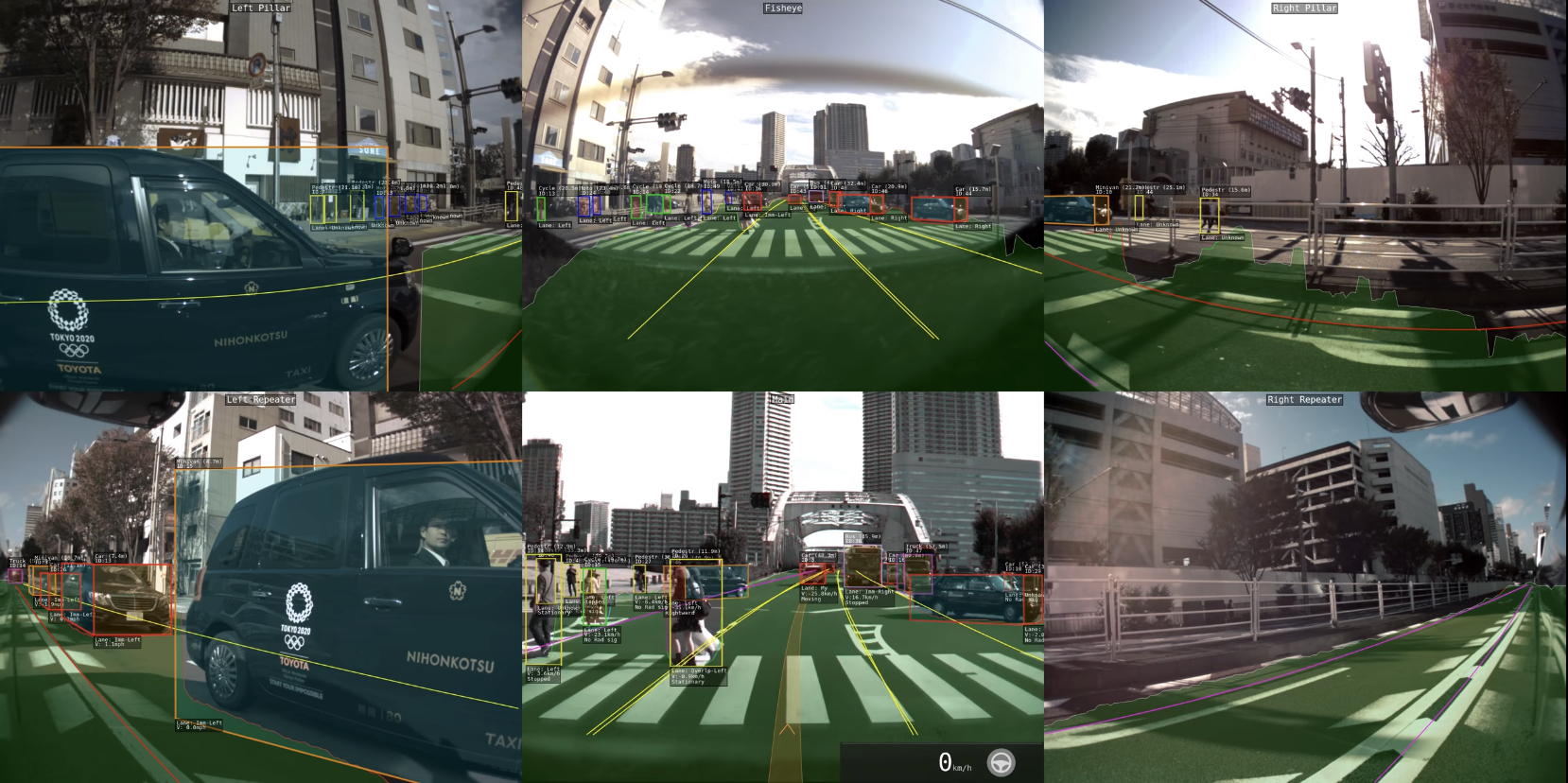

4D vision (teslamotorsclub.com)

This is 2D vision (image credit: greentheonly): projecting out 3D models from detections within individual 2D images. This is 3D vision (image source: Karpathy at CVPR 2020): the neural network directly outputs a 3D model from the 8 camera images. This is 4D vision (3D + time)...




If you watch the entire Karpathy talk and study the slides (and some of his tweets, which I don't have at hand), you will see that he pretty much admits that FSD without BEV really cannot be done. He shows with/without examples where (say) an intersection cannot be recreated without the NN generating a BEV. The BEV examples he shows, right down to the color scheme for the BEV view of roads, cars, etc., match pretty much exactly those in the 8.x FSD beta. Further, since he stated that BEV was required and that this was their primary focus in 2020, I think this is pretty conclusive that 8.x does indeed use BEV, because you can see it on the car screen.

The distinction between cameras vs NNs is not relevant here, and I'm not clear what "focal areas" means in Musk-ese, but no doubt we shall see when 9.x does indeed get released.

As for this 2D/3D stuff that seems to have you flustered: some other threads, and indeed some discussions elsewhere, started using "3D" to describe a primarily 2D system (i.e. a map) with velocity projections, which would have been better expressed as 2D+T or some such. I'm not disagreeing that we live in a world of three spatial dimensions and one time dimension (string theory notwithstanding), merely pointing out that some references to "3D" in other threads should be taken to mean "top-down map view plus velocity".
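To pin down the "2D+T" reading (a top-down map plus velocity, with no z coordinate at all, as opposed to x, y, z plus time), here is a minimal sketch that stores a tracked object's top-down (x, y) position per timestamp and derives a velocity from two observations. It assumes a simple constant-velocity model; the class and function names are hypothetical and not taken from any Tesla software.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BevObservation:
    """A tracked object's top-down (x forward, y left) position in metres at time t in seconds."""
    t: float
    x: float
    y: float


def estimate_velocity(prev: BevObservation, curr: BevObservation) -> tuple[float, float]:
    """Finite-difference velocity (m/s) between two observations of the same object."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("observations must be in strictly increasing time order")
    return (curr.x - prev.x) / dt, (curr.y - prev.y) / dt


def predict(curr: BevObservation, velocity: tuple[float, float], horizon: float) -> tuple[float, float]:
    """Extrapolate the top-down position `horizon` seconds ahead, assuming constant velocity."""
    vx, vy = velocity
    return curr.x + vx * horizon, curr.y + vy * horizon
```

An object seen at (10, 2) at t = 0 s and at (12, 2) at t = 0.5 s gets a velocity of (4, 0) m/s and a predicted position of (16, 2) one second later. Nothing in this state can say how high off the ground the object is, which is the sense in which such a representation is 2D+T rather than 4D.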

In fact, the NN is probably more accurately described as 2D+T or perhaps 2.5D+T, since I doubt it would (say) correctly place a car that was flying through the air. The visual system must ultimately establish a ground plane and then use the NN to place objects onto that plane at the correct (X, Y) locations; that, after all, is basically what a BEV is.
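The ground-plane placement described above can be illustrated with a classical flat-ground inverse perspective mapping. The minimal sketch below assumes an idealized pinhole camera with zero pitch and roll, mounted at a known height above perfectly flat ground; the focal length, principal point, mounting height, and function names are illustrative assumptions, not anything from Tesla's stack. It also shows why a flat-ground placement goes wrong for anything off the ground: in this example, a point 1 m above the road at 20 m back-projects to 60 m.

```python
def pixel_to_ground(u, v, f, cx, cy, cam_height):
    """Back-project pixel (u, v) onto flat ground; return (forward, left) in metres.

    Assumes a pinhole camera with zero pitch and roll, mounted cam_height metres
    above perfectly flat ground, with focal length f and principal point (cx, cy)
    in pixels. Returns None for pixels on or above the horizon row (v <= cy),
    whose rays never reach the ground in front of the camera.
    """
    if v <= cy:
        return None
    forward = f * cam_height / (v - cy)  # similar triangles: (v - cy) / f = cam_height / forward
    left = -(u - cx) * forward / f  # image u grows to the right, so right-of-centre gives negative left
    return forward, left


def point_to_pixel(forward, left, height_above_ground, f, cx, cy, cam_height):
    """Project a 3D point, given in the same camera-centred ground coordinates, into the image."""
    u = cx - f * left / forward
    v = cy + f * (cam_height - height_above_ground) / forward
    return u, v
```

With f = 1000 px, principal point (640, 360) and a 1.5 m mounting height, pixel (740, 460) maps to 15 m ahead and 1.5 m to the right (left = -1.5). Projecting a point 20 m ahead and 1 m above the road with `point_to_pixel` and feeding the result back through `pixel_to_ground` yields 60 m, because the flat-ground assumption has no way to represent height.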

(Oh, and that purple "cuboid" is, of course, strictly speaking a 2D coplanar abutted square and trapezoid on my computer screen.)