FYI, if you happen to be inclined to read anything I’ve written about Tesla, Waymo, or autonomy, I would recommend you ignore anything written before 2019. Not all of it is wrong, but some of it definitely is. I can’t even remember most of what I wrote 3 or 4 years ago.

Some of my old stuff I'm still proud of, but nobody's 1st article or 10th article is going to be as good as their 100th. The stuff I've written in 2021 is better than what I wrote in 2020, and what I wrote in 2020 is better than what I wrote in 2019.

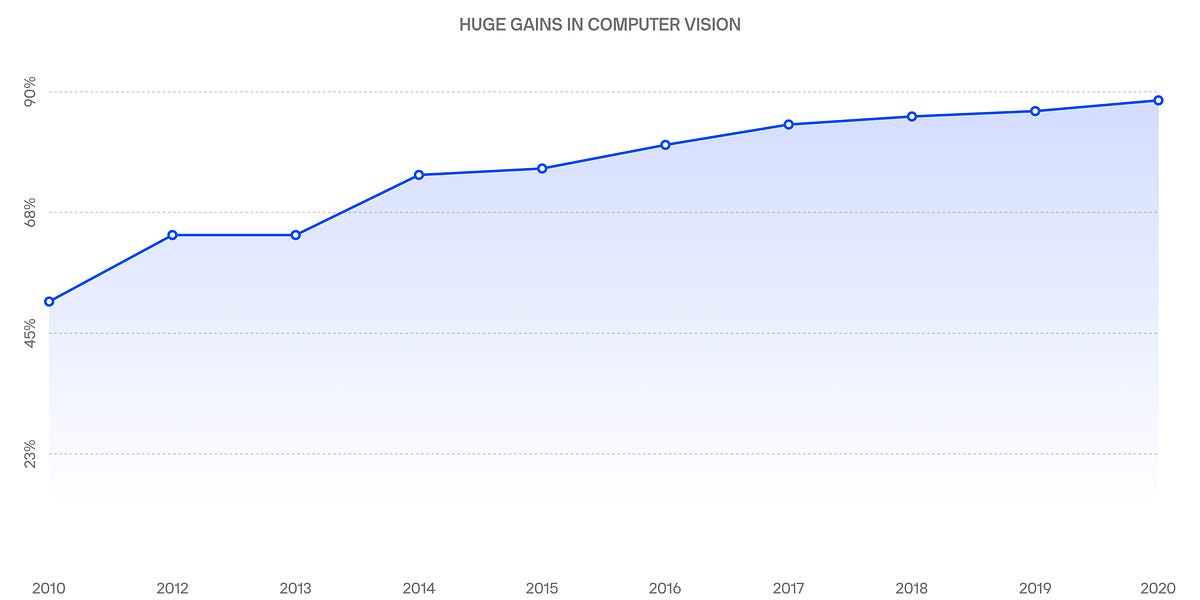

Also, autonomous driving is a fast-changing field, as are its constituent fields of deep learning, reinforcement learning, imitation learning, computer vision, etc. Articles I wrote 3 or 4 years ago would be hopelessly out of date even if they had been perfect at the time, which they were not. Not only have I learned a lot about autonomous driving in the last 4 years and corrected a lot of my mistakes, but the subject itself has also changed substantially.

If you’ve followed the research in these fields or watched technical presentations from major companies like Waymo, Cruise, Mobileye, Tesla, or the now-defunct Uber ATG (acquired by Aurora), you’ll notice significant changes from one year to the next in the approaches they’re using or exploring. Anything anyone's said or written about autonomy that’s even a year old risks being out of date.

These days, I’m not focused on defending my old work or having a track record of always being right. That’s ego bullshit. I just like robots, I like AI, I like learning, and I like making money. As an investor, I'd rather be wrong and rich than right and broke. Which would you rather have: the feeling of being right or a wheelbarrow full of cash?

So, I’m focused on trying to better understand what’s true as I learn, discuss, debate, research, talk to experts, commission papers, talk things out with friends, watch FSD videos, etc. If, in that process, I find reasons to believe (for instance) that Waymo is close to full autonomy and Tesla isn't, I'll move all the money I have in Tesla into Alphabet. I can do it in one minute on my brokerage app. The less emotionally invested I am in feeling like I'm right, the more flexible I'll be about changing my mind, and the better my financial investments will be served.

I'm not an unattached Buddha, but I've pretty much stopped writing publicly, I deleted Twitter a while ago, and on places like this I try to engage only with people who I feel are gonna have productive conversations that actually go somewhere and uncover new truths.

Some people are, like, scary obsessed with me, to the point that I had a cyberstalker who hounded me for about a year. The only reason I was able to get him to stop was that he carelessly revealed his identity. He was super creepy and did stuff that genuinely terrified me. I'm so relieved he didn't cover his tracks, or I might still be dealing with that today.

There are some deeply unwell people you'll encounter on the Internet who seem to pour a disturbing amount of their self-worth into being right about a specific topic and beating down people they perceive as wrong. Seeing how unhappy those people are, how much hate, anger, and bitterness they're filled with, and how f***ed up their mental health is was one reason I decided I had to let go of the desire to be vindicated or get public acclaim.

It's like meeting Gollum and seeing where the path you're on ends up if you keep putting on the Ring. Engaging too much in the dark aspects of the Internet can really warp your mind and your heart and f*** with you in a lasting way.