Dear camera savvy, driver assistance-knowledgeable and AP2HW insightful fellows. My head is full of questions about Teslas 8 x camera sensor suite. Such questions are being touched upon now and then in many different threads, but I would really like to read some more focused discussion on the topic. So:

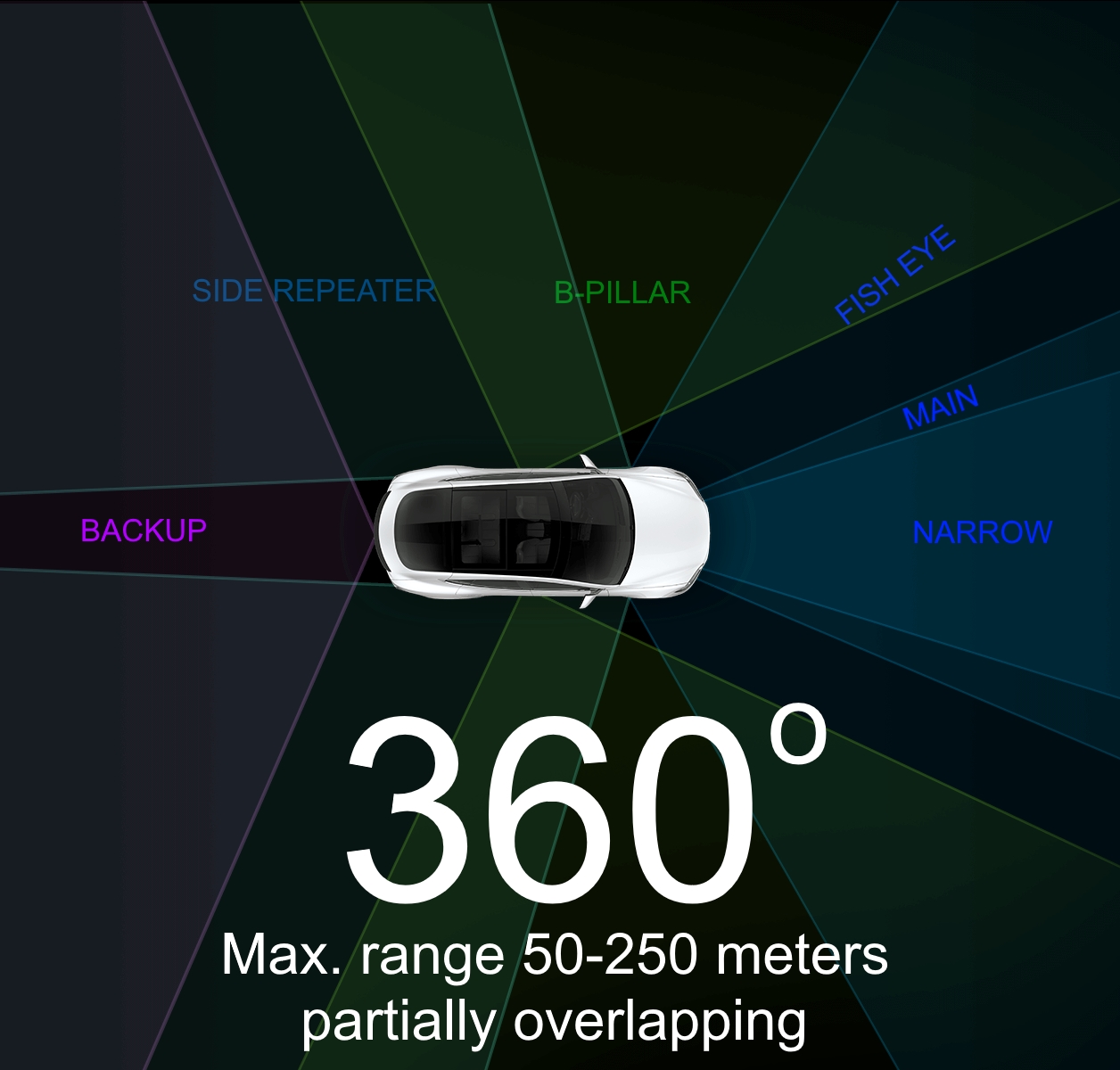

Forward cameras:

Narrow: 250 m (820 ft)

Main: 150 m (490 ft)

Wide: 60 m (195 ft)

Forward / side looking B-pillar cameras:

80 m (260 ft)

Rearward / side looking side repeater cameras:

100 m (330 ft)

Backup (rear view) camera:

50 m (165 ft)

And here's a quick sketchup of the camera vision, based on the official renderings:

Please, please don't destroy this thread with long discussions about radars, lidars, GPS, ultrasonic or other sensor types. OTOH, all camera talk must pass

- What capabilities and limitations can/should we expect from Teslas 8 x camera sensors, given available information about their physical position, their FOVs, range, colors, resolution etc.?

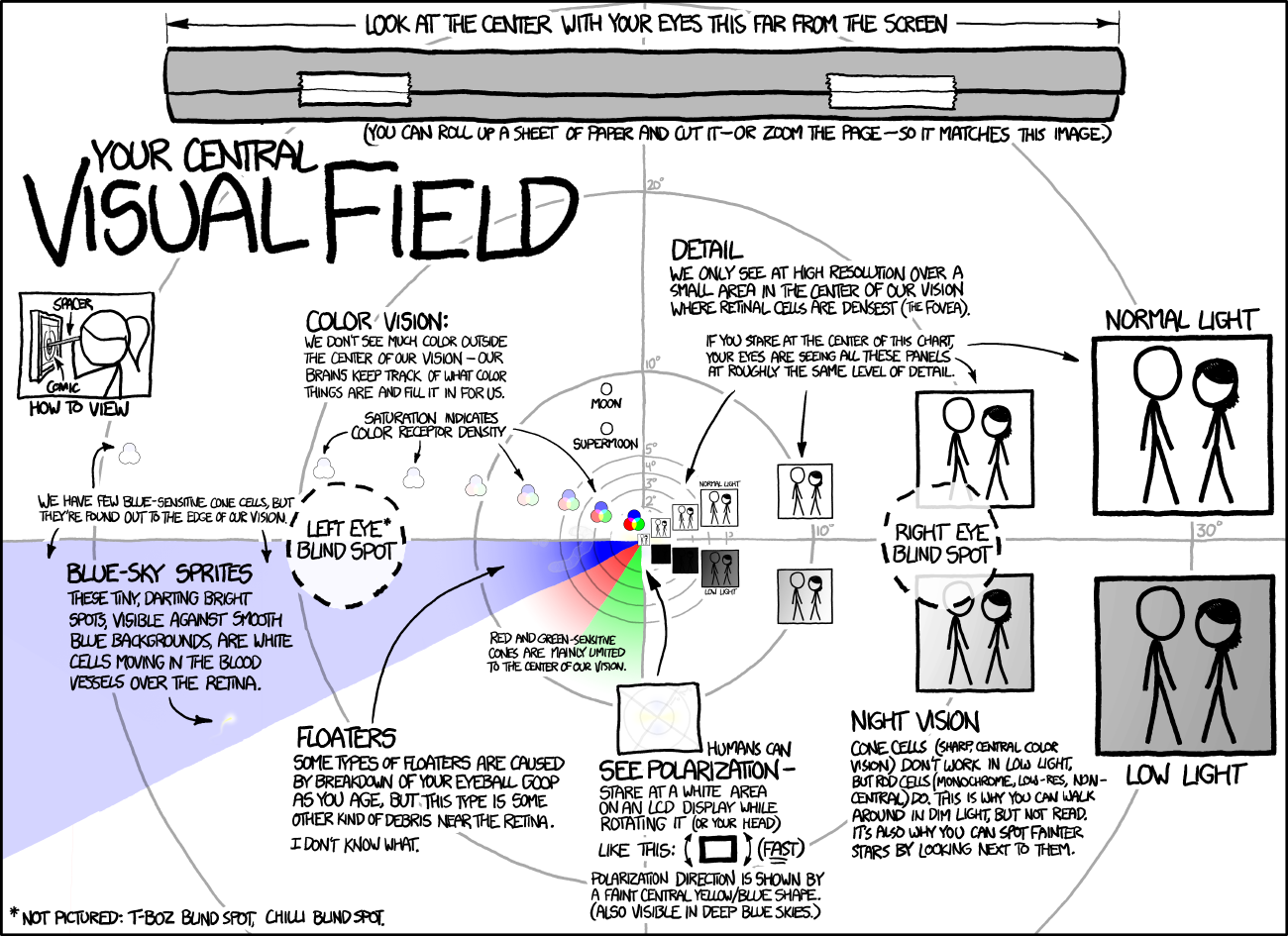

- How do the cameras compare to our human eyesight?

- How much of a difference does ambient light, artificial light (headlamps etc.) play for the camera vision?

- Are there certain scenarios where we can absolutely and definitely say that the cameras are of no help to the AP2-system?

- Also, what do you think about the physical positioning of the cameras on the vehicle? I'm also wondering about the so called "heating elements", the absence of water and dust wiping/blasting mechanisms, etc.

Forward cameras:

Narrow: 250 m (820 ft)

Main: 150 m (490 ft)

Wide: 60 m (195 ft)

Forward / side looking B-pillar cameras:

80 m (260 ft)

Rearward / side looking side repeater cameras:

100 m (330 ft)

Backup (rear view) camera:

50 m (165 ft)

And here's a quick sketchup of the camera vision, based on the official renderings:

Please, please don't destroy this thread with long discussions about radars, lidars, GPS, ultrasonic or other sensor types. OTOH, all camera talk must pass