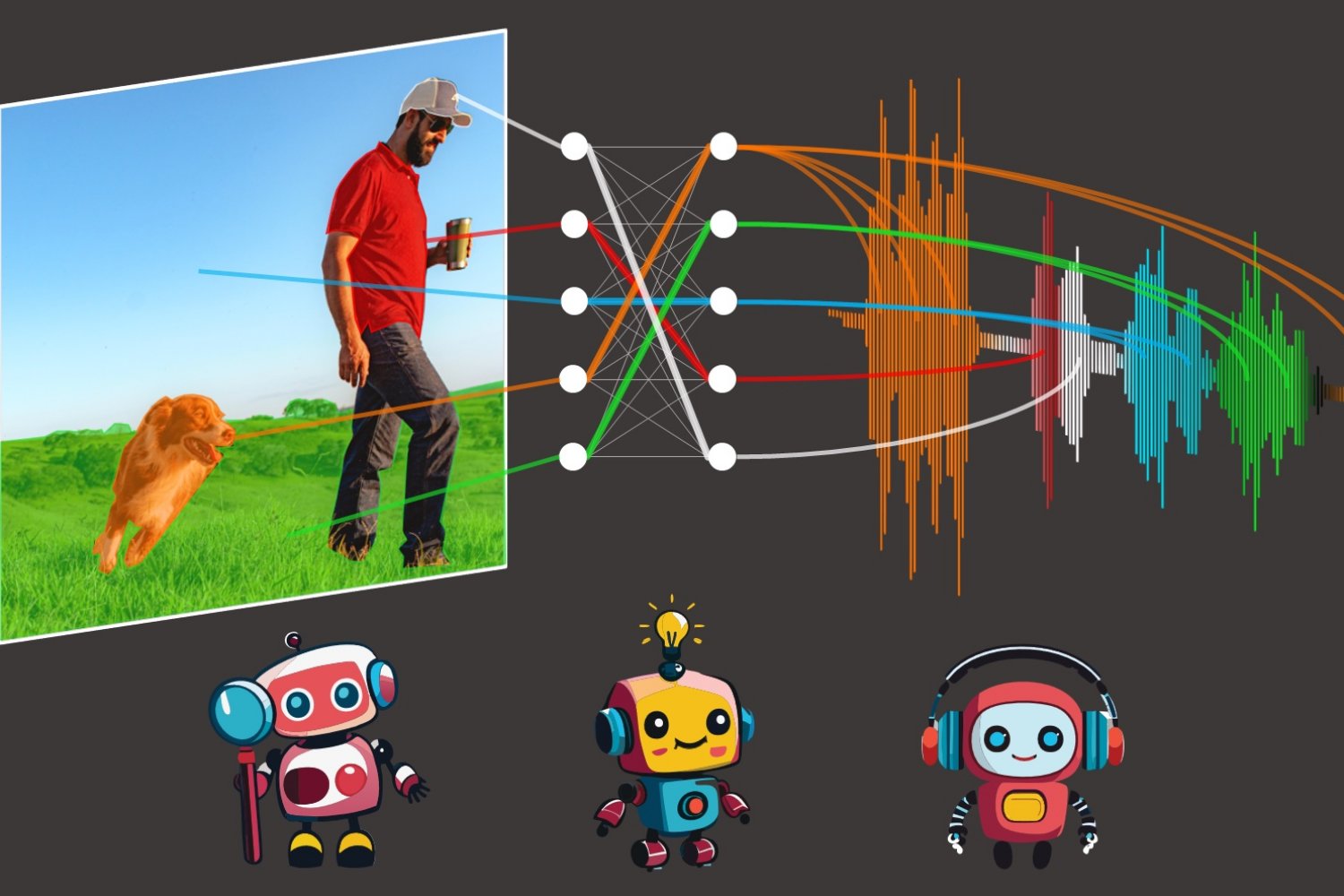

I guess when Elon talks about using 100M cars with HW4+ as a massive cluster, it's pretty much this, but scaled up:

High-latency, low-reliability network connections aren't very useful for large-scale training. There's a good reason that tightly integrated NVidia supercomputer boards, with coprocessors bound very closely together and fast interconnects linking the boards to each other, are preferred and most useful. That means purpose-designed, high-performance supercomputers, not loose conglomerations of cheap consumer hardware. This is hard-core NVidia tech and extremely difficult to implement.
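To put rough numbers on the latency/bandwidth point, here is a back-of-envelope sketch using the standard alpha-beta cost model for a ring all-reduce (the collective that sums gradients across workers in data-parallel training). The function name and every number below (model size, link latency, link bandwidth, worker count) are hypothetical assumptions chosen for illustration, not measurements of any real cluster or car fleet.

```python
def ring_allreduce_seconds(num_workers: int, message_bytes: float,
                           latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Alpha-beta cost estimate for one ring all-reduce of `message_bytes` per worker.

    A ring all-reduce runs 2 * (p - 1) communication rounds (reduce-scatter, then
    all-gather); each round pays one link latency and moves message_bytes / p bytes.
    """
    p = num_workers
    latency_term = 2 * (p - 1) * latency_s
    bandwidth_term = 2 * (p - 1) / p * message_bytes / bandwidth_bytes_per_s
    return latency_term + bandwidth_term


# Hypothetical numbers, for illustration only.
GRAD_BYTES = 1e9 * 2  # gradient of a 1B-parameter model stored as 16-bit floats
WORKERS = 1024

# Assumed datacenter interconnect: ~5 microsecond latency, ~50 GB/s per link.
datacenter = ring_allreduce_seconds(WORKERS, GRAD_BYTES, latency_s=5e-6,
                                    bandwidth_bytes_per_s=50e9)
# Assumed consumer uplink: ~50 ms latency, ~10 MB/s upload.
cars = ring_allreduce_seconds(WORKERS, GRAD_BYTES, latency_s=50e-3,
                              bandwidth_bytes_per_s=10e6)

print(f"datacenter: {datacenter:.3f} s per gradient sync")
print(f"cars:       {cars:.0f} s per gradient sync ({cars / datacenter:.0f}x slower)")
```

Under these assumptions one synchronization costs roughly 0.09 s on the datacenter links versus roughly 500 s over consumer uplinks, and that is paid on every single update. The model ignores reliability (workers dropping offline mid-step), compute/communication overlap, and tree or hierarchical reductions, so treat it as an order-of-magnitude illustration only.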

Stochastic Gradient Descent (and the variants everyone uses for training) is intrinsically serial: you want to score and backprop each new batch of examples starting from the model parameters as they stand after the previous update. You can try to distribute the gradient computation and add the results together, which partially works, but it still lowers training performance when those gradients were computed from where the model was some number of steps ago and the workers aren't synchronized.
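A toy illustration of the stale-gradient problem, not a model of real training: plain gradient descent on the one-parameter loss f(w) = ½·curvature·w², where each update applies a gradient computed from the parameters `delay` steps earlier, as an asynchronous worker would. The function name `delayed_gd` and the learning rate and delay values are hypothetical choices for illustration.

```python
def delayed_gd(lr: float, steps: int, delay: int,
               curvature: float = 1.0, w0: float = 1.0) -> list[float]:
    """Gradient descent on f(w) = 0.5 * curvature * w**2 with stale gradients.

    At step t the gradient is evaluated at the parameters from step t - delay
    (clamped to the start), but it is applied to the *current* parameters.
    """
    history = [w0]
    for t in range(steps):
        stale_w = history[max(0, t - delay)]      # what the worker actually saw
        grad = curvature * stale_w                # df/dw at the stale point
        history.append(history[-1] - lr * grad)   # applied to the up-to-date params
    return history


sync = delayed_gd(lr=0.5, steps=100, delay=0)
stale = delayed_gd(lr=0.5, steps=100, delay=4)
print(max(abs(w) for w in sync[-20:]))   # essentially zero: converged
print(max(abs(w) for w in stale[-20:]))  # far above the starting value 1.0: diverged
```

With the same learning rate, the synchronous run converges geometrically while the 4-step-stale run oscillates with growing amplitude. Shrinking the learning rate brings the stale run back under control, but only by making every update smaller, which is the lost training performance described above.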

By most measures, training progress is roughly proportional to the number of gradient updates you can make, meaning serial gradient updates. Wide distribution effectively increases the minibatch size (while also adding some noise, plus possible latency and dead time), but past some point a bigger minibatch stops improving performance compared with simply taking more updates.

Given a trillion examples to get through, more gradient updates on smaller batches (up to some point) are preferable to the other extreme: one massively distributed batch of a trillion examples with all the gradients added up.
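A deterministic toy for this fixed-budget tradeoff, under stated assumptions: SGD on f(w) = ½·curvature·w², where each per-example gradient carries independent noise of variance `noise_var`, so averaging a minibatch of B examples cuts the noise variance to `noise_var / B`. A fixed budget of examples then buys `budget // B` updates. Instead of sampling random noise, the sketch tracks the expected squared parameter exactly via E[w′²] = (1 − lr·curvature)²·E[w²] + lr²·noise_var/B. Holding the learning rate fixed across batch sizes is a simplifying assumption (in practice it would be retuned), and `expected_loss` and all numbers are hypothetical.

```python
def expected_loss(batch_size: int, budget: int = 10_000, lr: float = 0.1,
                  curvature: float = 1.0, noise_var: float = 1.0,
                  w0_sq: float = 1.0) -> float:
    """Expected loss E[f(w)] after spending `budget` examples in minibatches of `batch_size`."""
    steps = budget // batch_size                    # bigger batches -> fewer updates
    contraction = (1.0 - lr * curvature) ** 2       # how much one update shrinks E[w^2]
    step_noise = lr ** 2 * noise_var / batch_size   # averaging B examples divides noise by B
    mean_sq = w0_sq                                 # E[w^2], starting from w0^2
    for _ in range(steps):
        mean_sq = contraction * mean_sq + step_noise
    return 0.5 * curvature * mean_sq


for b in (1, 10, 100, 1_000, 10_000):
    print(f"batch {b:>6}: {10_000 // b:>6} updates, expected loss {expected_loss(b):.2e}")
```

With these numbers the expected loss bottoms out at an intermediate batch size (100 here): tiny batches spend their updates fighting noise, while the single all-examples batch takes just one step and barely moves.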

An even clearer example: weather prediction and fluid mechanics. Spending 100x the compute to predict the weather in more detail starting from "now" is no substitute for evolving the prediction forward in physical time, one small future step after another, where each step depends on the one before it. There's a reason supercomputing needs very low-latency, high-reliability links between nodes sitting right next to each other.
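A minimal sketch of why time-stepped simulations need tight interconnects, assuming a 1D diffusion equation as a stand-in for a real weather or fluid model. The grid is split across hypothetical workers (domain decomposition), and before every single time step each worker must receive its neighbours' edge cells (a halo exchange). The function names and parameters are illustrative, not taken from any real weather code.

```python
def diffuse_reference(u, alpha, steps):
    """Explicit finite-difference solver for u_t = alpha * u_xx on one grid, ends held fixed."""
    u = list(u)
    for _ in range(steps):
        new = u[:]
        for i in range(1, len(u) - 1):
            new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
        u = new
    return u


def diffuse_decomposed(u, alpha, steps, num_chunks):
    """Same solver with the grid split across `num_chunks` hypothetical workers.

    Returns (final grid, number of halo messages exchanged).
    """
    n = len(u)
    bounds = [k * n // num_chunks for k in range(num_chunks + 1)]
    chunks = [list(u[bounds[k]:bounds[k + 1]]) for k in range(num_chunks)]
    messages = 0
    for _ in range(steps):
        # Halo exchange: each worker needs its neighbours' edge cells from THIS step
        # before it can advance. 0.0 is a dummy where there is no neighbour; the
        # global end cells are held fixed, so that value is overwritten below.
        lefts = [0.0] + [chunks[k - 1][-1] for k in range(1, num_chunks)]
        rights = [chunks[k + 1][0] for k in range(num_chunks - 1)] + [0.0]
        messages += 2 * (num_chunks - 1)
        new_chunks = []
        for k, cells in enumerate(chunks):
            ext = [lefts[k]] + cells + [rights[k]]
            new = [ext[i] + alpha * (ext[i - 1] - 2 * ext[i] + ext[i + 1])
                   for i in range(1, len(ext) - 1)]
            if k == 0:
                new[0] = cells[0]      # fixed left boundary
            if k == num_chunks - 1:
                new[-1] = cells[-1]    # fixed right boundary
            new_chunks.append(new)
        chunks = new_chunks
    return [x for c in chunks for x in c], messages


grid = [0.0] * 20
grid[10] = 1.0  # a spike in the middle of the domain
ref = diffuse_reference(grid, alpha=0.25, steps=50)
par, msgs = diffuse_decomposed(grid, alpha=0.25, steps=50, num_chunks=4)
print(max(abs(a - b) for a, b in zip(ref, par)))  # same answer as the single-grid run
print(msgs)  # 300: six messages on every one of the 50 steps
```

The decomposed run reproduces the single-grid answer, but only because every worker waits for fresh neighbour data on every step. Adding more workers adds more per-step messages rather than removing the serial chain of time steps, so a slow or flaky link stalls the whole simulation.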

In a nutshell, Elon is bullshitting, trying to sell cars and pump the equity price by implying those 100M cars will be of any significant use for training. That's a "No". Same goes for Apple if they're implying the same thing.

His newly acquired $56 billion, on the other hand, which he took for himself away from the shareholders, could have been a capital raise for the company: his scientists could have bought and used some very powerful and actually useful training supercomputers, and the shareholders would have owned them.

Tesla - Elon + $56 billion capital >> Tesla + Elon - $56 billion.

You don't see Zuckerberg demanding more free equity from the board for himself, even though by funding PyTorch and giving it away for free he has, in truth, done far more for AI than any other businessman. And TBH NVidia owes Meta for that too, since PyTorch made NVidia the preferred and best-supported target.