Welcome to Tesla Motors Club

AP2.0 Cameras: Capabilities and Limitations?

- Thread starter lunitiks

JeffK

Well-Known Member

Of course there is another version of AP being developed. It became obvious after 2.0 was released, given how crude it was compared to the Tesla AP demo video.

Unless that demo was based on nVidia code.

Tesla was using Mobileye code before, and they made the best-working system of all the cars that used it.

I would see nothing wrong with Tesla reusing nVidia 'code' now. Or are you implying that Tesla cheated us with a system made entirely by nVidia?

Personally, I am of the opinion that Tesla sees nVidia as a competitor (just like Mobileye), and I expect that relationship to come to an end as well. If you recall, EM said that their code can run on AMD hardware too. Because of that, I do not think that Tesla depends heavily on nVidia's code.

Eh, platforms come with demo/reference code; I see absolutely nothing unusual about throwing that out and rewriting it.

Every Cortex-M3 part that you buy comes with reference code for being a pedometer. Yet I bet Fitbit has a completely different algorithm on their devices and probably didn't use any of that code.

When I worked on digital motor control a few years ago, the chip that we selected also came with complete reference code for doing space-vector PWM of 3-phase induction motors. We played around with it for maybe a week before undertaking a full rewrite as well. In our case, the "reference platform" was written by a grad student / intern of the chipmaker, was full of nonsensical comments and horrible cut-and-paste code, and parts of the underlying control algorithm were just plain wrong. But it did spin a motor, and it convinced us that the SoC's hardware was capable of getting that job done.

And I think that's the role of demo/reference code: it helps a chipmaker sell their product to their customers. Sure, you can say "yeah, in theory this thing consumes little power and is clocked fast enough to do FFTs for a fitness wearable" or "yeah, it has 6 PWM outputs and 6 ADC inputs, I think I can turn it into a motor controller"... but nothing is as convincing as actually seeing some demo code in action. Even if the right thing to do is to rip apart the demo code and start over.

I'm only saying that if Tesla used nVidia code in the demo but has decided not to use nVidia code in FSD, then we cannot say anything about Tesla's FSD progress based on that demo.

Agreed. All we can say is:

(1) At 25mph it looks like the system has enough processing power to drive itself and avoid various obstacles like pedestrians and cross traffic.

(2) It looks like it has cameras pointed at the right angles to deal with situations like stop signs.

mitchellh3

Member

One of the early AP2 releases showed stop signs in the IC - this quickly disappeared

I've actually never known or heard this. Do you have a link to a thread or screenshots of this? I'd be interested to see.

Sure!

Via Electrek:

First indicator that Tesla Autopilot 2.0 can detect stop signs

mitchellh3

Member

Thanks! Interesting.

And just in case it wasn't clear: I didn't doubt you; I've just always been curious about how they might do the UI for stop signs and signals. Although this may not be the final UI in any way, I was curious what an iteration looked like.

One of the early AP2 releases showed stop signs in the IC; this quickly disappeared. I can't imagine that they would build that and not use it. IMO, it's evidence of a second, more feature-rich development stream.

That reminds me... @verygreen, have you found the ap image library? Anything interesting in there?

S4WRXTTCS

Well-Known Member

What do you mean by the "ap image library"? The trained neural net does not really have an "image library"; it has some internal features it uses to categorize images in unknown ways, possibly looking at totally wrong things that happen to work for the training set of images (that's how people got various non-car things in their garage to show up as cars and such).

If you mean the IC images to be shown on the instrument cluster, I guess those could be easily seen, but they mean nothing as long as AP does not instruct IC to show them.

What, you don't have access to the entire training set used for it? Or at the very least the label files?

That's what I figured he meant. Of course, it says nothing about whether those features are coming soon, but at least it hints at what kinds of features are coming or were considered at one point.

As someone with relevant industry experience, I must say, your analysis shows that Tesla does a fairly poor job of hiding unreleased features and internal-facing features from customer-shipping software compared to the consumer electronics world.

That's because process just gets in the way of fast development; we have covered this in some other threads already.

HAHAHA. Fast, hasty, tomato, tomahto...

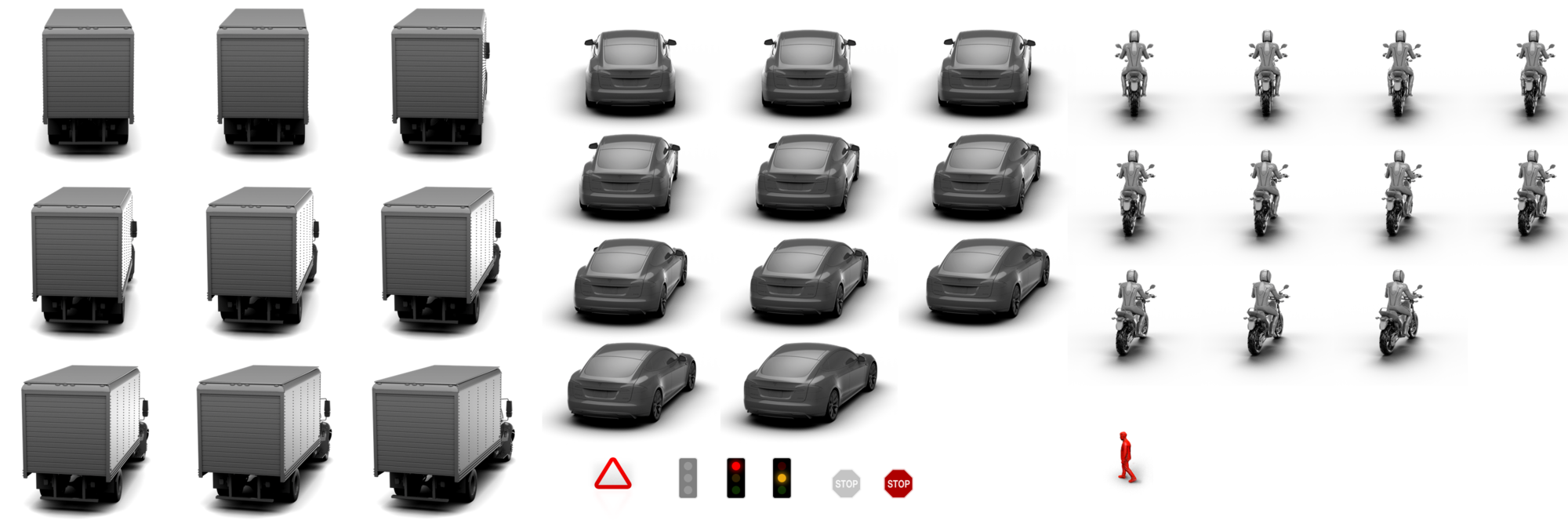

These are all the possible Autopilot IC pictograms in the 17.28.27 firmware (also known as the c528869 "developer version"; did somebody fat-finger something so that it started to show the git hash of the build instead of the version?).

This does not include the background or the color bar for the side collision warnings.

Very cool! It sure seems to hint at traffic sign/signal recognition!

This is exactly what I meant - thanks!

Traffic lights

Interesting. This is the first concrete evidence of signal alerts coming that I've seen (though someone mentioned a little while ago that I apparently missed a thread about an early AP2 version showing the gray stop sign).

Any idea what the triangle is going to be used for? It's upside down for the US yield sign it otherwise resembles - I think I've seen some EU caution signs that look like that?
