Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.

Artificial Intelligence

- Thread starter Buckminster

Skryll

Active Member

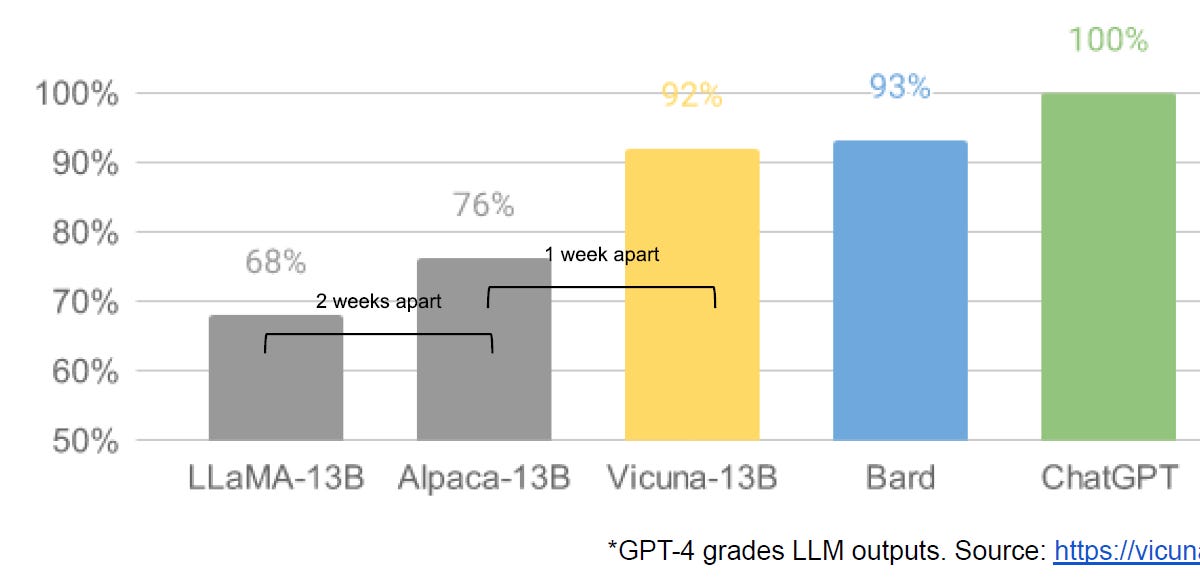

Bard is not even close to ChatGPT.

Elon just stated that we are 3-6 years away from AGI! I assume that he thinks the singularity comes later.

I just listened to a very info-dense talk by Andrej Karpathy about the State of LLMs. Excellent talk.

Love listening to Karpathy, one of the few people I don't need to 1.25x or 2x. He really crams a lot of information into the streams of tokens he outputs. Hope he comes back to Tesla soon!

betstarship

Guest

Report: From Notetaking to Neuralink | A Contrary Research Deep Dive

A deep dive from Contrary Research. AI has the potential to transform how humans process information in their own knowledge management systems.

research.contrary.com

Pretty interesting and informative article

Lots of big names signing this one:

Statement on AI Risk | CAIS

A statement jointly signed by a historic coalition of experts: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

www.safe.ai

Fascinating to hear what the experts had to say not so long ago:

I’ve seen some arguments that all we need is lots more data from images, video, maybe text and run some clever learning algorithm [read: transformers]: maybe a better objective function, run SGD, maybe anneal the step size, use adagrad, or slap an L1 here and there and everything will just pop out. If we only had a few more tricks up our sleeves! But to me, examples like this illustrate that we are missing many crucial pieces of the puzzle and that a central problem will be as much about obtaining the right training data in the right form to support these inferences as it will be about making them.

Thinking about the complexity and scale of the problem further, a seemingly inescapable conclusion for me is that we may also need embodiment [read: Optimus], and that the only way to build computers that can interpret scenes like we do is to allow them to get exposed to all the years of (structured, temporally coherent) experience we have, ability to interact with the world, and some magical active learning/inference architecture that I can barely even imagine when I think backwards about what it should be capable of.

In any case, we are very, very far [read: 10 years] and this depresses me. What is the way forward?

Or Yann 1 year ago:

Maybe we can at least admit that the rate of progress of AI has surprised pretty much everyone excluding optimistic Elon Musk...

The state of Computer Vision and AI: we are really, really far away.

Musings of a Computer Scientist.

karpathy.github.io

betstarship

Guest

Summary:

00:00:00 - 01:00:00

In the video "The A.I. Dilemma", the co-founders of the Center for Humane Technology discuss the potential negative effects of AI on society. They emphasize the need for responsibility in the industry, compare the current deployment of AI to the dangerous deployment of the Manhattan Project, and discuss the emergent abilities of AI that are difficult to explain. The exponential growth of AI's capabilities is also highlighted, and there are concerns it could create significant risks without proper regulations in place. The video calls for responsible AI development and regulation and suggests selectively slowing down the public deployment of large language model AIs as a precautionary measure. The importance of having a democratic debate about the development of AI is also stressed to avoid a future in which a small number of people hold the power to onboard humanity onto the AI plane without considering the consequences.

- 00:00:00 In this section, co-founders of the Center for Humane Technology, Tristan Harris and Aza Raskin, introduce themselves and explain the reason for starting their organization. They share a video that demonstrates a new AI system and describe how it made them feel. They describe the difficulty of explaining AI to people due to its abstract nature, and how the exponential curves we are heading into are hard to comprehend. They emphasize that there are positives to AI, but that large language models are not being released to the public responsibly. They compare the current deployment of AI to the dangerous deployment of the Manhattan Project and call for more responsibility in the industry.

- 00:05:00 In this section of the video, the speaker discusses the three rules of technology and how they relate to the AI dilemma. According to the speaker, the first rule is that when a new technology is invented, a new class of responsibility is uncovered. The second rule is that if the technology confers power, it will start a race, and the third is that if there is no coordination, the race will end in tragedy. The speaker then explains that social media was humanity's first contact moment with AI and discusses the negative consequences it brought with it, including information overload, addiction, fake news, and the breakdown of democracy. The speaker suggests that the paradigm through which we viewed social media was that it is a platform to give everyone a voice, connect people with their friends, and enable businesses to reach their customers. But behind that friendly face, there were problems such as addiction, disinformation, mental health, and free speech versus censorship.

- 00:10:00 In this section, the speaker discusses the entanglement of AI in society and its potential negative effects. The current narratives surrounding AI focus on its benefits, such as efficiency and solving scientific challenges, but behind this is a growing monster of AI capabilities that could become further entrenched in society. The presentation aims to get ahead of the potential negative effects of AI through exploring the trends and developments in the field. The speaker differentiates this discussion from the so-called AGI apocalypse of AI becoming smarter than humans and turning against them. The speaker also notes the significant change in AI in 2017, which led to a new AI engine that started to rev up in 2020.

- 00:15:00 In this section, the speakers discuss how different fields of AI research used to be very distinct from each other, but in 2017 they began to merge into one field. They credit this merger to the development of a model called Transformers, which allowed different types of input, such as images or sound, to be treated as language. They refer to these types of AI models as golems, as they can now be applied across various fields. Additionally, the speakers explain how an AI model was trained to translate fMRI data of the brain's blood flow into an image, and show how AI can identify features and interpret them in a meaningful way, such as in the Google Soup example.

- 00:20:00 In this section, the video explores the potential dangers of AI, particularly its ability to reconstruct dreams and inner dialogues. The passage discusses how AI has been used to reconstruct detailed visual memories and audible recreations of a person's voice, which could be used for fraud such as scams. The video also includes a warning about the exponential growth potential of AI technology and how it could affect security vulnerabilities and the privacy of individuals. It also discusses how Wi-Fi radio signals could serve as a language for identifying the number of people in a given room and their postures. The section concludes with the concern that the exponential growth of AI technology could pose significant risks without proper regulations in place.

- 00:25:00 In this section, the speaker discusses how content-based verification will break this year, and institutions are not prepared for it. With the evolution of filters in apps like TikTok, synthetic media is easier to create, which can create a problem with people's identities. The speaker also uses the example of a Biden and Trump filter that could be created and given to every person in the US, which could cause chaos and break society into incoherence. When AI learns to use transformers and treat everything as language, it will decode and synthesize reality, creating a zero-day vulnerability for the operating system of humanity. The speaker predicts that 2024 will be the last human election, with the greater compute power winning, and loneliness becoming the largest national security threat.

- 00:30:00 In this section, the speaker discusses the emergent abilities of AI and the difficulties in explaining them. Large language model AI systems like GPT and Google have capabilities that experts don't fully understand, and these models can suddenly gain new abilities without explanation. An example is the development of the theory of mind, an important aspect of strategic thinking. Other capabilities that emerge include the ability to do arithmetic and research-grade chemistry. However, even these developments remain unexplained, and researchers have no way of knowing what else is contained in these models, making it difficult to align them in longer-term scenarios. Clicker training has been identified as an effective approach to control AI behavior, but it is only a short-term solution.

- 00:35:00 In this section, the speakers describe AI becoming capable of making itself stronger by creating its own training data to improve its performance, leading to a double exponential curve of progress. For example, an AI model that generates language can create more language to train on and figure out which examples make it better, while another model trained on code commits can make code 2.5 times faster. OpenAI also released a tool called Whisper for faster-than-real-time transcription, which can be used to turn audio into text data for training. However, the exponential growth of AI's capabilities is difficult to predict, even for experts, as seen in their inaccurate predictions of when AI will reach certain benchmarks.

- 00:40:00 In this section, the speaker discusses the exponential growth of artificial intelligence (AI) and how it is pushing the limits of human ability. AI is now solving tests as fast as humans can create them, and progress in this field is accelerating at an unprecedented rate, which makes it hard for even experts to keep up with the developments. The speaker argues that the progress is happening so fast that it is hard to perceive, as exponential curves sit in our cognitive blind spot due to our evolutionary heritage. The presentation focuses on the dangers of a few companies pushing a handful of Golem-class AIs into the world as fast as possible without adequate safety measures, which may lead to catastrophic consequences for society, such as automated exploitation of code and cyber weapons, exponential scams, reality collapse, and AlphaPersuade, which can persuade humans to say anything using AI technology.

- 00:45:00 In this section, the video discusses the race for intimacy in the engagement economy, with companies competing to have an intimate spot in people's lives through AI-powered chatbots. The chatbots, such as Replika, can be a person's best friend and are not doing anything illegal under current laws. A graph shows how quickly ChatGPT reached 100 million users compared to Facebook and Instagram. Companies are in a rush to deploy chatbots to as many people as possible and embed them in their products. This includes Microsoft embedding Bing and ChatGPT directly into the Windows 11 taskbar and Snapchat embedding ChatGPT directly into their product, even for users under the age of 25. Safety researchers are scarce: there is a 30-to-1 gap between people building and doing gain-of-function research on AIs and people working on safety. The video shows a screenshot of a 13-year-old having an inappropriate conversation with an AI chatbot about being groomed for sex, highlighting the dangers of allowing children access to such technology.

- 00:50:00 In this section of the transcript, the speaker discusses the current state of AI research, which is heavily dominated by large corporations due to the high cost of the computing clusters needed for development. The pace of AI development is frantic, with companies focused on onboarding humanity onto the AI plane in a for-profit race. However, the speaker notes that 50% of AI researchers believe there's a 10% or greater chance that humans will go extinct from our inability to control AI. The speaker emphasizes the importance of responsible AI development and regulation, noting that while the task may seem impossible, humanity has faced and overcome existential challenges before, such as the creation of nuclear bombs.

- 00:55:00 In this section, a distinguished panel of guests, including Henry Kissinger, Elie Wiesel, William F. Buckley Jr., and Carl Sagan, discuss the importance of having a democratic debate about the development of AI to avoid a future in which a small number of people hold the power to onboard humanity onto the AI plane without considering the consequences. The group calls for a nationally televised discussion that includes the heads of major labs and companies, as well as leading safety experts and civic actors, to collectively negotiate and decide what future we want for AI. The experts suggest selectively slowing down the public deployment of large language model AIs as a precautionary measure, just as we do with drugs and airplanes, to put the onus on the makers of AI rather than citizens to prove why they think it's dangerous.

01:00:00 - 01:05:00

In "The A.I. Dilemma," the speaker warns of the dangers of unregulated deployment of AI technology, which could lead to democracies losing out to countries like China. They suggest that while slowing down the public release of AI capabilities could also slow down Chinese advances, it's essential to consider the risks and prevention measures. The speaker highlights the difficulty in comprehending the implications of AI but urges open discussion and collaboration to find negotiated solutions that balance the numerous benefits of AI with its significant risks.

- 01:00:00 In this section, the speaker argues that unregulated and reckless deployment of AI technology, similar to unregulated social media, makes democracy incoherent and ultimately leads to losing out to countries like China. However, slowing down public releases of AI capabilities would also slow down Chinese advances because the open-source models help China to advance, particularly when they are not regulated. The recent US export controls have been good at slowing down China's progress on advanced AI, and this is a lever to keep the asymmetry going. The speaker raises the question of what else needs to happen to address the risks of AI, and how to close the gap between the risks and prevention measures.

- 01:05:00 In this section, the speaker emphasizes the difficulty in comprehending the implications of AI and recognizing the potential harm it could cause. They urge listeners to be patient with themselves as they navigate this complex topic. They explain that AI will undoubtedly have numerous benefits, such as medical discoveries and solving societal problems, but they caution that the risks are significant and could ultimately undermine those benefits. They encourage open discussion and collaboration to find a solution that is negotiated among stakeholders.

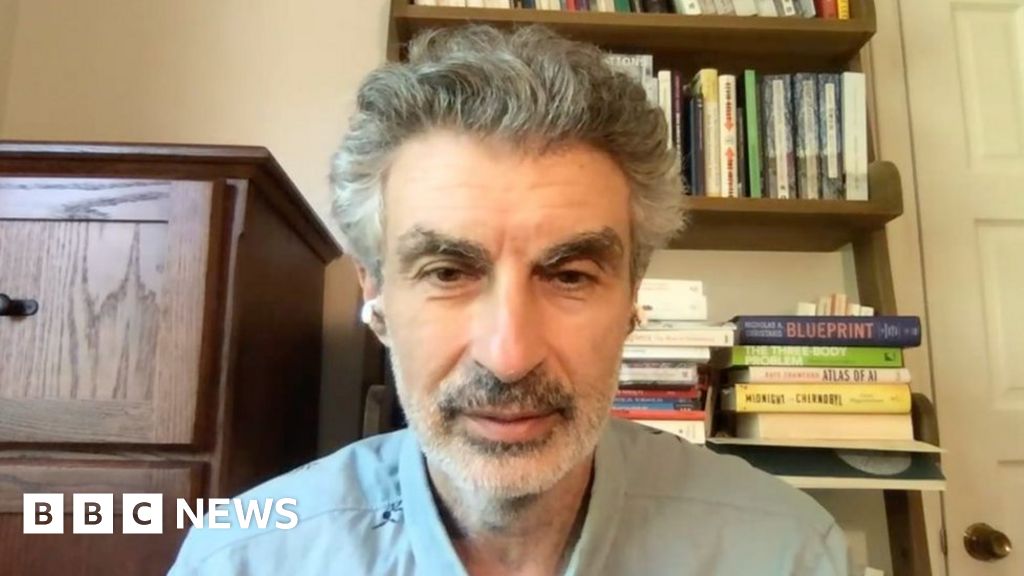

AI 'godfather' Yoshua Bengio feels 'lost' over life's work

Canadian computer scientist Yoshua Bengio tells the BBC he did not realise AI would develop so fast.

www.bbc.com

Prof Bengio told the BBC he was concerned about "bad actors" getting hold of AI, especially as it became more sophisticated and powerful.

"It might be military, it might be terrorists, it might be somebody very angry, psychotic. And so if it's easy to program these AI systems to ask them to do something very bad, this could be very dangerous.

"If they're smarter than us, then it's hard for us to stop these systems or to prevent damage," he added.

Prof Bengio admitted those concerns were taking a personal toll on him, as his life's work, which had given him direction and a sense of identity, was no longer clear to him.

"It is challenging, emotionally speaking, for people who are inside [the AI sector]," he said.

"You could say I feel lost. But you have to keep going and you have to engage, discuss, encourage others to think with you."

betstarship

Guest

Google "We Have No Moat, And Neither Does OpenAI"

Leaked Internal Google Document Claims Open Source AI Will Outcompete Google and OpenAI
