Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.

Artificial Intelligence

- Thread starter Buckminster

jabloomf1230

Minister of Silly Walks

ChatGPT's fate hangs in the balance as OpenAI reportedly edges closer to bankruptcy

OpenAI's AI-powered chatbot is running on fumes.

www.windowscentral.com

Tiger

Active Member

ChatGPT's fate hangs in the balance as OpenAI reportedly edges closer to bankruptcy

OpenAI's AI-powered chatbot is running on fumes.

www.windowscentral.com

Strange hit piece. Amazon didn't make a profit for years, so how do they expect OpenAI to turn a profit from the get-go, and would that even be a smart strategy? If they ever had trouble financing, all they'd need to do is IPO, but I doubt it comes to that.

betstarship

Guest

Might be insightful

blog.eladgil.com

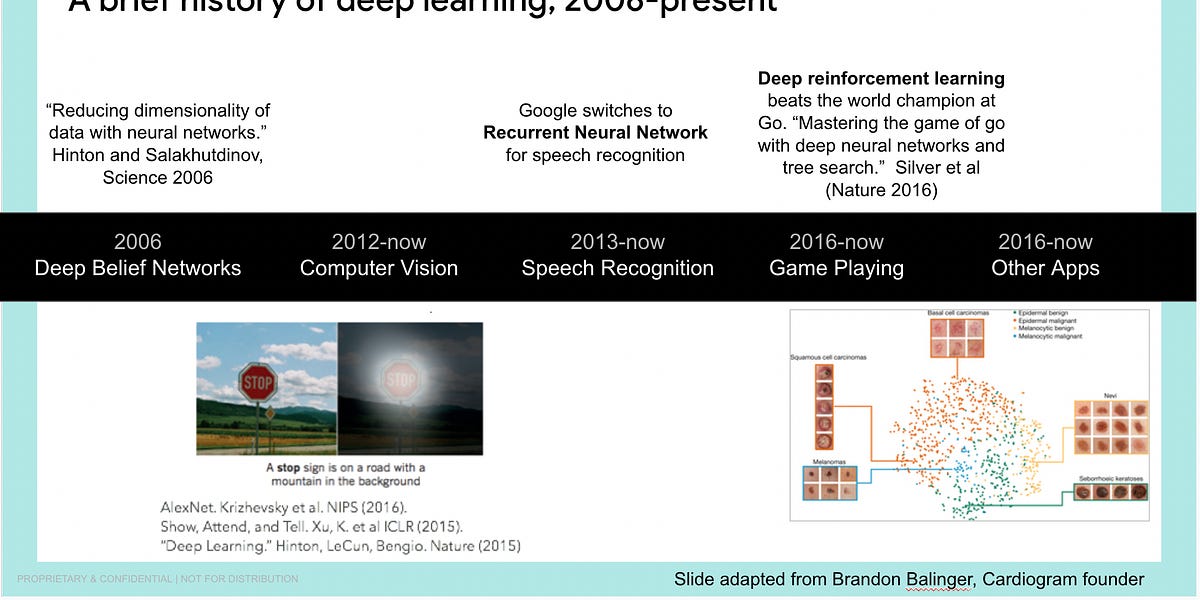

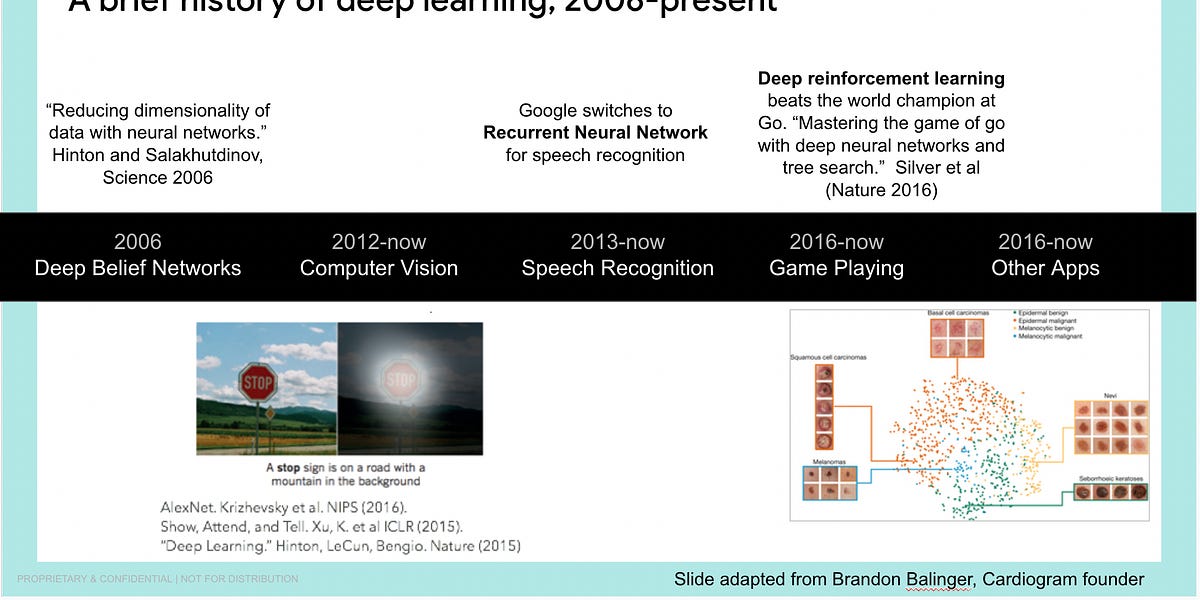

Early days of AI

Rather than viewing LLMs, Transformers, and diffusion models as part of a continuum with past "AI", it is worth thinking of this as an entirely new era, a discontinuity from the past.

Not just Zuck but also Bill and Elon in the same room!

Good talk about alignment and AGI; 1.75x playback speed worked well for me:

cliffs: AGI very likely in our lifetime, >megagigantic impact on our lives, alignment is hard as reinforcement learning is creative.

My main takeaway from the first video is that we should regulate the big actors. IMO the problem with this is that small actors today can do what only the big actors could do a few years ago. Sometimes the small actors are almost ahead of the big ones; see, for example, Llama 2, Midjourney, etc. So this seems like a very temporary solution: maybe useful to do, but not enough to ease our worry.

My main takeaway from the second video is that we should use AI to prove that AI is safe, and even if we are not smart enough to understand the AI, at least we can verify the proof. This sounds nice, as long as everyone always uses these proof requirements. But as technology improves, more and more people will have the means to run these experiments, and can we really make sure that all of them run these proof checkers all the time? Humanity being saved by mathematical proofs sounds like something a math guy would want to believe and support.

I came out of these talks less optimistic.

Super impressive results; this will kill so many apps and jobs. Makes me want to renew that GPT-4 sub...

DALL·E 3

DALL·E 3 understands significantly more nuance and detail than our previous systems, allowing you to easily translate your ideas into exceptionally accurate images.

openai.com

Not just Zuck but also Bill and Elon in the same room!

No Intel or any other chip manufacturer (Nvidia is the nearest equivalent, unless I missed one). Likely not a priority vs. software, but maybe a bit more hardware should at least be at the table here. Pat, somebody, give 'em a call.

Without question, I am seriously ill-equipped to understand present-day innovations in AI. I retain a serious interest after a decade of working directly with LISP, first on commercial expert systems in the early 1980s and later on applications while consulting with some leaders. I have rarely thought much about IBM in the last few years. This article, though, struck me as a potentially significant development for easing distributed applications in otherwise computationally limited locations: cars, robots, airplanes, etc.

Is this really likely to be a material advance in applicability of, say, FSD and Optimus?

www.sciencemagazinedigital.org

Science Magazine - AI computing reaches for the edge

Artificial intelligence (AI)—the ability of computers to perform human cognitive functions in real-world scenarios—requires substantial computation power,

Seems like a non-statement. Superhuman persuasion is a subset of AGI, so obviously it will come before AGI or at the same time.

But don't worry about doom, OpenAI have a plan. Their plan is to roll it out as fast as possible:

Their old safety measures are being followed very strictly:

Someone watched Star Trek!
