> It’s a shame that we cannot take Elon’s statements at face value.

As is true for every other human on the face of the earth.


FSD v12.x (end to end AI)

- Thread starter Buckminster


AlanSubie4Life

Efficiency Obsessed Member

> As is true for every other human on the face of the earth.

There’s a concept called credibility.

Of course there are no guarantees. But that is not the point!

As a world-class (best ever?) sales/marketing guy who places less importance on technical correctness, Elon definitely uses creative license from time to time!


> There’s a concept called credibility.
>
> Of course there are no guarantees. But that is not the point!
>
> As a world-class (best ever?) sales/marketing guy who places less importance on technical correctness, Elon definitely uses creative license from time to time!

That's still no different from everyone else selling products.

AlanSubie4Life

Efficiency Obsessed Member

> That's still no different from everyone else selling products.

And I did not say otherwise!

> Not clear that what we call machine learning/NNs bears any resemblance to the human brain.
>
> It’s just a term of art - it’s not meant to suggest we are duplicating the function of the brain!
>
> No one knows how our brain actually works, exactly, yet. Know even less about it than we know about NNs.

Um. I've been reading and listening to some fairly interesting podcasts, and most of this is sourced, one way or the other, from Real Researchers. Which, of course, I'm not.

But a year or three ago I was listening to a real researcher describing how the human brain, when processing images, separates everything into 5-sided polygons. That's how he started, and he promptly lost me on the second turn.

I had the distinct understanding after a half hour of all this that he wasn't just putting up random gonzo stuff on what he thought, but that the base level (those polygons, for example) were settled fact.

There are actual structures in the brain, visible with the right equipment, that handle image and color processing.

The point here is that I'm just scratching the surface. Forty years ago, your statement, "No one knows how our brains actually work, yet," may have been absolutely correct. These days, I don't think that's the case.

At one time people envisaged the operation of human brains as being like Lovelace-style computers, complete with gears. Later popular ideas centered around computers and flip flops.

But Neural Networks aren't founded upon us playing around with silicon: they're modeled on the actual operation of the axons and such of the nerves and connections in our brains. I've read reports which talk about the layers of the brain and the directions that these signals travel.

A whole, working model? Nope. Getting closer, with active research? Sure. Feedback mechanisms and math? You betcha. Silicon chip models that mimic the real thing? It's in our cars. How close is what the car does to what our brains are doing? By no means the same, but there are hints. Mother Nature, by dint of bloody tooth and claw, weeds out the stuff that doesn't work as well; if something like flip flops were more efficient than NNs, we likely would have developed that instead. And the fact that HW3 apparently works faster under 12.x than 11.x, well..

The parallel with the brain is that both “see” what their sensors see and learn what the input means. Even as adults we have all had the moment when we looked at what was in front of us but didn’t “get” it for a second or two—or even longer if the data was bad (dark, snowing, etc). That’s enough to use parallel language.

Driving rules might be useful as a boundary check before FSD executes a move, such as the EU g-force limitation on autonomous vehicles taking curves on a highway ramp. They would have to be non-absolute for safe evasive maneuvers, for example. Once all vehicles are moved by computers they can come up with their own rules.
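A rough sketch of what such a boundary check could look like, assuming the planner proposes a speed for a curve of known radius. The function names, the `evasive` flag, and the 3.0 m/s² default are all illustrative assumptions, not a quoted regulatory figure or anything Tesla has described:

```python
import math


def lateral_accel(speed_mps: float, curve_radius_m: float) -> float:
    """Centripetal acceleration v^2 / r on a constant-radius curve."""
    return speed_mps ** 2 / curve_radius_m


def bounded_speed(planned_speed_mps: float,
                  curve_radius_m: float,
                  max_lat_accel: float = 3.0,  # assumed placeholder limit, m/s^2
                  evasive: bool = False) -> float:
    """Cap the planned speed so lateral acceleration stays under the limit.

    The rule is non-absolute: when the (hypothetical) evasive flag is set,
    the planned speed passes through unchanged.
    """
    if evasive:
        return planned_speed_mps
    speed_limit = math.sqrt(max_lat_accel * curve_radius_m)
    return min(planned_speed_mps, speed_limit)
```

With these placeholder numbers, a planned 30 m/s on a 100 m radius ramp would be capped to about 17.3 m/s, unless the move is flagged as evasive.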


> There’s a concept called credibility.
>
> Of course there are no guarantees. But that is not the point!
>
> As a world-class (best ever?) sales/marketing guy who places less importance on technical correctness, Elon definitely uses creative license from time to time!

As the CEO of the only tech company in the world with an ADAS working on city streets in an entire country, Elon’s credibility is very high.

That’s not marketing. City Streets ADAS is SHIPPING FUNCTIONALITY WAY ahead of everyone else.

diplomat33

Average guy who loves autonomous vehicles

In light of the V12 livestream, I have some questions about Tesla's end-to-end video training approach:

- Since the approach is dependent on video training, will overfitting be an issue? Could we see V12 work better in some areas where Tesla has more video data and work less well in other areas with less video data?

- Will there be diminishing returns as Tesla gets to more of the long tail? I feel like in the beginning, as Tesla is training on very common cases that are easier to find, they won't need as much data to get the same results. Progress will be faster. But as they get to rarer edge cases, Tesla will need more and more data to get the same results and progress will slow down. Is that a real concern?

- In the US, different areas have different road infrastructure, different driving behaviors, different traffic rules. Won't it be hard to truly generalize one NN to handle all of those differences? Won't Tesla need to collect training data from basically everywhere to train the NN on all the differences? Will Tesla need to backtrack on the "all nets" and use some special rules for certain areas?
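To put the long-tail question in rough numbers: if a scenario shows up once every so many fleet miles, the miles needed to collect a fixed number of examples grow in proportion to its rarity. A back-of-envelope sketch, where the function name and every figure are made up for illustration and say nothing about Tesla's actual data:

```python
def miles_needed(examples_wanted: int, miles_per_event: int) -> int:
    """Expected fleet miles to observe a given number of examples
    of an event that occurs on average once per `miles_per_event` miles."""
    return examples_wanted * miles_per_event


# Hypothetical rarities: a common case vs. a rare edge case.
common_case = miles_needed(1_000, 1_000)      # once per thousand miles
rare_case = miles_needed(1_000, 1_000_000)    # once per million miles
```

Under these assumed rates, the same 1,000 examples cost a thousand times more driving for the rare case, which is the diminishing-returns worry in the second question.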


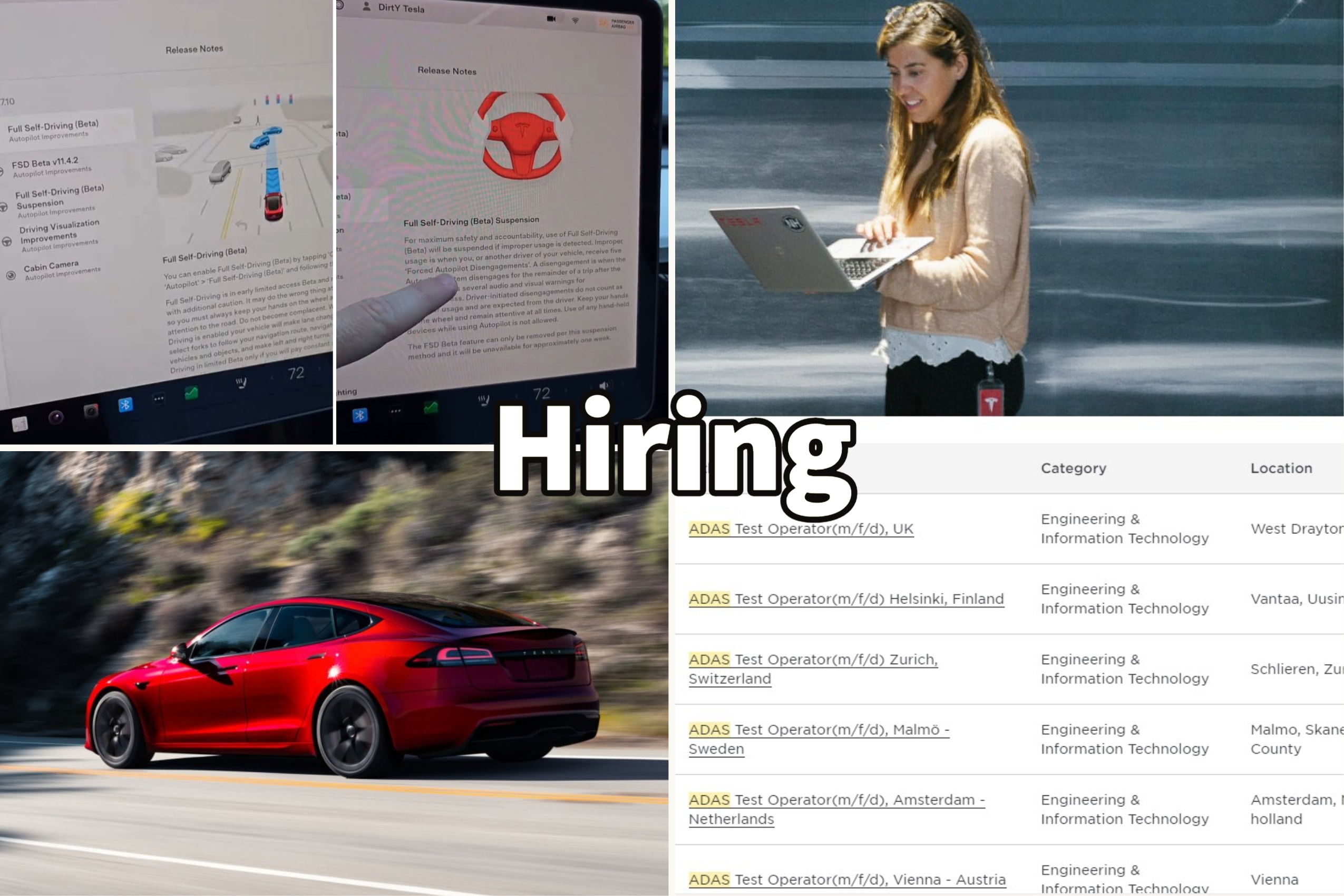

> Those of us in the RoW are stuck on the 7 year old stack

Elon said on the stream, and I quote: "We got FSD 12 test drivers around the world"

Patience! This is hugely positive news: the “ADAS test operators” that Tesla has been recruiting all over the world are testing FSD V12!

(Damnit, I should have applied for the job)

> If the system is told that driving on the road is good, why does it have to be told that driving off a cliff is bad? Because it may have enough weak positive cues (e.g. poorly marked roads, etc) that it would entertain driving off the cliff?

Sure, or many other cases.

Because our perception of the world ("driving off a cliff") doesn't necessarily match the internal perceptions and intuitions of a big bag of grey neural goo whose representations we don't understand. It may never have seen cliffs before, or have any concept of what a cliff is.

We don't understand human internal representations either, but human brains are the product of millions of years of vertebrate evolution, which had sharp reinforcement feedback on doing stupid things. And even then, teenage drivers are really bad, with poor judgment.

> If so, can't that be addressed by complaining that the system doesn't have enough confidence in good actions that it requires the driver to take over?

That's a giant unsolved problem in machine learning (and humans too): the confidently incorrect. And suppose it alerts like a frightened mother-in-law screaming from the other seat 400 milliseconds away from a disaster?

> Isn't that part of the whole SAE driving levels thing?

Sure, but declaring a level doesn't solve the problem technologically.
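To make the "confidently incorrect" point above concrete, here is a minimal sketch of a confidence-gated takeover request. It assumes the network exposes per-action scores (logits); the function names and the 0.8 threshold are hypothetical, not anything from FSD:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def needs_takeover(logits, threshold=0.8):
    """Request a driver takeover when the top action's probability
    falls below the (assumed) threshold."""
    return max(softmax(logits)) < threshold


uncertain = needs_takeover([1.0, 1.1, 0.9])   # scores nearly tied
confident = needs_takeover([8.0, 0.5, 0.2])   # one action dominates
```

The gate only sees how sure the network is, not whether it is right. If the dominant action in the second case happens to be the wrong one, no alert fires, and that is exactly the failure mode being described.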

diplomat33

Average guy who loves autonomous vehicles

No surprise, the head of Tesla's FSD says FSD will be the bestest thing ever. lol.

> In light of the V12 livestream, I have some questions about Tesla's end-to-end video training approach:
>
> - In the US, different areas have different road infrastructure, different driving behaviors, different traffic rules. Won't it be hard to truly generalize one NN to handle all of those differences? Won't Tesla need to collect training data from basically everywhere to train the NN on all the differences? Will Tesla need to backtrack on the "all nets" and use some special rules for certain areas?

Technologically that can be solved by having some nets be segmented (whether fully or partially) or fine-tuned by region and other parts remain the same in an end to end training. Or, like the LLM fine-tuning, a low-rank overlay delta that's region specific on top of a generic base model.

The big problem will of course be the data curation and testing, as adherence to certain rules can no longer be tested with normal rule-based checks but only inferred from a huge set of test cases (and there can be overfitting indirectly there too).
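For anyone unfamiliar with the low-rank overlay idea mentioned above, here is a toy sketch in plain Python: a shared base weight matrix plus a small region-specific delta formed as the product of two thin matrices, in the style of LLM low-rank fine-tuning. The matrices, the region name, and the helper functions are all made up for illustration and are not Tesla's architecture:

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def add(a, b):
    """Element-wise sum of two same-shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Generic base weights shared by every region (3x3 identity as a stand-in).
BASE_W = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.0, 1.0]]

# Hypothetical per-region rank-1 factors: B is 3x1, A is 1x3.
REGION_DELTAS = {
    "region_a": ([[0.5], [0.0], [0.0]], [[0.0, 1.0, 0.0]]),
}


def effective_weights(region):
    """Base weights plus the region's low-rank delta B @ A, if one exists."""
    if region not in REGION_DELTAS:
        return BASE_W
    b, a = REGION_DELTAS[region]
    return add(BASE_W, matmul(b, a))
```

The appeal is storage and training cost: the rank-1 delta here needs 6 numbers instead of the 9 in a full 3x3 matrix, and the gap widens quickly as layers get larger. Regions without an overlay simply fall back to the base model.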

> Maybe the most refreshing info from this stream was Elon finally admitting V11.x is a kludgy mess.

And some time back the previous versions were a "kludgy mess" and V10 or V11 was going to be the awesome sauce

Andrej Karpathy providing some insights into keeping neural network modules around such as vector space outputs not only to bootstrap end-to-end training but also provide insights into what's affecting end-to-end video -> control.

goRt

Active Member

> Elon said on the stream & I quote "We got FSD 12 test drivers around the world"
>
> Patience! This is a hugely positive news that the “ADAS test operators” that Tesla has been recruiting all over the world are testing FSD V12!
>
> (Damnit, I should have applied for the job)

Yours is GA, he refers to a couple of test mules

Our stack is 7 years old

AlanSubie4Life

Efficiency Obsessed Member

> City Streets ADAS is SHIPPING FUNCTIONALITY WAY ahead of everyone else.

I would not argue with this at all (with the caveat that I have no experience with other systems, with the exception of a primitive Toyota).

However that is irrelevant to Elon’s credibility. I was speaking to his specific statements on what is going on under the hood with V12. Not his promises of what will be delivered (setting aside when it will be delivered - which refreshingly he did not talk about in the video…as I recall).

> I wonder how they will prevent hallucinations with this method?

Hallucinations have been used to describe when large language models put together words that sound like facts but are false. More general, and more relatable to what we've experienced with FSD Beta, are false positives, e.g., believing there's a pedestrian at a mailbox when there's nobody there. Traditional control code tells the vehicle to go around pedestrians, but a control network could potentially determine, based on other signals over time, that the mailbox doesn't require swerving around.

But more broadly, now with end-to-end networks, any incorrect control behavior could somewhat be considered a hallucination if you want to call it that.

> Yours is GA, he refers to a couple of test mules

In fact, he refers to a few countries. And these countries have test mules in all the major cities.

Tesla Hired a Lot of ADAS Test Operators in Europe, and We All Know What It Means

Tesla has opened many ADAS test operator positions throughout Europe, hinting at the Full Self-Driving program beginning testing in full force

BTW, NFI what GA means.

AlanSubie4Life

Efficiency Obsessed Member

> ...now with end-to-end networks, any incorrect control behavior could somewhat be considered a hallucination if you want to call it that.

Yeah that is what I am referring to. I think exactly how this sort of thing will manifest in driving is unclear (to me at least). But I also think that occasional unexplained (or easily explained by a human) errors are likely with an unconstrained approach (as advertised in the video - this is what I specifically wonder about with Elon’s claims).

> No surprise, the head of Tesla's FSD says FSD will be the bestest thing ever. lol.

I always took Ashok as a yes-man who wants to be liked by Elon, compared to Andrej.

Andrej would never say some nonsense like this.

It sounds like something a child would say. "My mom is..."

In fact, I recall Andrej saying the opposite when asked about timelines.

He would always downplay/deflect and say how hard the things they were working on were.

100% cringe
