Eno Deb

Active Member

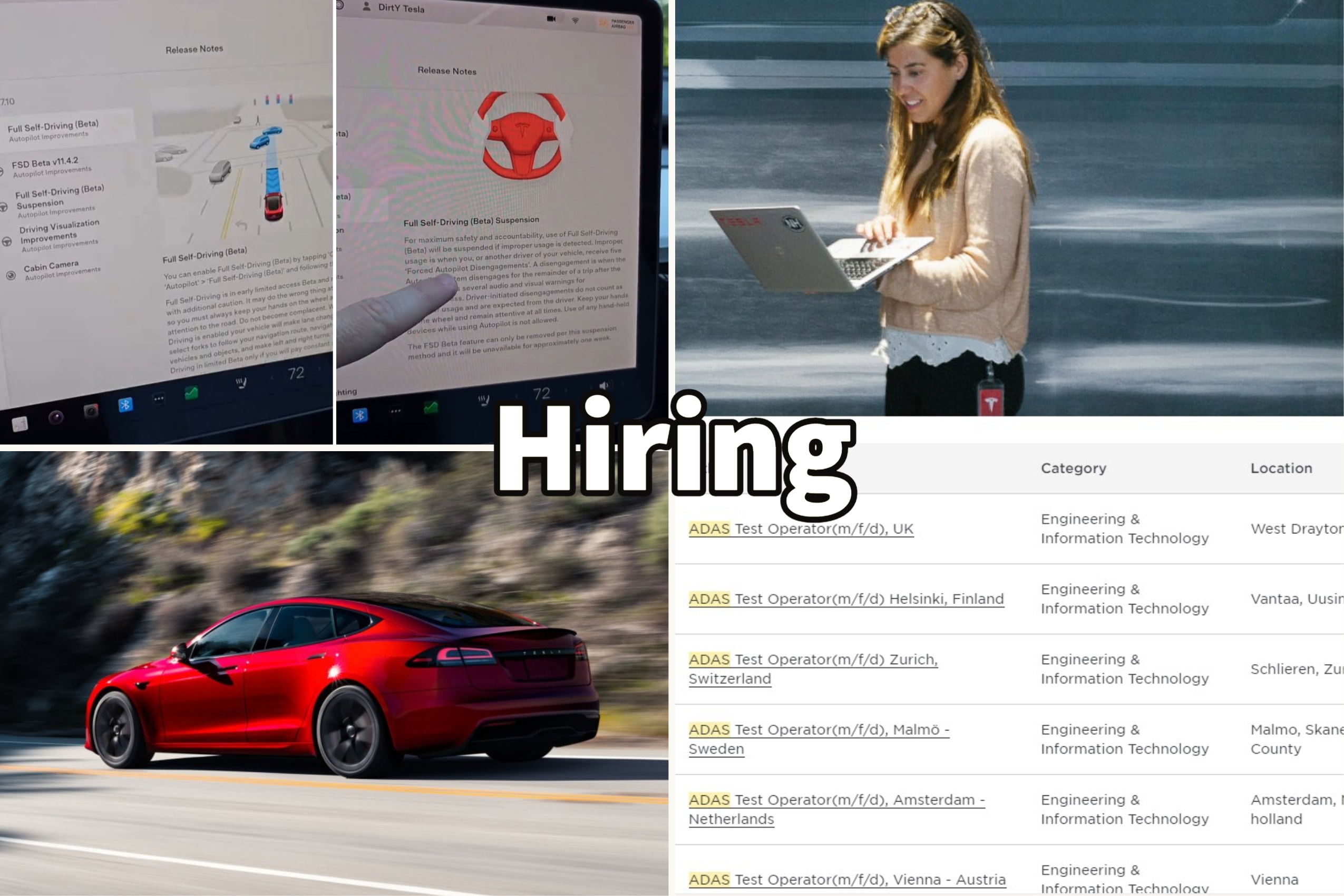

That could mean that they are technologically ahead, or just that they are more reckless in releasing unfinished functionality ... As the CEO of the only tech company in the world with an ADAS working on city streets in an entire country, Elon’s credibility is very high.

That’s not marketing. City Streets ADAS is SHIPPING FUNCTIONALITY WAY ahead of everyone else.

Measured by Musk's promises, what they released simply doesn't work. Here's a quote I ran across earlier today:

"The car will be able to find you in a parking lot, pick you up, take you all the way to your destination without an intervention this year. I'm certain of that. That is not a question mark. It will be essentially safe to fall asleep and wake up at their destination towards the end of next year."

That was in February 2019 ...