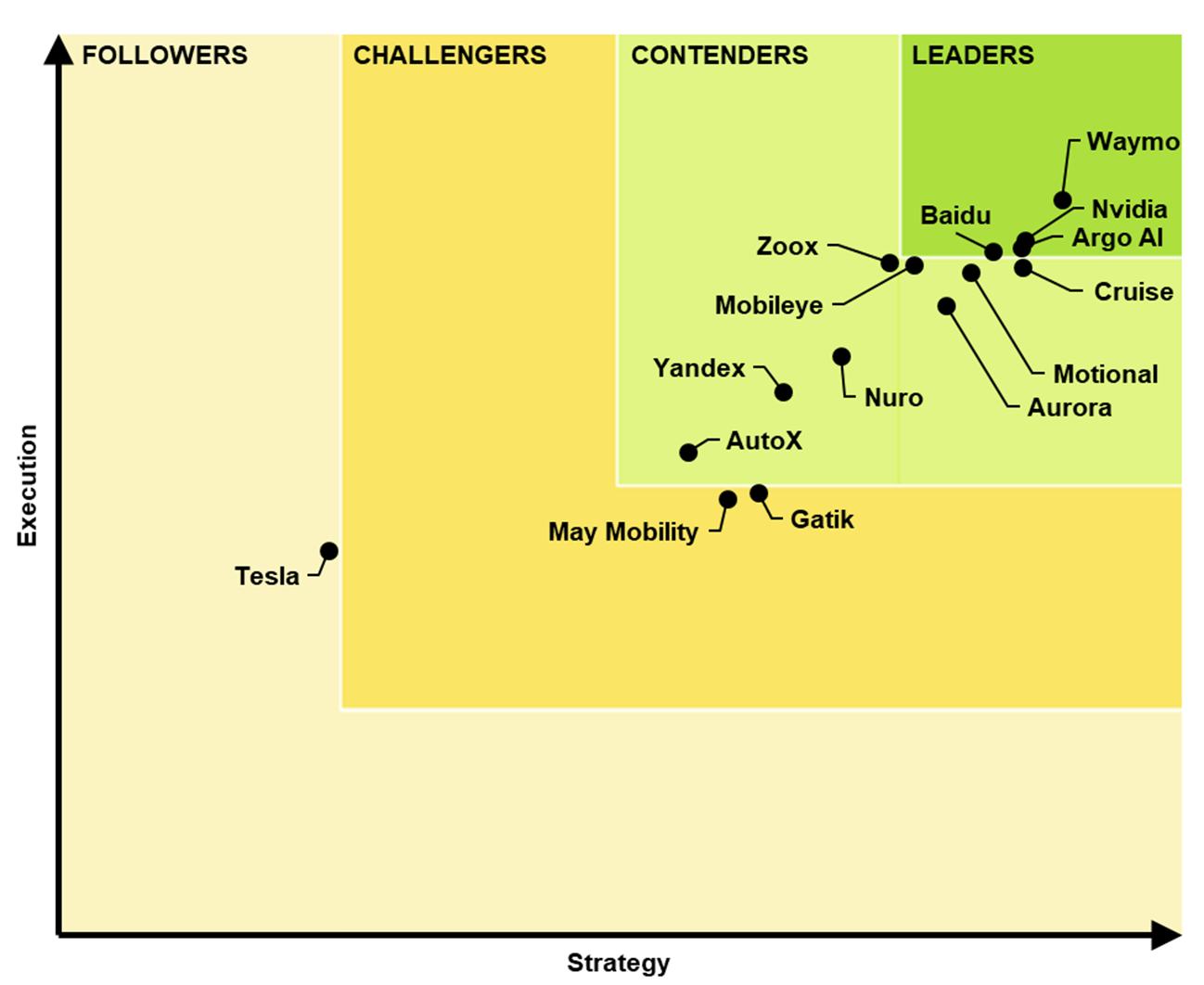

That's interesting, and there are certainly lots of serious players in this field. However, I doubt it fully accounts for how quickly AI can develop, particularly given Tesla's massive data intake and its approach.

That's the neural net religion, though: the belief, without proof, that if we just push enough data through the neural nets, they'll magically figure things out. That works up to a point, but no further.

What does "strategy" mean here, and how is it ordered? That is, how did they place the companies along the horizontal axis?

I didn't buy the full report, which is expensive, but the ranking agrees with at least one other I've seen.

I think the fundamental flaws with the Tesla system are:

- Some important positions lack cameras (the four corners, pointing straight left and right). There's no cross-traffic awareness. Humans, by contrast, not only have a binocular head that swivels to see over a wide range, but also three mirrors that cover a good range too.
- Assuming that, if we just get enough data, the neural nets will solve the engineering problem for us. I can't prove this is impossible, but from what I've read it would take far more processing power and more levels of neural nets to get there. And even then it's a bit of a crapshoot.
- Dropping radar. I get repeated warnings when driving through areas of bright light and shadow. It's silly.
- Avoiding LIDAR. It solves a major vision problem easily. Why avoid it?

In real-world use, all of this shows up in countless ways:

- I can see the cars ahead of the car ahead of me, and I can slow down when needed.
- I can see the brake lights half a mile down the road, or the bunching of traffic, and leave some extra following distance.
- I can see the traffic light half a mile away and anticipate it.
- I can see the guy drifting in his lane who is about to signal that he's moving over, so I give him room before he signals.
- I can see the look on the face of the woman across the intersection and know whether I should go or she should.
- I can see the guy on the other side of the parked truck, walking rapidly toward the street, and swing wide in case he walks into the road, even though I can't see him for most of this interaction.

As far as I can tell, the Tesla system does none of this; it barely sees past its own nose. The vision recognition is poor, showing only a fraction of the nearby cars I can see, let alone those far away. Does it know about that mid-corner bump coming up? Definitely not. A significant amount of my driving involves anticipating and avoiding road imperfections, and Tesla doesn't do this at all.

So I think Tesla took the dark-side approach to automotive AI: they took the easy path to the low-hanging fruit, but they don't seem to have much of a long-term technological strategy beyond more data and more processing.

In sharp contrast, have you looked at what Waymo does at all? Its approach is far more sophisticated.

I’ll go out on a limb here and say I’m 100% certain we’ll have an L4 or L5 vehicle BEFORE 2045, and then go out on a branch and say I’m 90% certain Tesla won’t have robotaxis in 2 years. Probably not even in 3-4. Maybe not even in 5. I’ll set some calendar reminders to check back on. ;-)

I hope you're right. I don't see any evidence that you are, though.