Is the understanding that it's the sensor inputs (e.g. camera pixel counts, etc.) that are the issue with running FSD on HW4 vs. HW3? I should have checked TMC before posting the question.

This post has the most interesting information: Do any HW4 Vehicles have FSD Beta activated?

Also this one: Do any HW4 Vehicles have FSD Beta activated?

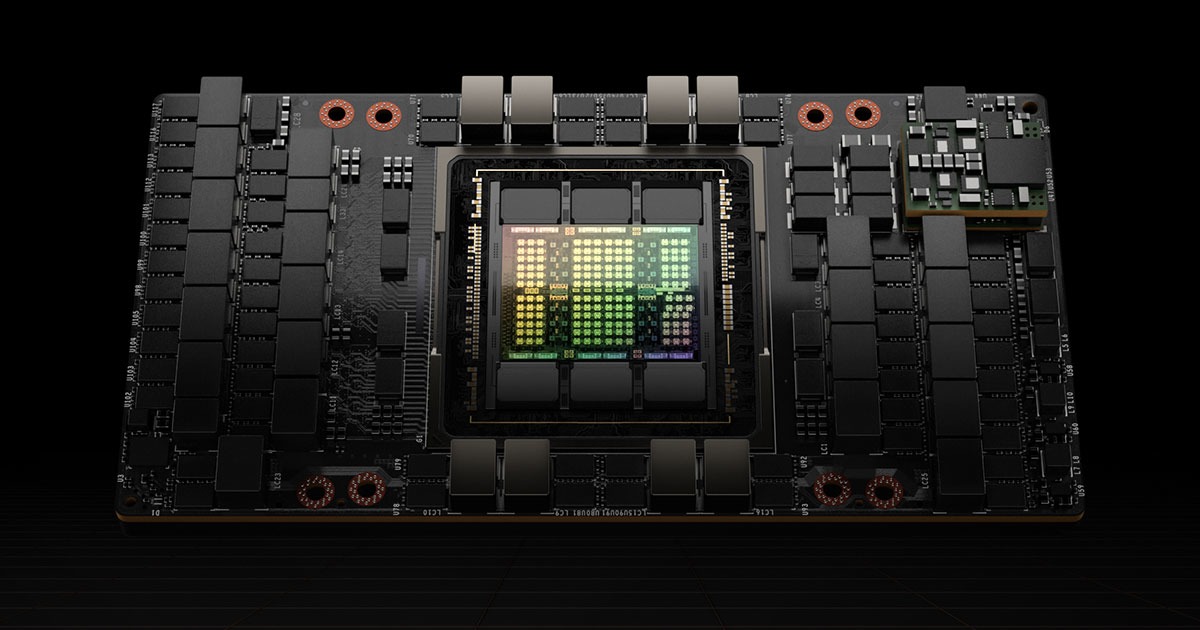

I would have expected the actual hardware to be a superset of HW3: more/faster NN processing cores, more memory, higher on-/off-die bandwidth, more/faster ARM CPU cores, etc. I'd also expect some new architectural additions (e.g. new CPU instructions, additional data types the NN cores can process, etc.). All of this would allow the old code to run on the new hardware, just faster, whereas new code that took advantage of the new features wouldn't run on the older HW. That's much the way new x86 CPUs can run old software, while new software that uses things like SSE instructions won't work on older CPUs that lack them.
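A toy way to picture the superset argument (Python, purely illustrative): each hardware generation is modelled as a set of features, and a build runs only if every feature it relies on is present. The feature names, `HW3_FEATURES`/`HW4_FEATURES` and `can_run` are all hypothetical; this is just set logic, not how Tesla actually checks compatibility, and it assumes HW4 really is a strict superset of HW3.

```python
# Hypothetical model of the "superset" argument. Feature names are made up.
HW3_FEATURES = frozenset({"arm_cpu", "nn_int8"})
# Assumption: HW4 keeps everything HW3 has and adds new capabilities.
HW4_FEATURES = HW3_FEATURES | {"nn_fp16", "new_cpu_isa"}


def can_run(required: set[str], hardware: frozenset[str]) -> bool:
    """True if the hardware provides every feature the build requires."""
    return required <= hardware  # subset test


old_build = {"arm_cpu", "nn_int8"}             # only uses HW3-era features
new_build = {"arm_cpu", "nn_int8", "nn_fp16"}  # relies on an HW4-only feature

for name, build in (("old build", old_build), ("new build", new_build)):
    for hw_name, hw in (("HW3", HW3_FEATURES), ("HW4", HW4_FEATURES)):
        print(f"{name} on {hw_name}: {can_run(build, hw)}")
```

Running it prints True for the old build on both HW3 and HW4, and False only for the new build on HW3, which is the x86/SSE situation in miniature.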

If so, the NNs should largely run unmodified, except for anything that is timing- or sensor-format-dependent... which hopefully needs only a small amount of modification.
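To make the sensor-format point concrete, here's a toy sketch (Python, stdlib only). Everything in it is hypothetical: `run_model`, `resize_nearest`, `MODEL_INPUT` and the frame sizes are placeholders, not real HW3/HW4 camera resolutions or anything from Tesla's stack. A net trained on one input shape rejects frames of another shape, so a different camera needs some adaptation step; nearest-neighbour resizing is just one simple option, and a real network might need retraining instead.

```python
# Hypothetical: (height, width) the toy network was trained on.
MODEL_INPUT = (4, 6)


def run_model(frame: list[list[int]]) -> int:
    """Stand-in for the NN: only accepts frames matching MODEL_INPUT."""
    h, w = len(frame), len(frame[0])
    if (h, w) != MODEL_INPUT:
        raise ValueError(f"expected {MODEL_INPUT}, got {(h, w)}")
    return sum(map(sum, frame))  # placeholder "inference" result


def resize_nearest(frame: list[list[int]], out_h: int, out_w: int) -> list[list[int]]:
    """Nearest-neighbour resample of a 2-D frame to (out_h, out_w)."""
    in_h, in_w = len(frame), len(frame[0])
    return [[frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


# Higher-resolution frame from a hypothetical new camera (8 x 12 pixels).
new_frame = [[r * 12 + c for c in range(12)] for r in range(8)]

try:
    run_model(new_frame)  # unmodified model: shape mismatch
except ValueError as err:
    print("unmodified model:", err)

adapted = resize_nearest(new_frame, *MODEL_INPUT)
print("after resize:", run_model(adapted))
```

The point is only that the shape-dependent front end has to change; the rest of the (toy) model runs untouched.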

Or is the thought that HW4 is a significant architectural departure from HW3?