Well as you can already tell, they hyped it up by calling it "level 5" but their statement is far from it as it states "in almost all conditions".

As far as the full system capabilities, we already know there are multiple situation where the side repeater cameras will be blocked when pulling out of driveways/parking lots that are adjacent to a highway. not only that but the entire architecture infrastructure is built on concrete side barriers, bushes, gardening, whatever that are at the height level of the side repeaters which would eliminate them. Making these situations far more in occurrence than you think. This is why Nissan said all cameras must be on top unless the views are jeopardized and why mobileye moved up their camera design to mirrors.

Then you look at their use of ultrasonic for parking and how that fails to see low/thin objects.

Finally the problem with vision only system (which i'm the first and only person who argued that this weren't a vision/radar system but a vision only while the radar's task will eventually be somewhat like look for the car ahead and the car ahead of it) is that the vision system doesn't see what it doesn't understand. If a UFO lands in the middle of the street, using only a vision system, the car will run into it. There are possible ways to circumvent this, using a semantic free space that detects the road textures, treat the edge of the free space as an obstacle.

But that's not only a bandage to a sink hole sized problem.

The full potential for this system is L3 on highways and L2 on urban roads.

Elon has been proclaiming over a span of 2 years ago that level 5 will ready by dec 2017, june 2018 and now lately april 2019. In 2015 he said "I really consider autonomous driving a solved problem," now he is saying if they solve vision then they solve autonomous driving.

He's a hype machine, you will never know tesla true capabilities listening to what he says. He vowed in 2016 that 100-200k model 3 will be made by end of 2017. The true reality is more like 10k-20k.

But I won't be surprised when Elon releases their FSD software as driver assistance early 2018. Elon has always showed he has a big ego and wants to be seen as first and the best. AP2 software was released in pre-alpha state and qa tested by customers. I won't be surprised if they do the same to FSD. But it will be a pre-alpha product with disengagement every 1-10 miles.

To Elon, this is just another way to assert that they are first to FSD and that its currently in "beta" and he knows his fans/media will lap it up. Just like his hyped media cross country drive.

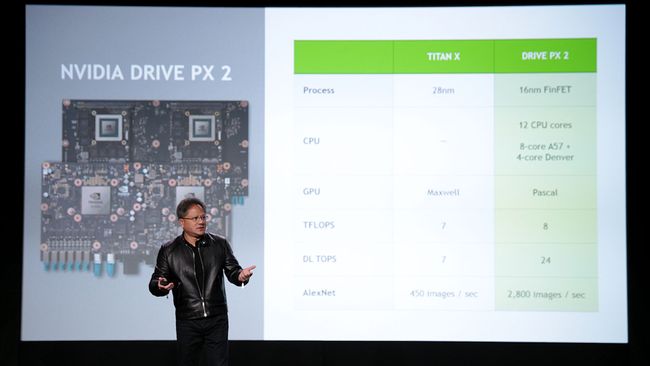

i remember Nvidia saying that one PX2 is equivalent to 4 or 6 titan x,