In @DamianXVI's post you can actually see one of the windshield defroster threads (see the fisheye pic).

Welcome to Tesla Motors Club


AP2.0 Cameras: Capabilities and Limitations?

- Thread starter lunitiks


AnxietyRanger

Well-Known Member

Hard to tell from a 140-character-limited medium, but I believe this tweet by Elon potentially confirms that camera data from more than just the rear-view camera can be routed to the CID. I recall earlier pages noting that there is only one video output to the CID, which from a cabling perspective seems to be connected only to the rear-view camera, but questions were raised about whether the data sent over it can be controlled via software. It appears so.

Elon Musk on Twitter

An alternate reading, based on the parent post, could be that they can only capture the footage and save it to an attached flash drive, but I think that's less likely to be something Elon would describe as interesting to ship to end users.

I believe it would make sense for the output to be software-controllable, essentially the video output of the Nvidia computer. Offering an alternative 360° view for parking situations, even if it were black and white and couldn't see far enough down in places, would IMO be interesting and useful.

Just put a black box in the middle for the parts it cannot see. It could also cover only the sides and the back of the car, like earlier see-around systems did without nose cameras. Even if this view only showed the image from the three rear-looking cameras, which would seem to hug the sides of the car very closely, it would IMO be useful.

Something like this should be doable:


AnxietyRanger

Well-Known Member

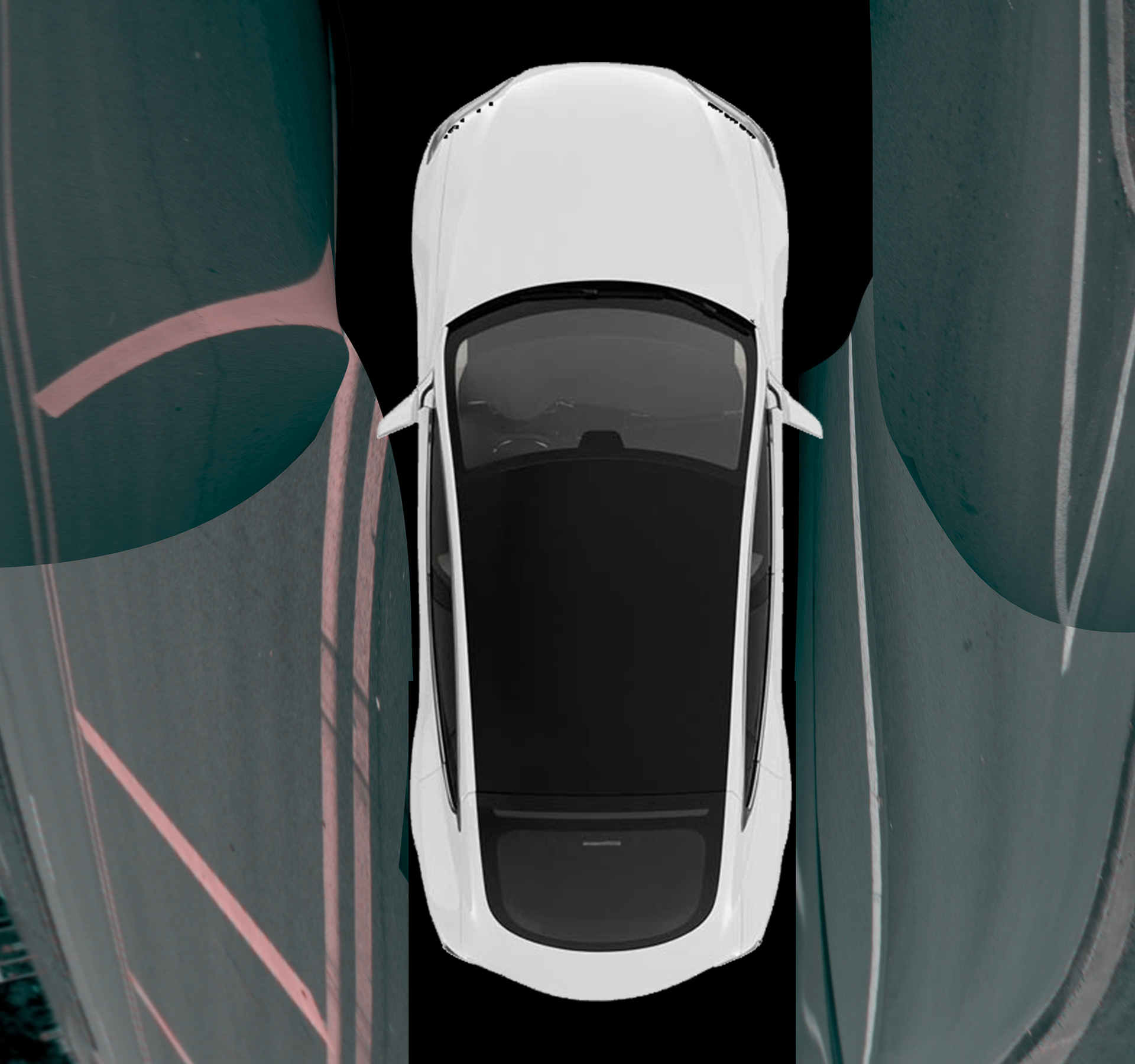

I made a quick mock-up using @DamianXVI's images of what a 360° bird's-eye view could look like, as far as the side cameras are concerned.

Never mind the very poor perspective work on my part; the main point here is to illustrate the system's blind spots around the front quarter of the car, where the cameras cannot see all the way down to the metal. This should be less of an issue towards the rear. Follow the road markings flanking the car: they are visible in the backward-looking cameras, but not in the forward-looking side cameras.

Of course, the rear can be completed with the rear-view camera (not shown here), and the front could be complemented (at some distance from the nose) with the front fisheye...

IMO this could still be a very useful view, especially from around the side mirrors backwards...
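For anyone wanting to try this, here's a minimal Python sketch of the usual technique for such views (inverse perspective mapping): each camera gets a 3x3 homography from the ground plane to its image, and a top-down grid is filled by sampling the camera image, leaving unseen cells black. The homography values, grid size and function names are hypothetical (Tesla's actual calibration isn't known here), and the wide-angle/fisheye images would need to be undistorted first. One tile per camera (forward- and backward-looking side cameras, rear-view camera) would then be pasted into a single canvas around the car outline.

```python
import math
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Image = List[List[int]]


def ground_to_pixel(h: Matrix3, x: float, y: float) -> Optional[Tuple[float, float]]:
    """Map a ground-plane point (x, y), in meters, to image pixel (u, v).

    Returns None when the point projects behind the camera (w <= 0).
    """
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w <= 0:
        return None
    return u / w, v / w


def birdseye_tile(image: Image, h: Matrix3, width: int, height: int,
                  meters_per_cell: float) -> Image:
    """Resample one camera image onto a top-down grid.

    Cells the camera cannot see (behind it or outside the frame) stay
    black (0), i.e. the "black box" for blind spots.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for j in range(height):
        for i in range(width):
            # Ground coordinates of this cell's center.
            x = (i + 0.5) * meters_per_cell
            y = (j + 0.5) * meters_per_cell
            uv = ground_to_pixel(h, x, y)
            if uv is None:
                continue
            # Nearest-neighbor sampling; floor() so small negative
            # coordinates count as outside the frame.
            u, v = math.floor(uv[0]), math.floor(uv[1])
            if 0 <= u < cols and 0 <= v < rows:
                out[j][i] = image[v][u]
    return out
```

With an identity homography the tile is just the image cropped or padded with black, which is a handy sanity check before plugging in real calibration.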


I finally created a (very color-wrong) video from the h265 frames; now to figure out how to tell ffmpeg to switch the underlying image format.

So far the HEVC stream implies YUV, which is certainly wrong.

file out.mp4 in Box


stopcrazypp

Well-Known Member

Kind of off topic, but given you have access to the GPS screen, have you been able to figure out the accuracy? I've previously speculated that whatever method they are using (maybe with inertial sensors), they are getting accuracy within 2-3 feet, not the 10-foot maximum of regular GPS. It would be nice to settle this question.

Ok, to tie up some loose ends from before.

The requested tuning parameters screen:

View attachment 227921

The GPS screen (wow, 12 sats in my garage)

View attachment 227922

BTW, on APE there's an "inertializer" binary that I guess might also be doing something related to positioning?

And this is APE on a parked car (in Park), happily blasting away analyzing my garage. I'm still not sure how much power it really consumes.

View attachment 227923


But how would I verify it? Are there calibrated lots you can go to that have every foot marked, so you can compare the markings against the detected position, or how does that work?

You can use Google Maps: enter the lat/long and compare.

You can also use your phone: take its lat/long and compare as well.
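If you do compare two lat/long readings (car vs. phone, or raw vs. corrected), the gap between them can be computed with the haversine formula. A minimal Python sketch follows; it treats the Earth as a sphere with the mean radius, which is typically within about 0.5% of the exact WGS 84 ellipsoid distance. The function names are just for illustration, and as the thread points out, neither reading is ground truth.

```python
import math

# Mean Earth radius in meters (spherical approximation of WGS 84).
EARTH_RADIUS_M = 6_371_008.8


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/long points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def meters_to_feet(m: float) -> float:
    """Convert meters to international feet (1 ft = 0.3048 m)."""
    return m / 0.3048
```

For example, `meters_to_feet(haversine_m(lat_car, lon_car, lat_phone, lon_phone))` gives the disagreement in feet, directly comparable to the 2-3 ft vs. 10 ft figures above.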


But why do you think my phone is any more accurate than the car?

As for Google Maps, does it really have enough resolution to tell?

Satellite map overlays are definitely not accurate enough to determine this! And virtually every navigation app uses some sort of driving-likelihood-biased filtering (map matching) to resolve your location (e.g. it statistically prefers to place you on a road).

You would need another very high-resolution positioning system, such as a military laser targeting system, to get meaningful reference measurements.

(I used to work on high resolution location systems for the defense industry)
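To illustrate road-biased filtering in its crudest form, here is a Python sketch that simply snaps a fix to the nearest point on a road polyline. This is a swapped-in simplification, not what any particular navigation app actually does; real map matching is probabilistic and also uses heading, speed and history. Coordinates are assumed to be local x/y in meters, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # local (x, y) coordinates in meters


def project_to_segment(p: Point, a: Point, b: Point) -> Point:
    """Closest point to p on the segment from a to b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return a
    # Parameter of the perpendicular foot, clamped to the segment ends.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return (a[0] + t * dx, a[1] + t * dy)


def snap_to_road(p: Point, road: List[Point]) -> Point:
    """Naive map matching: move p to the nearest point on a road polyline."""
    if len(road) < 2:
        raise ValueError("road needs at least two points")
    best, best_d = road[0], math.inf
    for a, b in zip(road, road[1:]):
        q = project_to_segment(p, a, b)
        d = math.hypot(q[0] - p[0], q[1] - p[1])
        if d < best_d:
            best, best_d = q, d
    return best
```

Even this toy version shows why a "corrected" position can land in the street while the car sits in a garage a few meters away.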


I wasn't referring to a driving/navigation app, but to the actual Google system: the lat/long grid using the World Geodetic System (WGS 84), which can pinpoint any position in the world.

I believe Mobileye used Google Maps to showcase their REM, if I'm remembering correctly.

It's not the actual maps but the lat/long coordinates...

World Geodetic System - Wikipedia
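On the resolution question: the WGS 84 lat/long grid is as fine as the number of decimal places you carry; in practice the limit is how accurately the map imagery is registered to that grid, which the coordinates alone can't tell you. A small Python sketch (spherical-Earth approximation, hypothetical function name) shows what one step in the last decimal place corresponds to on the ground. At 5 decimal places it works out to about 1.1 m north-south.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius (spherical approximation)


def decimal_resolution_m(decimals: int, lat_deg: float) -> Tuple[float, float]:
    """Ground size (north-south, east-west), in meters, of one unit in the
    last decimal place of a lat/long given to `decimals` places."""
    step_rad = math.radians(10.0 ** -decimals)
    north_south = step_rad * EARTH_RADIUS_M
    # Meridians converge toward the poles, so east-west steps shrink by cos(lat).
    east_west = north_south * math.cos(math.radians(lat_deg))
    return north_south, east_west
```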

@verygreen

BTW, has anybody figured out how to simply turn .h265 files into a video?


I used ffmpeg to convert it into the "rawvideo" format and ended up with a stream of raw 16-bit/px images: grayscale and quarter-size red images, interleaved. I didn't think about it for long and just modified the tool I was creating for color computation to convert them, frame by frame, into color BMP files, then compressed those BMPs back into an H.264 MP4. So keep in mind that I ended up with worse quality than the original.

Resulting replay from the main camera: main_replay - Streamable
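To make that pipeline more concrete, here is a rough Python sketch of the "split the rawvideo stream and recolor" step. The frame layout (gray frame first, little-endian uint16 samples, red frame at exactly half width and half height, even dimensions), the function names, and the simple false-color mix are all assumptions for illustration; this is not DamianXVI's actual color-computation tool, and the ffmpeg invocation isn't stated in the post.

```python
import struct
from typing import Iterator, List, Tuple


def split_interleaved(raw: bytes, width: int, height: int
                      ) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (gray, red) frame pairs from an interleaved 16-bit raw stream.

    Assumes each pair is one full-size gray frame followed by one
    quarter-size (half width, half height) red frame of little-endian
    uint16 samples. A trailing partial pair is ignored.
    """
    gray_px = width * height
    red_px = (width // 2) * (height // 2)
    pair_bytes = 2 * (gray_px + red_px)
    for offset in range(0, len(raw) - pair_bytes + 1, pair_bytes):
        gray = list(struct.unpack_from(f"<{gray_px}H", raw, offset))
        red = list(struct.unpack_from(f"<{red_px}H", raw, offset + 2 * gray_px))
        yield gray, red


def false_color(gray: List[int], red: List[int], width: int, height: int,
                shift: int = 8) -> List[Tuple[int, int, int]]:
    """Hypothetical false-color mix: R from the upsampled red frame, G and B
    from gray. `shift` drops low bits to fit 8-bit output."""
    half_w, half_h = width // 2, height // 2
    pixels = []
    for y in range(height):
        for x in range(width):
            # Nearest-neighbor upsampling of the quarter-size red frame.
            rx, ry = min(x // 2, half_w - 1), min(y // 2, half_h - 1)
            r = min(255, red[ry * half_w + rx] >> shift)
            g = min(255, gray[y * width + x] >> shift)
            pixels.append((r, g, g))
    return pixels
```

The right `shift` depends on how many bits the sensor actually fills in each 16-bit sample, which would need checking against the real data.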

LargeHamCollider

Battery cells != scalable

The M8L has an advertised circular error probability (CEP) of 1.5 meters.
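For reference, CEP is the radius of a circle, centered on the true position, that contains 50% of the position fixes, and 1.5 m is about 4.9 ft. So an advertised 1.5 m CEP means roughly half the fixes land within about 4.9 ft, with the rest further out. If someone logged fixes while parked on a point with known coordinates, a minimal Python sketch of the empirical estimate could look like this; the function name and the (east, north) input format are assumptions.

```python
import math
import statistics
from typing import Iterable, Tuple


def empirical_cep(errors_m: Iterable[Tuple[float, float]]) -> float:
    """Estimate CEP as the median horizontal error of a set of fixes.

    Each item is an (east, north) error in meters relative to a known
    reference point; roughly half the fixes fall within the result.
    """
    radii = [math.hypot(east, north) for east, north in errors_m]
    if not radii:
        raise ValueError("need at least one fix")
    return statistics.median(radii)
```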

Nice!

@lunitiks, oh look, there's a pedestrian in that video (and therefore in the images too).

So I plugged the coordinates from the raw and corrected fields into Google Maps.

Raw is in the middle of my garage; corrected is in the middle of the road in front of my house.

stopcrazypp

Well-Known Member

So presumably "corrected" is the road-biased one (assuming your car is in the garage). I'm surprised you can get a lock in your garage, but even so, I do wonder whether it affects accuracy.

I guess you can try the same when you get a chance at an open-air parking lot and see where it places your car versus the actual parking spot.


I was just about to say this... particularly where the markings haven't changed.

You can compare them with the satellite images and see the markings of whatever parking spot you're in.
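For the parking-spot comparison, it may help to turn the difference between the car's reported coordinates and a reference point (say, a corner of the spot read off the satellite image) into east/north meters, so you can see whether the car is shifted sideways or lengthwise. Here's a minimal Python sketch using a flat-Earth (equirectangular) approximation; the names and the reference-point approach are assumptions, and the caveat about satellite imagery accuracy raised earlier in the thread still applies.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius (spherical approximation)


def local_offset_m(ref_lat: float, ref_lon: float,
                   lat: float, lon: float) -> Tuple[float, float]:
    """(east, north) offset in meters of (lat, lon) from a reference point.

    Equirectangular approximation: fine over parking-lot distances,
    not for long baselines or near the poles.
    """
    mean_lat = math.radians((ref_lat + lat) / 2)
    east = math.radians(lon - ref_lon) * math.cos(mean_lat) * EARTH_RADIUS_M
    north = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return east, north
```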

AnxietyRanger

Well-Known Member

Christopher1

Member

While this is cool/amazing, and would give people access to a pretty good dashcam if Elon decides to enable it (along with on-board conversion, which might add some cost), Autopilot doesn't see the video like this: it sees it in complete grayscale.

