Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.

FSD Beta 10.69

- Thread starter Buckminster

Daniel in SD

(supervised)

> A simulation can only respond to the inputs given for the scenario. It can not simulate the irrational, unpredictable behavior of humans.

That's not what this discussion is about. The question is why isn't this behavior of FSD beta predictable in simulation? Why wasn't a scenario close enough to this caught by Monte Carlo simulation?

willow_hiller

Well-Known Member

> Why wasn't a scenario close enough to this caught by Monte Carlo simulation?

I'd venture that it wasn't caught because the safety performance of any given maneuver can be really subjective.

For that particular case, it seems to me that Chuck took over because he thought the vehicle was too slow to completely enter the median. But the only on-coming vehicle was in the middle lane, so the vehicle was in no imminent danger of a collision. You can model this scenario in a simulation and figure out how often the maneuver results in a collision; but you cannot use a simulation to model how safe a human in the car feels during the maneuver.

Daniel in SD

(supervised)

> I'd venture that it wasn't caught because the safety performance of any given maneuver can be really subjective.
>
> For that particular case, it seems to me that Chuck took over because he thought the vehicle was too slow to completely enter the median. But the only on-coming vehicle was in the middle lane, so the vehicle was in no imminent danger of a collision. You can model this scenario in a simulation and figure out how often the maneuver results in a collision; but you cannot use a simulation to model how safe a human in the car feels during the maneuver.

You can definitely create a model for how safe a human feels. They have a crazy amount of disengagement data to look at to analyze and see how comfortable people are with cars coming towards them at high speed. Watching the video again I do see your point that if Chuck hadn't disengaged there probably would not have been a collision even with no evasive action by the other car. That's an extremely rude way to drive though and not really practical for a system that needs to be monitored by humans.

Cheburashka

Active Member

Dude constantly says it was just a bump in the video comments, his rim is fine.

No way that was a dip in the road, he full-on hit that protruding curb at speed and went up a good 8". I'm afraid I don't believe him.

View looking back at the curb

View attachment 844407

Wow. I mean it sucks that FSD drove into the curb but also hard to feel bad for that guy.

AlanSubie4Life

Efficiency Obsessed Member

> but you cannot use a simulation to model how safe a human in the car feels during the maneuver.

You can definitely simulate that! You just have to make sure margins are sufficient.

We’ve looked at this disengagement before. There was no problem with the go decision. There was plenty of time to make a safe-feeling move. But then it limited its speed to 8-9mph, for traffic on the far side of the street, before exiting traffic lanes. This is why Chuck took over - it was just jogging across the street. Humans don’t like to be in traffic lanes with passing vehicles (even if they are not in the lane with the traffic).

This seems like an easy thing to simulate (and design) to ensure prompt exit from traffic lanes, with ample margins regardless of traffic on the far side of the road.

The other stuff Tesla is doing all the time and has accomplished is way more complicated than setting up this set of rules.

Anyway this can definitely be simulated.
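As a rough illustration of the kind of margin check described above, here is a minimal Monte Carlo sketch. Everything in it is a made-up assumption for illustration (three 12 ft lanes, the ranges for the oncoming car's distance and speed, the 3-second margin); none of it comes from Tesla or from the video.

```python
import random

MPH_TO_FPS = 5280 / 3600  # feet per second per mph


def time_in_lanes(crossing_mph, lanes=3, lane_width_ft=12.0):
    """Seconds needed to cross `lanes` traffic lanes at a constant speed."""
    return lanes * lane_width_ft / (crossing_mph * MPH_TO_FPS)


def low_margin_rate(crossing_mph, min_margin_s=3.0, trials=10_000, seed=0):
    """Fraction of sampled scenarios where the car exits the traffic lanes
    less than `min_margin_s` before the oncoming vehicle arrives."""
    rng = random.Random(seed)
    exit_time = time_in_lanes(crossing_mph)
    low = 0
    for _ in range(trials):
        gap_ft = rng.uniform(300, 900)    # assumed distance to oncoming car
        speed_mph = rng.uniform(45, 65)   # assumed oncoming speed
        arrival = gap_ft / (speed_mph * MPH_TO_FPS)
        if arrival - exit_time < min_margin_s:
            low += 1
    return low / trials


if __name__ == "__main__":
    for mph in (9, 20):
        print(mph, "mph:", round(time_in_lanes(mph), 2), "s in lanes,",
              "low-margin rate", low_margin_rate(mph))
```

With these assumed numbers, crossing at 9 mph keeps the car in the lanes for roughly 2.7 seconds versus roughly 1.2 seconds at 20 mph, so the slow crossing can only produce more low-margin cases for the same sampled traffic.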

willow_hiller

Well-Known Member

> Anyway this can definitely be simulated.

You can model subjective feelings of safety or discomfort, but how else do you validate your model other than testing it in real life?

Maybe they have run this scenario through a simulation, and their model predicted that occupants would feel safer slowly approaching the dense traffic ahead in scenarios where the traffic behind the vehicle is light. No reason that behavior couldn't have already come from a simulation, but if it did, the assumptions they programmed into the simulation have now been proven incorrect.

> Do we feel that FSD Beta saw the pole though?

Based on the visualization and driving behavior, it seems like FSD Beta 10.69 did not see the sign / pole / concrete base. But maybe it just wasn't actually that close. The keynote about occupancy network does show that skinny poles aren't necessarily a problem:

But then again, the occlusion detection based on occupancy that was presented was at very low speeds, so it's unclear whether getting accurate predictions at higher speeds "just" needs more training data, needs architectural changes, or isn't enabled in 10.69.

It'll be interesting to see how much Tesla keeps or splits among the moving-object, static-object, and occupancy networks, not just for visualization but also for driving behavior. Having explicit labels for, say, car vs bike vs pedestrian is useful for modeling each of their possible behaviors, but maybe that's the wrong approach anyway for some corner cases, e.g., a pedestrian hopping on/off of a moving trolley. But at least in the "base" case of an unidentified static object like this pole, the occupancy network is designed to catch these cases (although maybe not yet in 10.69).

> You can model subjective feelings of safety or discomfort, but how else do you validate your model other than testing it in real life?
>
> Maybe they have run this scenario through a simulation, and their model predicted that occupants would feel safer slowly approaching the dense traffic ahead in scenarios where the traffic behind the vehicle is light. No reason that behavior couldn't have already come from a simulation, but if it did, the assumptions they programmed into the simulation have now been proven incorrect.

There are some obvious parameters that you can use to evaluate passenger feelings of safety. You can measure the levels of lateral jerk, acceleration and deceleration. These are key areas that cause people to feel less confident about the car's driving. You can certainly measure the car's proximity to other objects in the simulation (cars, people, UFOs, etc). It is not difficult to come up with minimum proximity values for different types of objects based on speed and situation. You run your simulation and assess whether the car exceeded any of these comfort factors.

Tesla has referred to improving comfort in past release notes, so it's obviously something that they look at. I also seem to recall Musk stating a while back that they were, at that time, putting more effort into reducing disengagements than on improving comfort.
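For what it's worth, the comfort check described in this post could be sketched roughly like this. The limits, the names (`ComfortLimits`, `comfort_violations`) and the single fixed clearance value are all hypothetical placeholders; a real check would presumably vary the clearance by object type and speed, as the post suggests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComfortLimits:
    # All three limits are illustrative assumptions, not measured values.
    max_accel: float = 3.0      # m/s^2, applies to acceleration and braking
    max_jerk: float = 2.0       # m/s^3
    min_clearance: float = 1.0  # m, distance to the nearest object


def jerk(accels, dt):
    """Finite-difference jerk from evenly spaced acceleration samples."""
    return [(b - a) / dt for a, b in zip(accels, accels[1:])]


def comfort_violations(accels, clearances, dt, limits=ComfortLimits()):
    """Return the names of the comfort factors exceeded in one simulated run."""
    violations = []
    if any(abs(a) > limits.max_accel for a in accels):
        violations.append("acceleration")
    if any(abs(j) > limits.max_jerk for j in jerk(accels, dt)):
        violations.append("jerk")
    if any(c < limits.min_clearance for c in clearances):
        violations.append("clearance")
    return violations
```

A simulated run would pass its sampled accelerations and nearest-object distances to `comfort_violations`, and any non-empty result flags the run for review.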

willow_hiller

Well-Known Member

For future reference, you can frame reverse / frame advance through a YouTube video with the comma and period keys on your keyboard respectively.

At about 25 frames prior to the pole being approximately at the front of the vehicle, you can see the intent-line make a deliberate shift to the left. And then twice at about 17 frames prior to the pole, and 13 frames prior to the pole, you can see it render on the FSD visualization.

This video runs at 30 FPS, and 25 MPH is about 36 feet per second. So we can estimate that FSD first diverted its path for the pole about 30 feet prior to it, and it was first rendered on the screen about 20 feet prior to it. EDIT: Also just realized that this segment is running at at least 8x speed due to the speed of the hand-gestures. So it's possible it first saw the pole closer to 80 yards away, and first rendered it on the screen closer to 50 yards away.
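The arithmetic behind those estimates, as a quick sketch. The function name and the `playback_speedup` parameter are just illustrative; the 30 FPS, 25 MPH and 8x figures are the ones assumed in the post.

```python
MPH_TO_FPS = 5280 / 3600  # feet per second per mph


def distance_before_pole_ft(frames, video_fps=30, speed_mph=25, playback_speedup=1):
    """Distance travelled during `frames` video frames, in feet.

    `playback_speedup` is how much faster than real time the clip plays
    (1 for normal speed, 8 for the sped-up segment assumed in the post).
    """
    real_seconds = frames / video_fps * playback_speedup
    return real_seconds * speed_mph * MPH_TO_FPS


if __name__ == "__main__":
    for frames in (25, 17):
        normal_ft = distance_before_pole_ft(frames)
        sped_up_yd = distance_before_pole_ft(frames, playback_speedup=8) / 3
        print(f"{frames} frames: {normal_ft:.0f} ft at 1x, {sped_up_yd:.0f} yd at 8x")
```

This gives roughly 31 ft and 21 ft at normal speed, and roughly 81 yd and 55 yd if the clip really is playing at 8x, which lines up with the rounded figures above.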

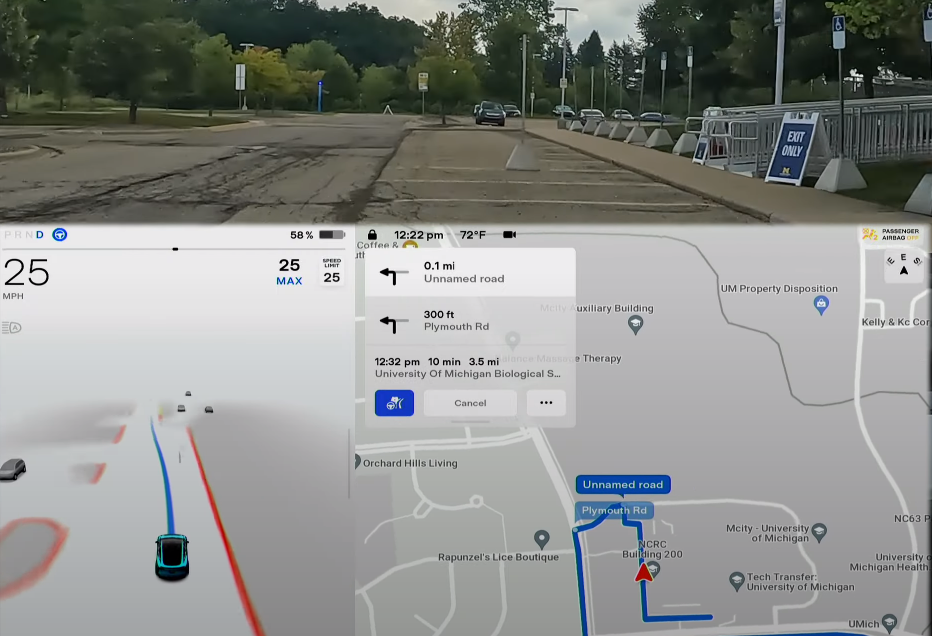

So there's no question that FSD saw the pole in a parking lot while traveling at 25 MPH. Here's a screenshot from 13 frames prior.

> So we can estimate that FSD first diverted its path for the pole about 30 feet prior to it, and it was first rendered on the screen about 20 feet prior to it

Good catch about something related to the pole showing up in the visualization, but I have my doubts that it diverted path due to the pole vs the curb ahead where the parking spots end. The "pole" actually appears twice in the visualization different from the screenshot you shared:

It clearly is drawing some line and the "bumpy" gray just to the top/right of it.

Additionally, after going past it, the repeater camera probably had a little bit more contrast with a view from closer to the ground to result in more occupancy visualization (for a single frame in this sped up video):

sleepydoc

Well-Known Member

> For future reference, you can frame reverse / frame advance through a YouTube video with the comma and period keys on your keyboard respectively.
>
> At about 25 frames prior to the pole being approximately at the front of the vehicle, you can see the intent-line make a deliberate shift to the left. And then twice at about 17 frames prior to the pole, and 13 frames prior to the pole, you can see it render on the FSD visualization.
>
> This video runs at 30 FPS, and 25 MPH is about 36 feet per second. So we can estimate that FSD first diverted its path for the pole about 30 feet prior to it, and it was first rendered on the screen about 20 feet prior to it. EDIT: Also just realized that this segment is running at at least 8x speed due to the speed of the hand-gestures. So it's possible it first saw the pole closer to 80 yards away, and first rendered it on the screen closer to 50 yards away.
>
> So there's no question that FSD saw the pole in a parking lot while traveling at 25 MPH. Here's a screenshot from 13 frames prior.

In all fairness, that pole isn't the easiest to see for me. It may have been easier in real life but in the static picture it kind of blends into the other poles on the other side of the sidewalk.

View attachment 844520

sleepydoc

Well-Known Member

> Maybe, I'm just curious why simulation doesn't work in this case. It's hard for me to imagine them getting to the performance required for driverless operation if they haven't figured out how to use data from the fleet to solve Chuck's ULT.

I get the feeling that you're overestimating the abilities of simulation and/or don't understand how much goes into a simulation. (Don't take this wrong, I don't mean it disparagingly.) I work in healthcare and we do simulations, but even with decades of experience the simulations are still poor models of a complex biological system. This is similar - it's a highly complex and dynamic system with numerous inputs that Tesla is still developing and has relatively limited experience with. If you don't understand the complexities at this point there's not much point in continuing the conversation.

What I would like to know is why it often signals when going around a curve (sometimes even the wrong signal direction) BUT rarely signals when moving into a turn lane.

For things like roundabouts here you are required to signal; even if you're going in a constant circle.

Almost no one does though.

sleepydoc

Well-Known Member

> For things like roundabouts here you are required to signal; even if you're going in a constant circle.
>
> Almost no one does though.

Where is 'here?' In MN you're not.

> Tesla will work them when and if they decide to work them. You don't necessarily work all the simple ones first.

Yup totally agree, and as I would expect, it seems like Tesla is prioritizing what's important to them although frequency of issue could be an additional differentiator. Pretty high on their list is safety such as improving predictions about intersections so that FSD Beta doesn't drive into oncoming traffic. There can also be "uninteresting" improvements that satisfy business needs such as preparing for single stack highway driving where the marginal benefit to users might seem little given that Navigate on Autopilot is already quite capable.

Similarly, earlier 10.x releases had various improvements to some "visibility network" to improve creeping behavior, as well as improvements to static objects, etc. But given a hard problem like Chuck Cook's unprotected left turn, it became clearer to Tesla that their existing approaches were insufficient, requiring the new occupancy network, which solves multiple issues at the same time while increasing the overall capability of the system. So in this case, 10.69 simultaneously addressed some safety issues as well as an internal business / technical desire to clean up multiple legacy approaches.

Unfortunately, this can make "obvious" annoyances seem even more annoying when Tesla isn't getting around to fixing them. But at least the Autopilot team is large enough to not focus only on "safety-only" issues: with 10.69, there seem to be quite a few improvements related to polish / smoothness.

hamoneaster

Member

Anyone know if the FSD strikes get reset with new releases? I have 2 that I got due to the oversensitive cabin cam. Afraid I'll get more!! lol

> Why signal out of courtesy? What benefit does that serve? Honestly it’s discourteous as it is extraneous noise that could interfere with legitimate signals.

Because you are warning anyone in the other lane that you are merging? Doesn't seem extraneous to me.

willow_hiller

Well-Known Member

Elon clarifying that the "early Beta" 1,000 is mostly employees:

I wonder if the early group is now 999 testers...
